As 2025 draws to a close, the debate around the best AI in 2025 has become increasingly data-driven rather than speculative. With only days left in the year, evaluation rankings and market signals have largely stabilized.

Based on current LM Arena data, Gemini-3-Pro stands out as the most likely model to finish 2025 at the top of the leaderboard.

To assess this outcome, I use Powerdrill Bloom to analyze observable rankings, evaluation data, and residual sources of uncertainty within a widely referenced evaluation framework.

How “Best AI” Is Defined and Evaluated in 2025

In this analysis, “best AI” does not refer to a single benchmark score or a marketing claim. Instead, it is defined by overall performance on the LM Arena leaderboard, which aggregates large-scale, head-to-head human preference evaluations across multiple task categories.

LM Arena is not only widely cited in research discussions, but also used as a settlement reference by prediction markets, making it a practical and outcome-relevant evaluation standard rather than an abstract one.

To assess year-end outcomes within this framework, I use Powerdrill Bloom to analyze ranking stability, vote volume, category coverage, and remaining time to settlement—factors that materially influence whether late-stage reversals are realistically possible.

LM Arena Rankings at Year-End 2025

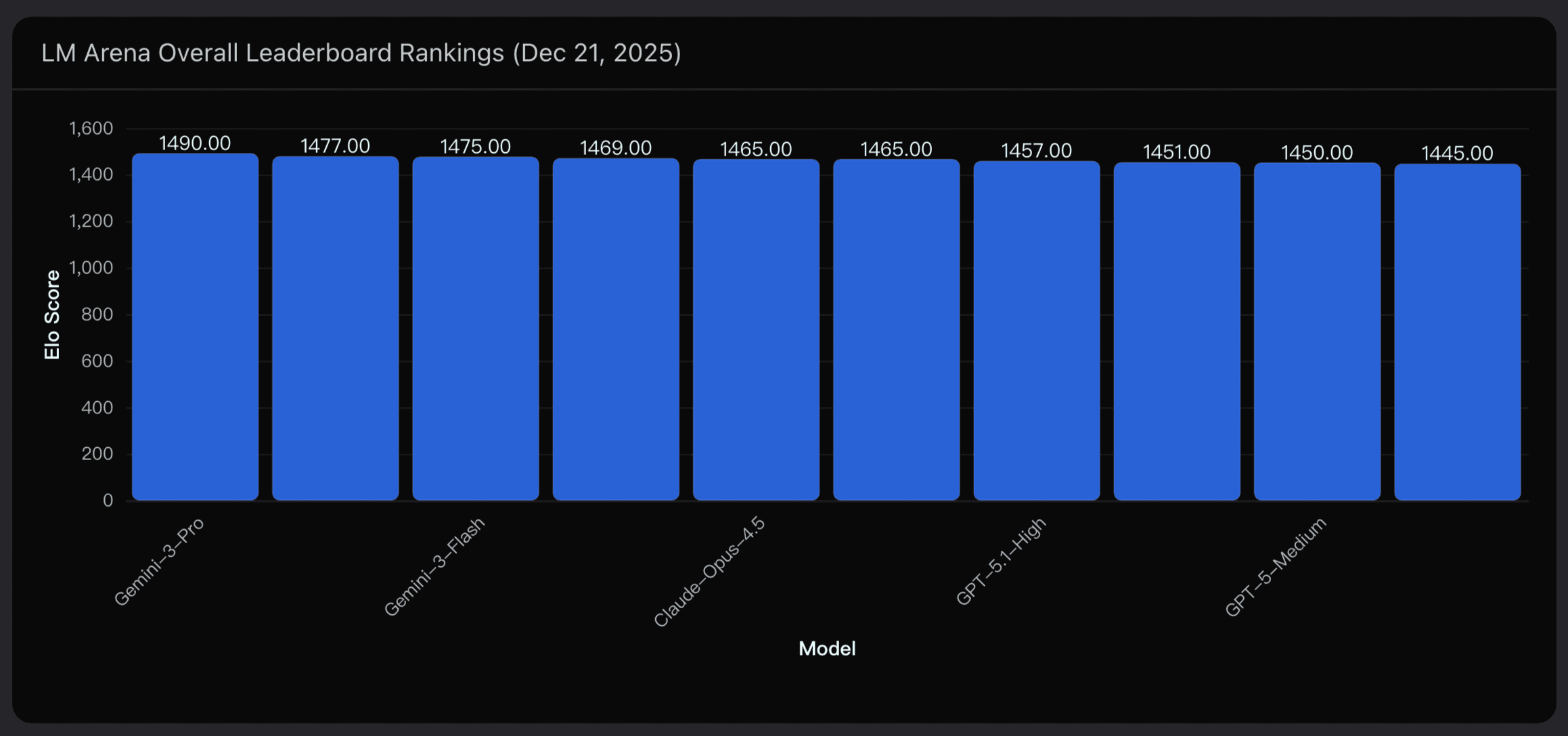

As of December 21, 2025, the LM Arena overall leaderboard shows a clear ordering at the top:

Gemini-3-Pro: 1490 Elo

Grok-4.1-Thinking: 1477 Elo

Gemini-3-Flash: 1475 Elo

Claude-Opus-4.5-32K: 1469 Elo

Gemini-3-Pro holds a 13-point Elo lead over its nearest competitor, Grok-4.1-Thinking (1490 vs. 1477). In Elo-based systems, gaps of this size at the top of the distribution are non-trivial, especially this late in the evaluation cycle.
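To put the gap in concrete terms, the sketch below converts the rating differences quoted above into expected head-to-head win rates. It assumes the textbook logistic Elo formula with a 400-point scale; LM Arena's own fitting procedure may differ, so the function name `elo_expected_score` and the `scale` parameter are illustrative rather than anything taken from LM Arena.

```python
def elo_expected_score(rating_a: float, rating_b: float, scale: float = 400.0) -> float:
    """Expected score of A against B under the textbook logistic Elo model.

    Assumption: a 400-point scale, as in classic Elo. This is not
    necessarily how LM Arena computes or interprets its scores.
    """
    return 1.0 / (1.0 + 10.0 ** ((rating_b - rating_a) / scale))


# Ratings quoted in the article (LM Arena overall, December 21, 2025).
ratings = {
    "Gemini-3-Pro": 1490,
    "Grok-4.1-Thinking": 1477,
    "Gemini-3-Flash": 1475,
    "Claude-Opus-4.5-32K": 1469,
}

leader = "Gemini-3-Pro"
gaps = {}
for name, rating in ratings.items():
    if name == leader:
        continue
    gap = ratings[leader] - rating
    win_rate = elo_expected_score(ratings[leader], rating)
    gaps[name] = (gap, win_rate)
    print(f"{leader} vs {name}: +{gap} Elo, expected win rate {win_rate:.1%}")
```

Under these assumptions a 13-point lead maps to an expected win rate only slightly above 50%, so the lead matters less for its size than for how stable it is, which is where vote volume comes in.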

Equally important is timing. At the point of this analysis, only nine days remain until year-end. That sharply limits the opportunity for ranking reversals.

Why Gemini-3-Pro Leads the Leaderboard

Gemini-3-Pro’s lead at the top of the LM Arena leaderboard is not a short-term spike but the result of sustained, broad-based performance. It has held the #1 position since March 2025, building a 13-point Elo advantage that is difficult to erode late in the evaluation cycle.

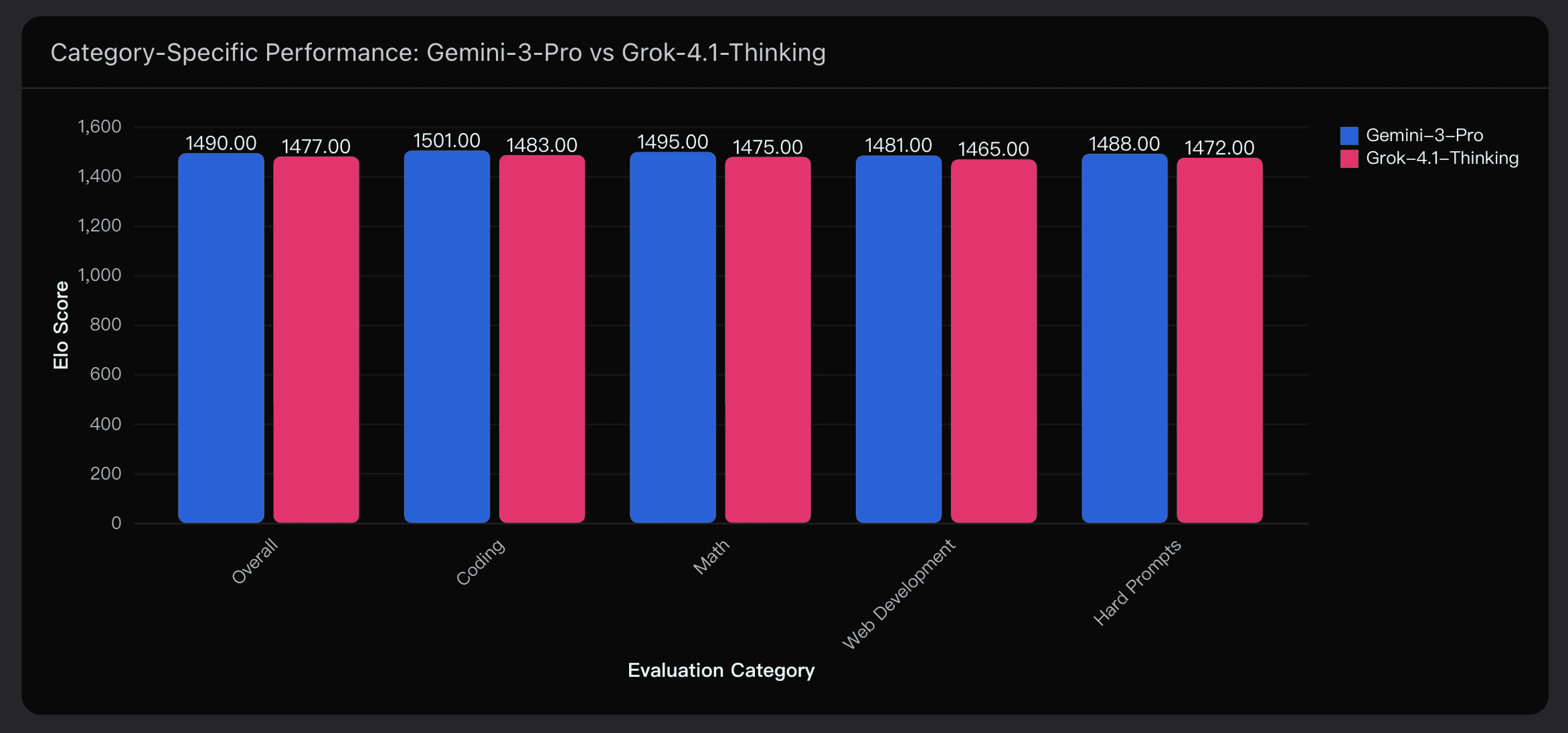

Crucially, this lead is not driven by dominance in a single task area. Across major evaluation categories, Gemini-3-Pro consistently outperforms its closest competitor, Grok-4.1-Thinking:

Coding: 1501 Elo (11-point lead)

Mathematical reasoning: 1495 Elo

Web development: 1481 Elo

Hard prompts: 1488 Elo

This pattern indicates a genuinely broad performance profile, where strength across multiple domains compounds into a durable overall ranking advantage.

Benchmark Results That Support the Ranking

Beyond LM Arena rankings, Gemini-3-Pro’s performance is supported by results from several independent benchmarks:

AIME 2025: 95% accuracy without tools, 100% with code execution

GPQA Diamond: 91.9% accuracy

SWE-bench Verified: 76.2% on software engineering tasks

ARC-AGI-2: 31.1%, compared with 4.9% for Gemini 2.5 Pro

MathArena Apex: 23.4%, versus 0.5% for Gemini 2.5 Pro

These results reinforce the idea that the leaderboard position is not an outlier but aligns with broader evaluation data.

Ranking Stability and Market Consensus

One of the strongest signals in this analysis is ranking stability, reinforced by both vote volume and market expectations.

Gemini-3-Pro’s position is based on 19,199 head-to-head comparisons accumulated since March 2025. At this scale, LM Arena’s Elo system becomes highly resistant to short-term fluctuations.

Historically, top-ranked models require tens of thousands of additional comparisons to shift even a single position. With only days remaining in the year, the statistical inertia strongly favors the current ordering.
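The link between vote volume and stability can be illustrated with a rough back-of-the-envelope estimate. The sketch below is not LM Arena's published method: it assumes independent comparisons, a win rate near 50% against similarly rated opponents, and the textbook 400-point Elo scale, then uses the delta method to turn the sampling error of a win rate into Elo points. The helper `elo_standard_error` is hypothetical.

```python
import math


def elo_standard_error(n_comparisons: int, win_rate: float = 0.5,
                       scale: float = 400.0) -> float:
    """Approximate standard error, in Elo points, after n comparisons.

    Assumptions (simplifications, not LM Arena's method):
    - comparisons are independent Bernoulli trials with the given win rate
    - rating difference = scale * log10(p / (1 - p))
    """
    # Standard error of the observed win rate.
    se_p = math.sqrt(win_rate * (1.0 - win_rate) / n_comparisons)
    # Slope d(rating)/d(p) of the Elo transform (delta method).
    slope = scale / (math.log(10) * win_rate * (1.0 - win_rate))
    return se_p * slope


votes = 19_199  # comparison count quoted in the article
se = elo_standard_error(votes)
se_4x = elo_standard_error(4 * votes)

print(f"Approximate standard error at {votes:,} votes: {se:.2f} Elo")
print(f"Approximate standard error at {4 * votes:,} votes: {se_4x:.2f} Elo")
```

Because the error shrinks with the square root of the vote count, quadrupling the votes only halves it. The same relationship explains why a rating backed by many comparisons moves slowly when a few days of new votes arrive.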

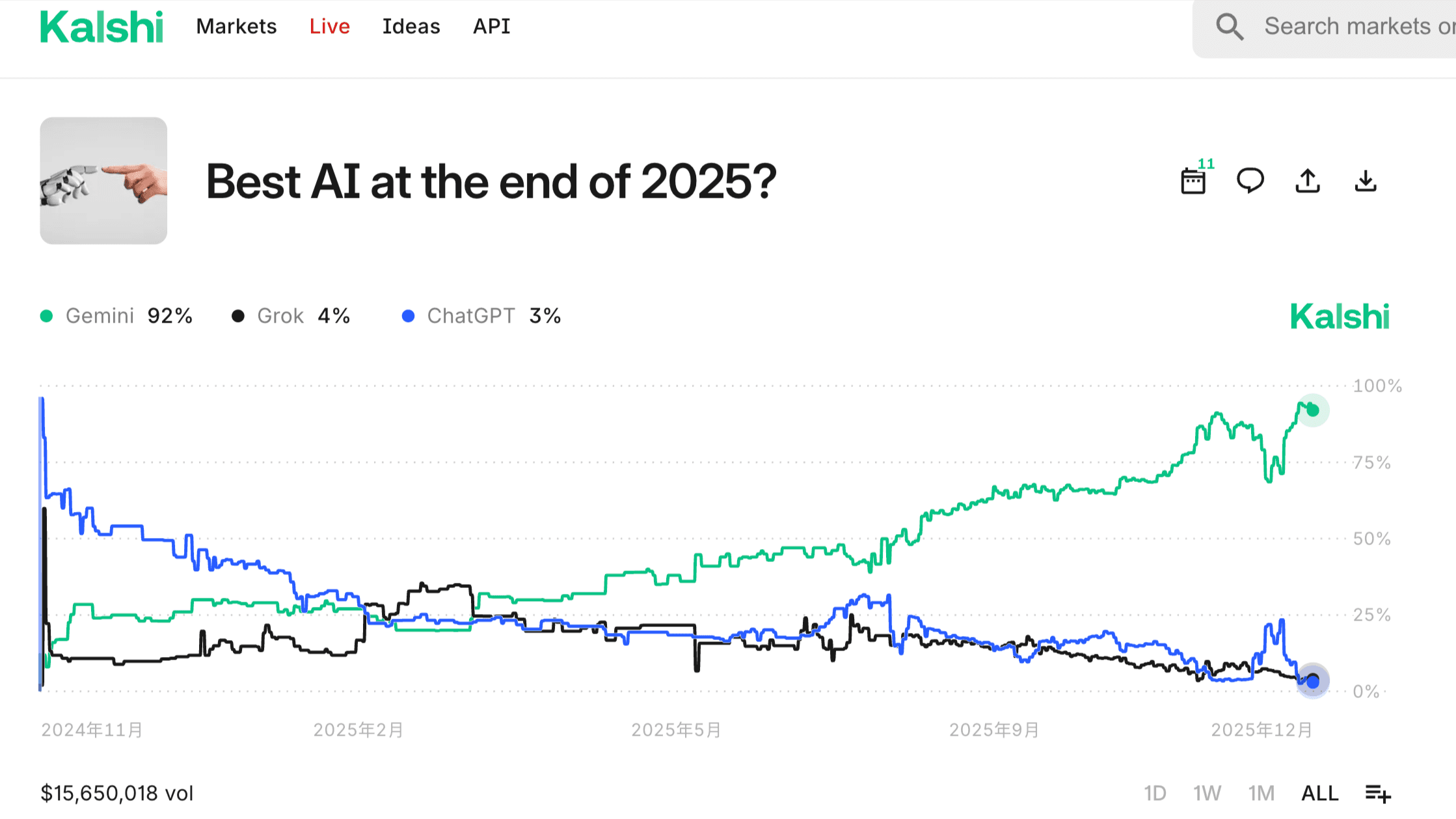

Prediction markets provide a complementary perspective. On Kalshi, which settles outcomes based on LM Arena rankings, Gemini-3-Pro’s implied probability had risen to over 75% by December 2025.

Importantly, this increase was gradual, up from roughly 40% in August, and it tracked the accumulation of evaluation data rather than a sudden speculative spike.

Taken together, high vote-volume stability and converging market expectations point to a low likelihood of late-stage reversals.

What Could Still Change Before Year-End?

Despite the strength of the evidence, the analysis does not assume certainty. A residual 10–15% uncertainty remains. The low-probability scenarios itemized here account for roughly 8–11% of it, with the remainder not attributed to any single cause:

Unreleased model launches capable of rapidly accumulating votes (~2–3%)

Methodology or prompt distribution changes within LM Arena (~2–3%)

Settlement ambiguities in the event of tied rankings (~2%)

Statistical noise at extreme Elo levels (~2–3%)

Importantly, none of these risks stem from weakness in Gemini-3-Pro’s observed performance. They are structural or procedural in nature.
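The scenario estimates can be totalled to check them against the stated 10–15% residual. The sketch below treats the scenarios as mutually exclusive so that their probabilities simply add, which is an assumption rather than something the analysis states; the dictionary keys are shorthand labels for the four scenarios.

```python
# (low, high) probability ranges in percent, as listed in the article.
risk_ranges = {
    "unreleased model launch": (2.0, 3.0),
    "methodology or prompt distribution change": (2.0, 3.0),
    "settlement ambiguity on tied rankings": (2.0, 2.0),
    "statistical noise at extreme Elo levels": (2.0, 3.0),
}

# Assumption: scenarios are mutually exclusive, so probabilities add.
itemized_low = sum(low for low, _ in risk_ranges.values())
itemized_high = sum(high for _, high in risk_ranges.values())

# Residual uncertainty range stated in the article, in percent.
stated_low, stated_high = 10.0, 15.0

# Portion of the stated residual not covered by the itemized scenarios.
unattributed_low = max(0.0, stated_low - itemized_high)
unattributed_high = max(0.0, stated_high - itemized_low)

print(f"Itemized scenarios: {itemized_low:.0f}-{itemized_high:.0f}%")
print(f"Stated residual:    {stated_low:.0f}-{stated_high:.0f}%")
print(f"Unattributed:       {unattributed_low:.0f}-{unattributed_high:.0f}%")
```

On this reading, up to 7 percentage points of the stated residual are a general margin that no single scenario explains.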

Final Take: Best AI at the End of 2025

Based on the available data, Gemini-3-Pro enters the final days of 2025 with a strong and durable lead. A 13-point Elo advantage, nine months of uninterrupted leadership, cross-category strength, and high vote-volume stability collectively point to a highly persistent ranking.

Using Powerdrill Bloom to organize these signals into a single analytical view makes it easier to separate meaningful indicators from noise—especially in environments where hype can easily overwhelm evidence.

Barring an unforeseen development in the final days of December, the most data-supported outcome is that Gemini-3-Pro finishes 2025 as the top-ranked AI under the LM Arena evaluation framework.

Disclaimer: This assessment reflects data-driven evaluation based on available information and does not guarantee future outcomes or definitive rankings.