SoK: On the Offensive Potential of AI

Saskia Laura Schröer, Giovanni Apruzzese, Soheil Human, Pavel Laskov, Hyrum S. Anderson, Edward W. N. Bernroider, Aurore Fass, Ben Nassi, Vera Rimmer, Fabio Roli, Samer Salam, Ashley Shen, Ali Sunyaev, Tim Wadhwa-Brown, Isabel Wagner, Gang Wang · December 24, 2024

Summary

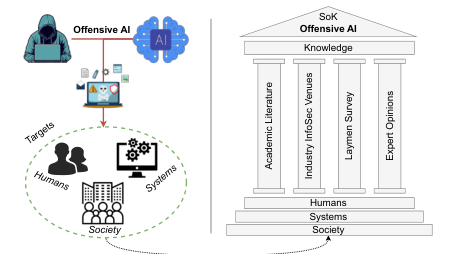

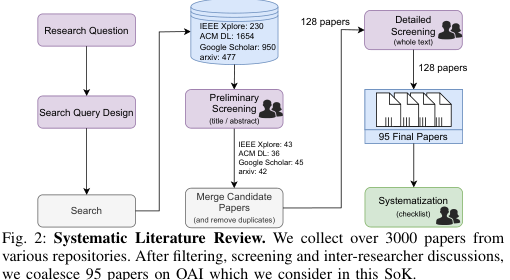

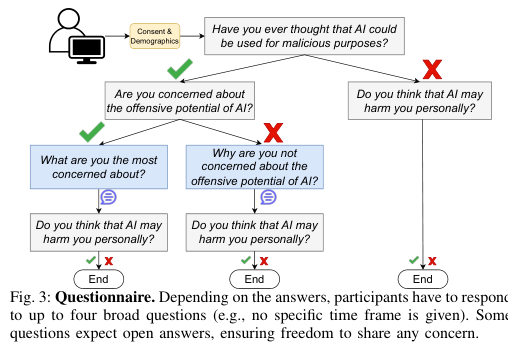

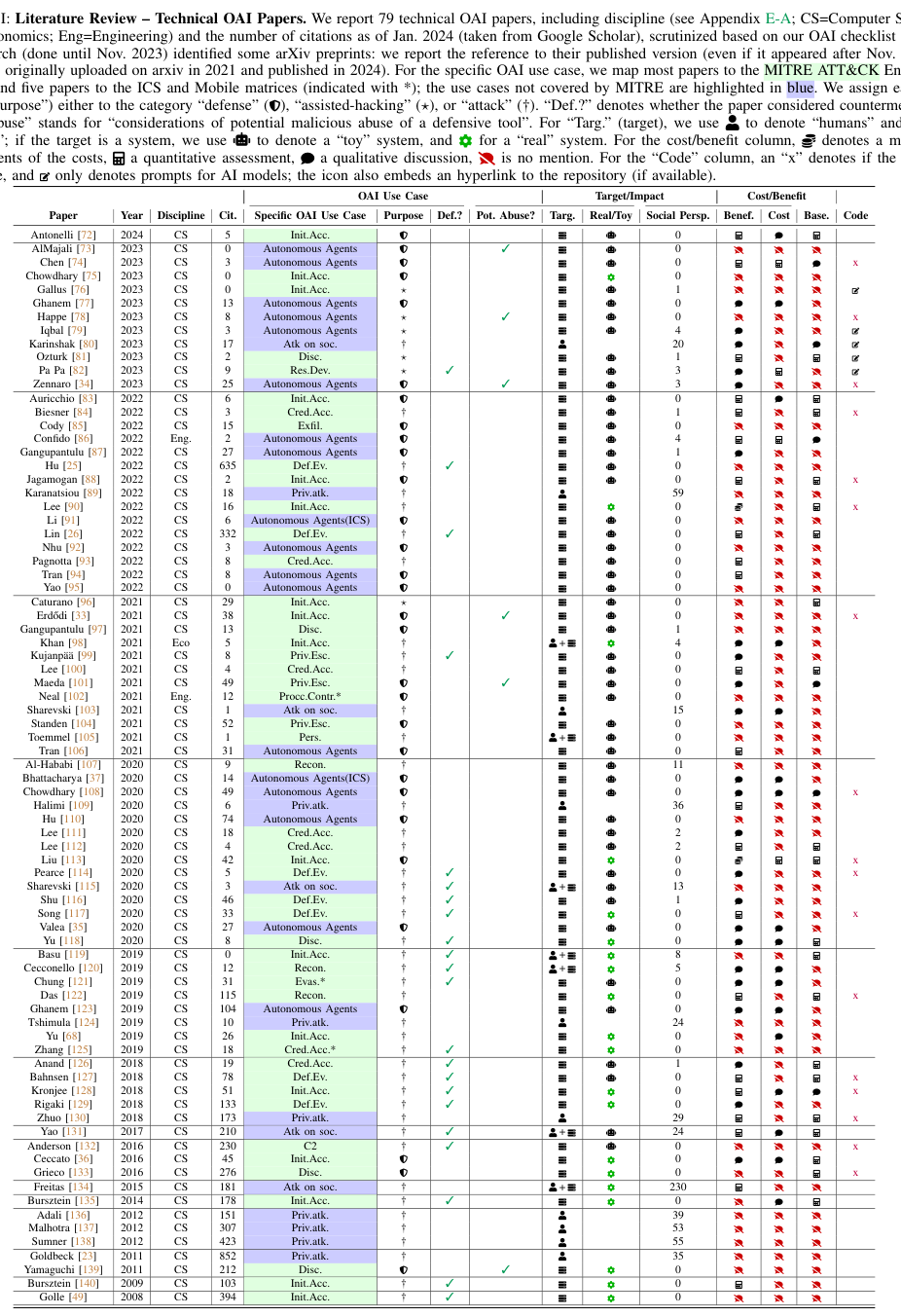

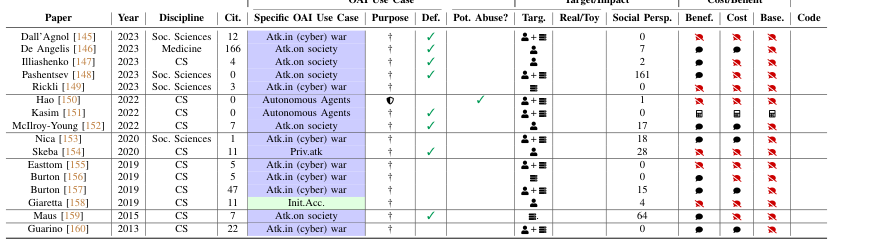

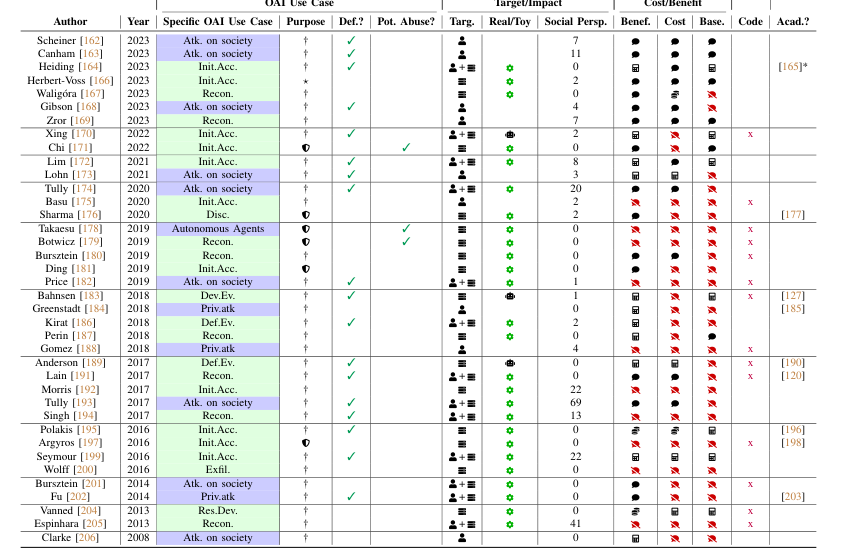

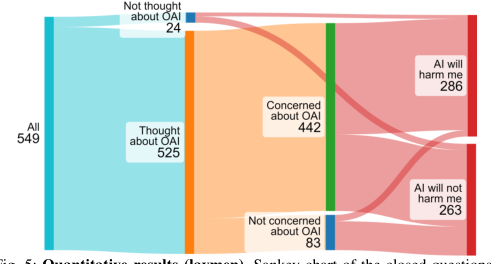

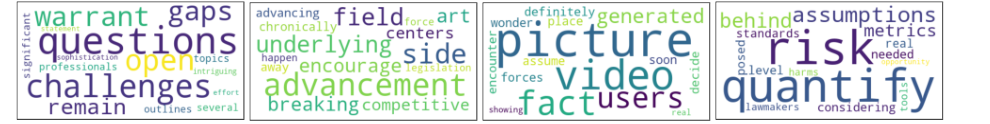

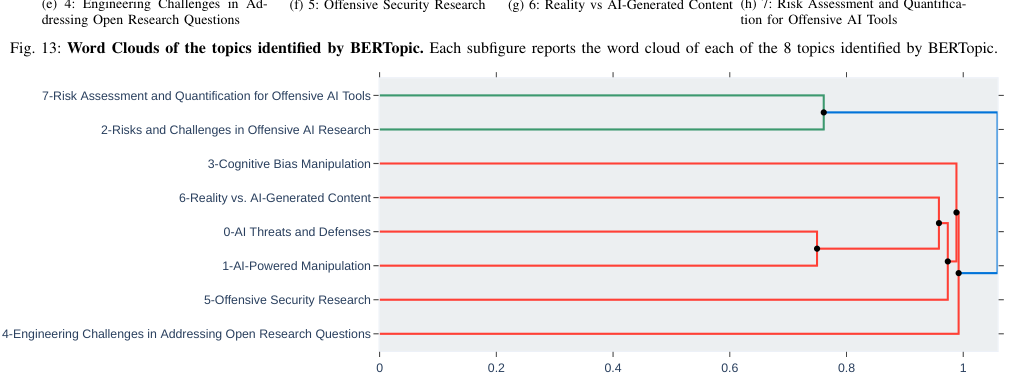

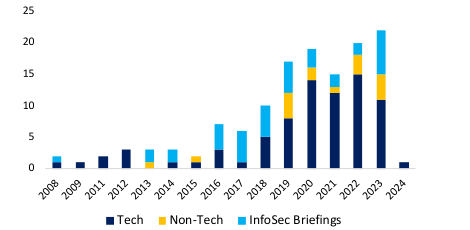

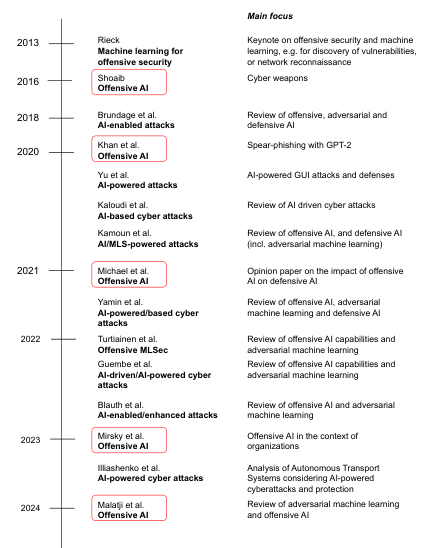

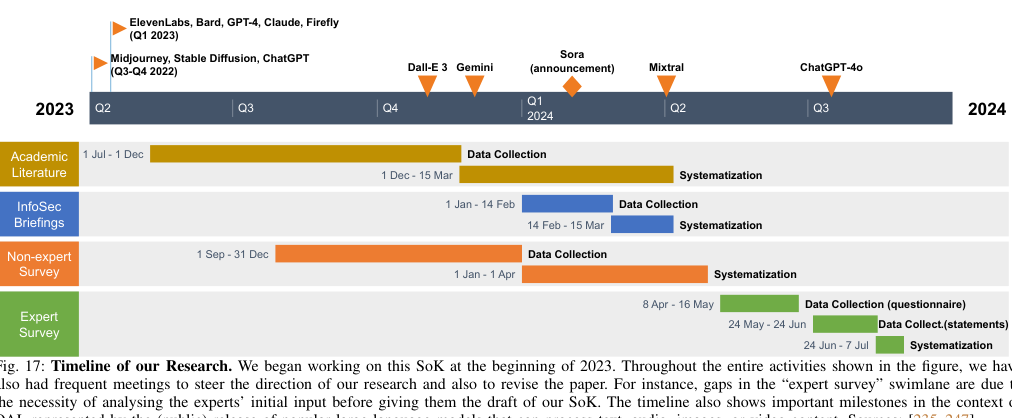

This Systematization of Knowledge (SoK) paper examines the risks of offensive AI (OAI), that is, the potential of AI to be used for malicious purposes. It synthesizes academic, industry, expert, and layperson perspectives drawn from 95 research papers, 38 InfoSec briefings, and user studies. Its key contributions are a snapshot of how AI is used offensively today, a long-term technological classification, and an analysis that combines these diverse sources of knowledge. The paper also presents an online tool for addressing offensive AI and an actionable research agenda for future work. It highlights gaps in prior literature, which overlooks certain use cases such as attribute inference attacks.

For the literature analysis, the authors examined 3,311 papers and 38 briefings on offensive applications of AI, covering AI security, cyber attacks, and privacy threats. Dual reviewing with adjudication was used to mitigate bias, yielding 95 unique works: 16 non-technical and 79 technical papers. The analysis spans academic literature, non-academic sources, and content from renowned security events. The study also surveys experts, identifying ten open problems and concerns in the field, and uses a questionnaire to gather non-expert opinions on OAI, disseminated through various channels to ensure a diverse respondent pool. Together, these sources reveal concerns about AI's potential for malicious use.