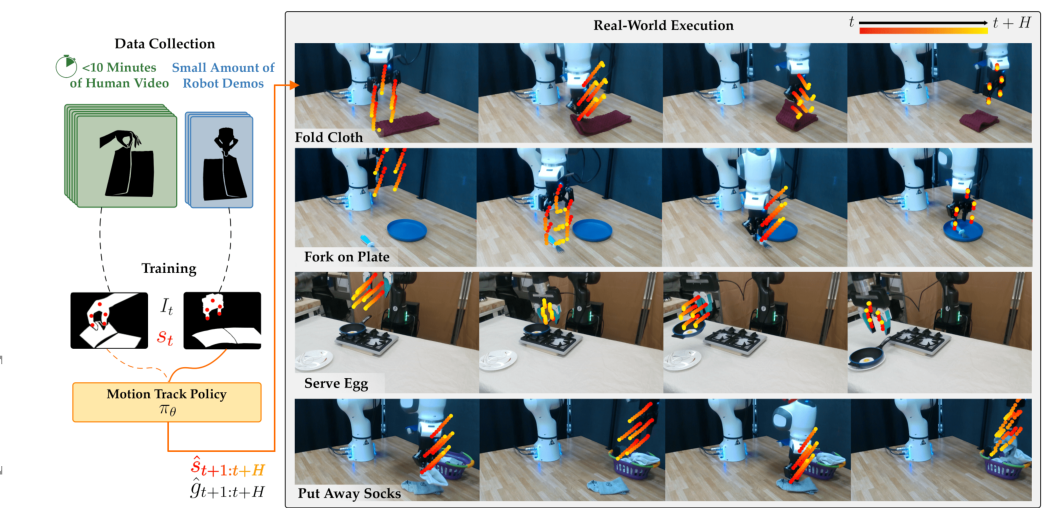

Motion Tracks: A Unified Representation for Human-Robot Transfer in Few-Shot Imitation Learning

Summary

Paper digest

What problem does the paper attempt to solve? Is this a new problem?

The paper addresses the challenge of learning a visuomotor policy primarily from human video demonstrations while using only a limited amount of robot demonstration data. The approach aims to bridge the embodiment gap between human and robot actions: traditional methods often struggle with the significant morphological differences between human and robot hands, which can hinder effective imitation learning.

This problem is not entirely new, as it builds upon existing research in imitation learning and human-robot interaction. However, the paper proposes a novel solution by introducing a unified action space that is compatible with both human and robot embodiments, allowing for better generalization and performance in robotic manipulation tasks.

What scientific hypothesis does this paper seek to validate?

The paper "Motion Tracks: A Unified Representation for Human-Robot Transfer in Few-Shot Imitation Learning" seeks to validate the hypothesis that a unified action space, represented as 2D trajectories in the image plane, can effectively bridge the embodiment gap between human and robot actions. This approach aims to enhance the learning of visuomotor policies by leveraging mostly human video demonstrations alongside a smaller set of robot demonstrations, thereby improving generalization to novel scenarios and reducing the data collection burden typically associated with imitation learning.

What new ideas, methods, or models does the paper propose? What are the characteristics and advantages compared to previous methods?

The paper "Motion Tracks: A Unified Representation for Human-Robot Transfer in Few-Shot Imitation Learning" introduces several innovative ideas, methods, and models aimed at enhancing robotic manipulation through imitation learning. Below is a detailed analysis of the key contributions:

1. Motion Track Policy (MT-π)

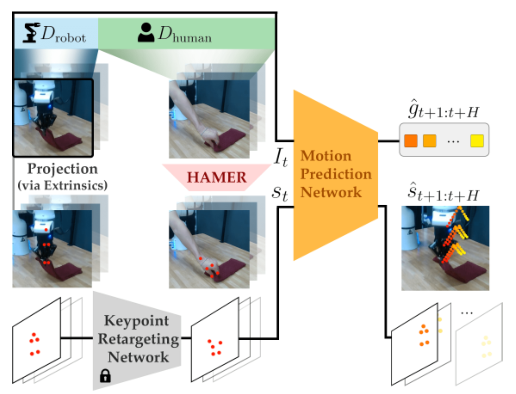

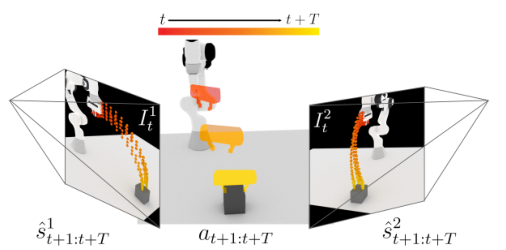

The core contribution of the paper is the Motion Track Policy (MT-π), a novel imitation learning algorithm that uses a cross-embodiment action space for robotic manipulation. The policy predicts the future movement of a manipulator as 2D trajectories on images, a representation that applies equally to human hands and robot end-effectors. This simplifies the representation while still allowing full recovery of 6DoF (six degrees of freedom) positional and rotational robot actions through 3D reconstruction from corresponding 2D tracks captured from different views.
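As a rough illustration of the 3D reconstruction step, the sketch below triangulates a keypoint track from two calibrated views using standard linear (DLT) triangulation. This digest does not specify the paper's exact procedure, so the function names and the assumption that both 3x4 camera projection matrices are known are illustrative only.

```python
import numpy as np

def triangulate_point(P1, P2, uv1, uv2):
    """Linear (DLT) triangulation of one 3D point from two pixel observations.

    P1, P2: 3x4 camera projection matrices (assumed known from calibration).
    uv1, uv2: (u, v) pixel coordinates of the same keypoint in each view.
    """
    u1, v1 = uv1
    u2, v2 = uv2
    # Each observation contributes two linear constraints on the homogeneous point.
    A = np.stack([
        u1 * P1[2] - P1[0],
        v1 * P1[2] - P1[1],
        u2 * P2[2] - P2[0],
        v2 * P2[2] - P2[1],
    ])
    # The solution is the right singular vector with the smallest singular value.
    _, _, vt = np.linalg.svd(A)
    X = vt[-1]
    return X[:3] / X[3]

def triangulate_track(P1, P2, track1, track2):
    """Triangulate a whole track: two lists of (u, v) pixels, one per view."""
    return np.array([
        triangulate_point(P1, P2, a, b) for a, b in zip(track1, track2)
    ])
```

Calling `triangulate_track` on the predicted tracks from two cameras yields one 3D position per predicted timestep. With noisy predictions the two views will not agree exactly, and the least-squares solution absorbs the disagreement.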

2. Unified Action Space

MT-π introduces a unified action space that aligns actions from human and robot embodiments. By representing actions as 2D trajectories in the image plane, the method disentangles motion differences from visual differences, which helps bridge the embodiment gap. This allows the model to be trained on a hybrid dataset comprising primarily human videos and a smaller set of robot demonstrations, significantly reducing the data burden typically associated with imitation learning.
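To make the idea of a shared action format concrete, here is a minimal sketch in which the action at each timestep is simply the next few 2D keypoint positions in the image. The helper name `future_track_actions`, the horizon value, and the pixel tracks are hypothetical; the point is only that human and robot tracks pass through the same code path.

```python
import numpy as np

def future_track_actions(track_2d, horizon):
    """Turn a (T, 2) pixel track into per-timestep action targets.

    The action at time t is the next `horizon` pixel positions, so the
    result has shape (T - horizon, horizon, 2).
    """
    track_2d = np.asarray(track_2d, dtype=float)
    num_steps = len(track_2d) - horizon
    if num_steps <= 0:
        raise ValueError("track must be longer than the horizon")
    return np.stack(
        [track_2d[t + 1 : t + 1 + horizon] for t in range(num_steps)]
    )

# Hypothetical pixel tracks of one keypoint: a human hand and a robot gripper.
human_track = np.array([[100, 200], [104, 198], [109, 195], [115, 191], [122, 186]])
robot_track = np.array([[300, 220], [298, 215], [295, 209], [291, 202]])

# Both embodiments produce targets in the same image-plane format.
human_actions = future_track_actions(human_track, horizon=2)  # shape (3, 2, 2)
robot_actions = future_track_actions(robot_track, horizon=2)  # shape (2, 2, 2)
```

Because the targets carry no joint angles or end-effector poses, samples from both sources can be mixed in one training batch under this sketch.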

3. Sample Efficiency

The proposed method is designed to be sample-efficient, meaning it can achieve high performance with fewer robot demonstrations. The empirical results indicate that MT-π outperforms existing state-of-the-art imitation learning methods by an average of 40% across various real-world tasks. This efficiency is particularly beneficial because the method relies heavily on easily accessible human video data, which can be collected quickly, while requiring only a limited number of robot demonstrations for effective training.

4. Handling of Human Video Data

The paper emphasizes the capability of MT-π to generalize to novel scenarios by leveraging human video data. The approach is built to handle the complexities of human interactions and motions, allowing the model to learn from diverse human demonstrations without needing extensive robot data. This is a significant advancement in the field, as it opens up possibilities for robots to learn from real-world human activities.

5. Future Directions

The authors intend to extend MT-π to handle more complex manipulation tasks and to integrate object-centric representations obtained from foundation models. This suggests a forward-looking approach that aims to enhance the model's applicability in real-world scenarios, particularly in environments where human interactions are unpredictable.

6. Challenges and Limitations

While the paper presents a robust framework, it also acknowledges challenges such as sensitivity to noise in human video inputs and the difficulty of detecting human grasps accurately. The authors propose that future work could focus on improving hand perception and grasp detection, which are critical for the success of the proposed methods in practical applications.

In summary, the paper presents a comprehensive framework that not only advances the field of imitation learning but also addresses practical challenges in robotic manipulation by leveraging human video data effectively. The introduction of MT-π and the unified action space are pivotal contributions that could significantly impact future research and applications in robotics.

Characteristics of Motion Track Policy (MT-π)

- Unified Action Space: MT-π introduces a unified action space that aligns actions from both human and robot embodiments. Actions are represented as 2D trajectories in the image plane, which allows for better bridging of the embodiment gap. This is a significant advancement over previous methods that often struggled with the morphological differences between human and robot hands, which could lead to ineffective retargeting of human motions to robots.

- Sample Efficiency: The algorithm is designed to be sample-efficient, meaning it can achieve high performance with a limited number of robot demonstrations. MT-π primarily relies on easily accessible human video data, requiring only a small set of robot demonstrations for effective training. This drastically reduces the data burden typically associated with imitation learning (IL).

- Generalization to Novel Scenarios: MT-π is capable of generalizing to novel scenarios that are present only in human video data. This is particularly beneficial as it allows the model to learn from a broader diversity of motions captured in human demonstrations, which can be more varied than robot data.

- 2D Trajectory Predictions: The method forecasts future movements of manipulators through 2D trajectory predictions on images. This approach simplifies the representation while enabling full recovery of 6DoF positional and rotational robot actions via 3D reconstruction from corresponding sets of 2D tracks captured from different views.

- Compatibility with Various Embodiments: MT-π is compatible with various embodiments, allowing it to be trained on a hybrid dataset that includes both human videos and teleoperated robot data. This flexibility is a notable improvement over previous methods that often required extensive robot data to perform well.
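The 6DoF recovery mentioned in the list above can be illustrated with a standard rigid alignment. The sketch below assumes that several non-collinear gripper keypoints have already been reconstructed in 3D at the current and the predicted future timestep, and fits the rotation and translation between them with the Kabsch algorithm. This is one plausible way to turn 3D keypoints into a pose change, not necessarily the paper's; the function name is hypothetical.

```python
import numpy as np

def fit_rigid_transform(src, dst):
    """Kabsch algorithm: find R (3x3) and t (3,) minimizing
    sum_i ||R @ src[i] + t - dst[i]||^2 for matched (N, 3) point sets."""
    src = np.asarray(src, dtype=float)
    dst = np.asarray(dst, dtype=float)
    src_c = src.mean(axis=0)
    dst_c = dst.mean(axis=0)
    # Cross-covariance of the centered point sets.
    H = (src - src_c).T @ (dst - dst_c)
    U, _, Vt = np.linalg.svd(H)
    # Flip the last axis if needed so that R is a proper rotation (det = +1).
    d = np.sign(np.linalg.det(Vt.T @ U.T))
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T
    t = dst_c - R @ src_c
    return R, t
```

Here `src` would hold the current 3D keypoints and `dst` the triangulated future keypoints; the returned `R` and `t` describe the relative end-effector motion in the frame the keypoints are expressed in.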

Advantages Compared to Previous Methods

-

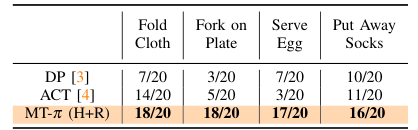

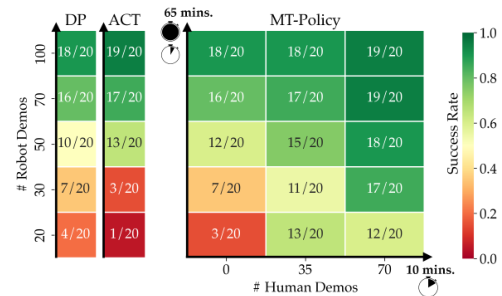

Improved Performance: Empirical results show that MT-π outperforms state-of-the-art IL methods that do not leverage human video data. For instance, in a comparison with Diffusion Policy (DP) and Action Chunking with Transformers (ACT), MT-π achieved significantly higher success rates across various real-world tasks, demonstrating its robustness and effectiveness in low-robot-data regimes .

-

Reduced Data Collection Burden: By relying primarily on human video data, MT-π alleviates the challenges associated with collecting large amounts of robot demonstration data. This is particularly valuable for tasks that are time-consuming to teleoperate, as human videos can be recorded quickly and easily .

-

Modularity and Future Integration: The framework is designed with modularity in mind, allowing for the incorporation of future advancements in hand perception and grasp detection. This adaptability positions MT-π as a forward-looking solution that can evolve with ongoing research in the field .

-

Handling of Diverse Motions: The ability to leverage human video demonstrations allows MT-π to capture a wider range of motions, which is crucial for tasks that require nuanced manipulation. Previous methods often struggled with generalization when faced with variations in starting states or task conditions .

-

Addressing Limitations of Previous Approaches: MT-π addresses the limitations of earlier methods that required significant on-policy robot interaction or goal images, which are not always available. By focusing on a hybrid approach that combines human video with minimal robot data, MT-π provides a more practical solution for real-world applications .

In summary, the Motion Track Policy (MT-π) presents a significant advancement in the field of imitation learning for robotic manipulation, characterized by its unified action space, sample efficiency, and ability to generalize from human video data. Its advantages over previous methods include improved performance, reduced data collection burdens, and adaptability for future enhancements.

Does related research exist? Who are the noteworthy researchers on this topic? What is the key to the solution mentioned in the paper?

Related Research and Noteworthy Researchers

The field of human-robot interaction and imitation learning has seen significant contributions from various researchers. Noteworthy researchers include:

- K. Goldberg: Known for work on language-embedded radiance fields for task-oriented grasping.

- D. Fox: Contributed to multi-task transformers for robotic manipulation and vision-language models.

- C. Finn: Involved in several studies on robot learning from demonstrations and visual representations for manipulation.

Key Solutions Mentioned in the Paper

The paper discusses several innovative approaches to enhance robotic manipulation through imitation learning. A key solution highlighted is the use of motion tracks as a unified representation for facilitating human-robot transfer in few-shot imitation learning. This approach aims to improve the efficiency and effectiveness of robots in learning from limited demonstrations, thereby enabling them to perform complex tasks with minimal prior knowledge.

Additionally, the integration of vision-language models and the development of open-source frameworks for robotic manipulation are emphasized as critical advancements in the field.

How were the experiments in the paper designed?

The experiments in the paper "Motion Tracks: A Unified Representation for Human-Robot Transfer in Few-Shot Imitation Learning" were designed to evaluate the performance of the proposed method, MT-π, against baseline methods, specifically Diffusion Policy (DP) and Action Chunking with Transformers (ACT), across various real-world manipulation tasks.

Experimental Setup

-

Tasks: The experiments focused on four manipulation tasks: Fork on Plate, Fold Cloth, Serve Egg, and Put Away Socks. These tasks were chosen to assess the effectiveness of the MT-π method in real-world scenarios .

-

Data Collection: The training involved a combination of human video demonstrations and teleoperated robot demonstrations. The paper highlights that MT-π was trained using a small amount of robot data (25 trajectories) supplemented with approximately 10 minutes of human video, allowing it to leverage the diversity of human motions .

-

Performance Metrics: The success rates of the different methods were compared, with MT-π showing significant improvements over the baselines, particularly in scenarios with limited robot data. The experiments demonstrated that even a small amount of human video could enhance the performance of the policy, especially in longer-horizon tasks where collecting robot data is more time-consuming .

-

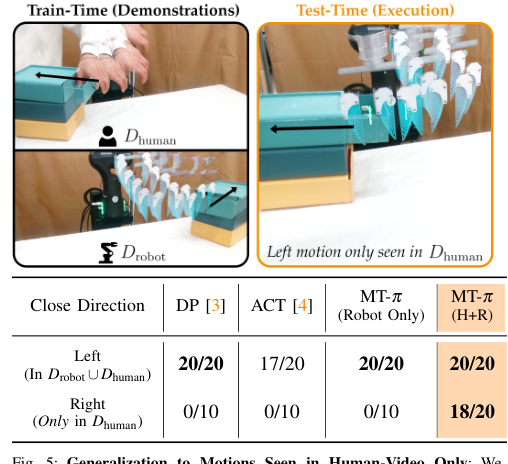

Generalization Tests: The experiments also included tests to evaluate the generalization capabilities of MT-π, particularly its ability to perform actions that were only present in human videos, such as closing a drawer in a direction not demonstrated by the robot .

Results and Findings

The results indicated that MT-π outperformed both DP and ACT in terms of success rates across all tasks, suggesting that the integration of human video demonstrations significantly contributes to more robust execution of tasks, especially when robot data is scarce.

Overall, the experimental design effectively showcased the advantages of the MT-π method in leveraging human demonstrations for improved robotic manipulation performance.

What is the dataset used for quantitative evaluation? Is the code open source?

The dataset used for quantitative evaluation is not explicitly named in the provided context. However, the paper notes that human video data is easier to collect at scale, with existing datasets providing thousands of hours of demonstrations. The research emphasizes the use of both human video demonstrations and teleoperated robot data to train the imitation learning policy effectively.

Regarding the code, the paper states that the Motion Track Policy (MT-π) is open-sourced for reproducible training and deployment in the real world. More information and the code are available on the project website.

Do the experiments and results in the paper provide good support for the scientific hypotheses that need to be verified? Please analyze.

The experiments and results presented in the paper "Motion Tracks: A Unified Representation for Human-Robot Transfer in Few-Shot Imitation Learning" provide substantial support for the scientific hypotheses being tested. Here are the key points of analysis:

1. Performance Comparison

The paper compares the proposed method, MT-π, against established baselines such as Diffusion Policy (DP) and Action Chunking with Transformers (ACT) across various real-world manipulation tasks. The results indicate that MT-π significantly outperforms these baselines, particularly in scenarios with limited robot data, suggesting that leveraging human video demonstrations enhances the robustness of the policy. This supports the hypothesis that human video can provide valuable information that improves robot learning.

2. Sample Efficiency

The findings demonstrate that MT-π achieves comparable success rates to DP and ACT while utilizing fewer robot demonstrations, specifically by incorporating a small amount of human video data. This suggests that the action representation in image space is effective and sample-efficient, validating the hypothesis that human video can enhance learning efficiency in robotic tasks.

3. Generalization Capability

The ability of MT-π to generalize to motions and objects that are only present in human video data is another critical aspect. The experiments show that MT-π can successfully perform tasks (e.g., closing a drawer in both directions) that were not demonstrated in the robot data, indicating that the method can transfer learned motions from human demonstrations to the robot effectively. This supports the hypothesis regarding the potential for positive transfer of human motion data to robotic applications.

4. Limitations and Future Work

While the results are promising, the paper also acknowledges limitations, such as the current inability to enforce consistency across different viewpoints when making predictions. This recognition of limitations is crucial for scientific rigor, as it highlights areas for future research and improvement.

Conclusion

Overall, the experiments and results in the paper provide strong empirical support for the hypotheses regarding the benefits of integrating human video into robotic learning frameworks. The demonstrated improvements in performance, sample efficiency, and generalization capabilities substantiate the proposed approach's effectiveness in enhancing human-robot interaction and learning.

What are the contributions of this paper?

The paper "Motion Tracks: A Unified Representation for Human-Robot Transfer in Few-Shot Imitation Learning" presents several key contributions:

- Unified Action Space: The authors introduce a unified action space that is compatible with both human and robot embodiments. This allows actions to be represented as 2D trajectories in the image plane, effectively bridging the embodiment gap between human and robot actions.

- Visuomotor Policy Learning: The work aims to learn a visuomotor policy primarily trained on human video data, supplemented by a small amount of robot demonstrations. This approach leverages the abundance of easily collected human demonstrations while minimizing the need for extensive robot interaction.

- Disentangling Motion and Visual Differences: By aligning actions in a shared space, the authors disentangle motion differences from visual differences. This facilitates better training on a hybrid dataset that includes both human videos and teleoperated robot data, enhancing the generalization capabilities of the learned policies.

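- Handling Morphological Differences: The paper addresses the challenges posed by the significant morphological differences between human and robot hands. Because these differences can make retargeting human hand poses to robot end-effectors ineffective, the paper instead represents the motion of both embodiments as 2D trajectories in a shared image-plane action space.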

These contributions collectively advance the field of imitation learning and robotic manipulation, particularly in scenarios where human demonstrations are more readily available than robot data.

What work can be continued in depth?

Future work can focus on several key areas to enhance the capabilities of imitation learning (IL) and robotic manipulation:

- Handling In-the-Wild Human Videos: There is a need to extend the Motion Track Policy (MT-π) to effectively manage real-world human videos that may feature drastic viewpoint shifts, egocentric motion, or rapid temporal changes. This would improve the robustness of the system in diverse environments.

- Improving Hand Perception: The current approach relies on heuristic methods for detecting human grasps, which can lead to imprecise actions. Future research could focus on integrating advanced hand perception technologies to enhance the accuracy of grasp detection and improve overall performance.

- Viewpoint Consistency: Implementing auxiliary projection/deprojection losses could help enforce consistency between tracks across different viewpoints, reducing triangulation errors and improving the precision of actions.

- Combining Motion-Centric and Object-Centric Representations: Future work could explore the integration of motion-centric approaches with object-centric representations obtained from foundation models, which may lead to better performance in complex manipulation tasks.

- Generalization to Novel Scenarios: Enhancing the generalization capabilities of the model to adapt to new tasks and environments that are not present in the training data is crucial for practical applications.
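The viewpoint-consistency idea in the list above can be made concrete with a reprojection error. The sketch below measures how far the 2D tracks predicted in two views lie from the projections of a single shared set of 3D points. It is an illustrative NumPy metric under assumed known 3x4 projection matrices, not the auxiliary loss the authors propose; a training loss would need a differentiable implementation, and all function names here are hypothetical.

```python
import numpy as np

def reprojection_error(P, points_3d, track_2d):
    """Mean pixel distance between projected 3D points and a 2D track.

    P: 3x4 projection matrix, points_3d: (N, 3), track_2d: (N, 2).
    """
    points_3d = np.asarray(points_3d, dtype=float)
    track_2d = np.asarray(track_2d, dtype=float)
    homog = np.hstack([points_3d, np.ones((len(points_3d), 1))])
    proj = homog @ P.T
    pixels = proj[:, :2] / proj[:, 2:3]  # perspective divide
    return float(np.mean(np.linalg.norm(pixels - track_2d, axis=1)))

def viewpoint_consistency(P1, P2, points_3d, track1, track2):
    """Average reprojection error over two views; zero means the two
    2D tracks are exact projections of the same 3D trajectory."""
    return 0.5 * (
        reprojection_error(P1, points_3d, track1)
        + reprojection_error(P2, points_3d, track2)
    )
```

A value near zero indicates that the per-view predictions agree with one 3D trajectory; larger values flag the kind of cross-view disagreement that leads to triangulation error.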

By addressing these areas, researchers can significantly advance the field of robotic manipulation and imitation learning, making systems more efficient and capable in real-world applications.