Large Language Models Think Too Fast To Explore Effectively

Lan Pan, Hanbo Xie, Robert C. Wilson · January 29, 2025

Summary

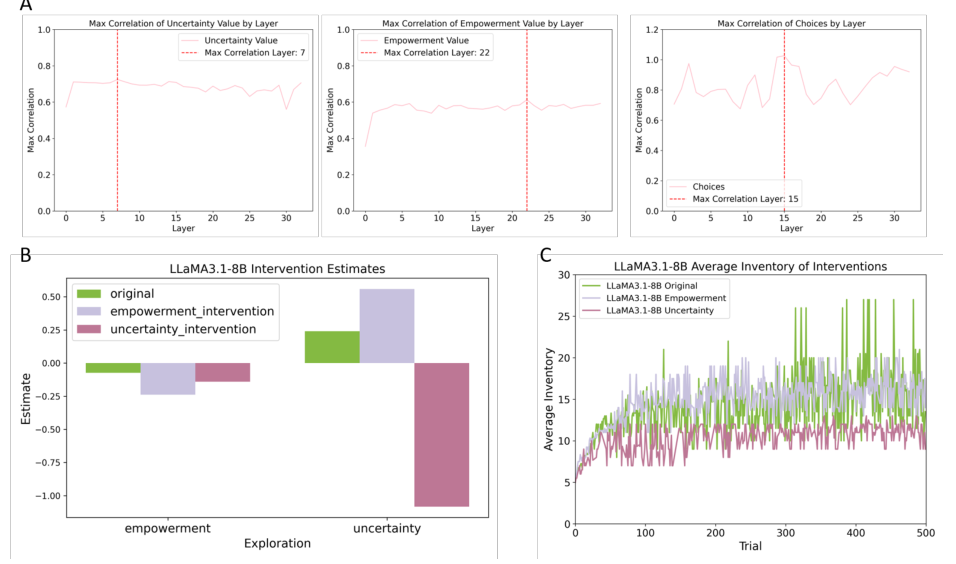

Large Language Models (LLMs) often excel at cognitive tasks but struggle with exploration, a capacity essential for discovering new information. A study using the game Little Alchemy 2 found that most LLMs underperformed humans, with the o1 model as the exception. Traditional LLMs relied mainly on uncertainty-driven strategies, whereas humans balanced uncertainty and empowerment. Further analysis suggested that LLMs "think too fast": because of how they process uncertainty and empowerment values, they commit to decisions prematurely. The findings highlight LLMs' limitations in exploration and point to areas where their adaptability could improve.
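To make the contrast between an uncertainty-driven strategy and a balanced one concrete, here is a minimal toy sketch. It is not the study's model: the softmax choice rule, the function name `choice_probabilities`, the weights, and all numeric values are assumptions chosen purely for illustration.

```python
import math

def choice_probabilities(options, w_uncertainty, w_empowerment, temperature=1.0):
    """Softmax over a weighted sum of two hypothetical exploration values.

    This is an illustrative choice rule, not the one fitted in the study.
    """
    scores = [
        (w_uncertainty * o["uncertainty"] + w_empowerment * o["empowerment"]) / temperature
        for o in options
    ]
    max_score = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - max_score) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

# Made-up values for three candidate element combinations (assumptions, not data).
options = [
    {"name": "water + fire", "uncertainty": 0.9, "empowerment": 0.1},
    {"name": "earth + water", "uncertainty": 0.4, "empowerment": 0.8},
    {"name": "air + air", "uncertainty": 0.2, "empowerment": 0.2},
]

# An agent that weighs only uncertainty versus one that weighs both values equally.
uncertainty_only = choice_probabilities(options, w_uncertainty=1.0, w_empowerment=0.0)
balanced = choice_probabilities(options, w_uncertainty=1.0, w_empowerment=1.0)

for option, p_u, p_b in zip(options, uncertainty_only, balanced):
    print(f"{option['name']}: uncertainty-only={p_u:.2f}, balanced={p_b:.2f}")
```

Under these made-up numbers, the uncertainty-only agent favors the most uncertain combination, while the balanced agent shifts toward the combination with the higher empowerment value. Different weights or values would change the ranking.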

Introduction

Background

Overview of Large Language Models (LLMs)

Importance of exploration in cognitive tasks

Objective

To compare the performance of LLMs in exploration tasks with human performance

To analyze the strategies employed by LLMs in comparison to humans

Method

Data Collection

Selection of LLMs for the study

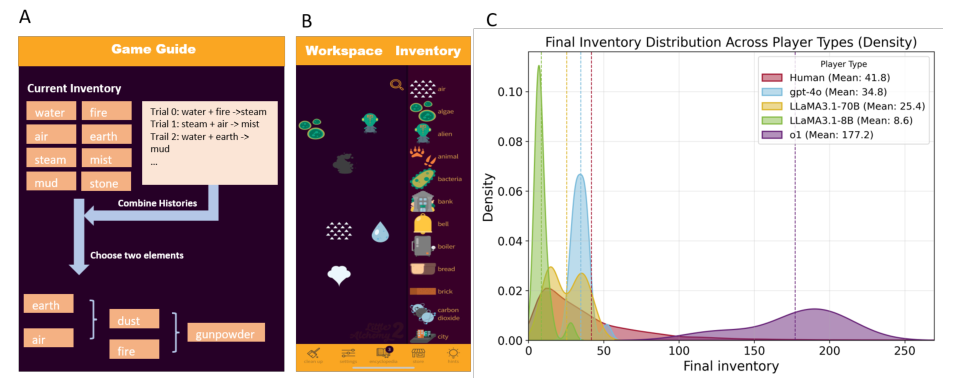

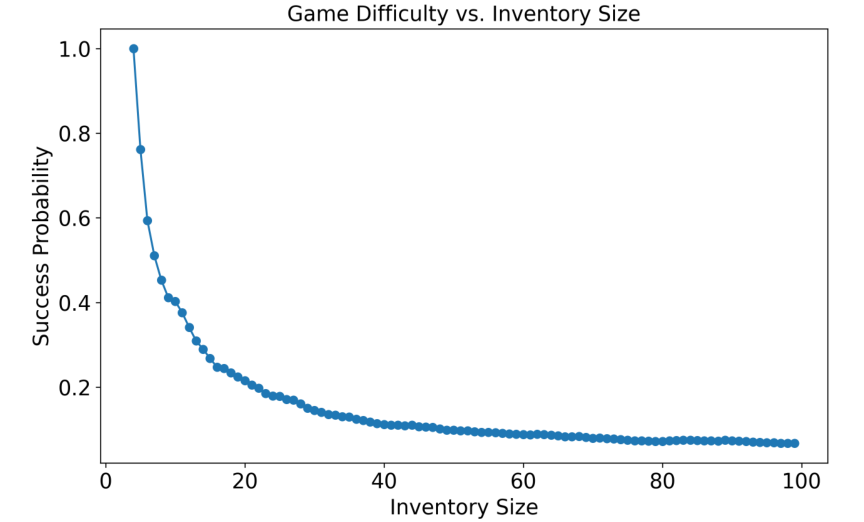

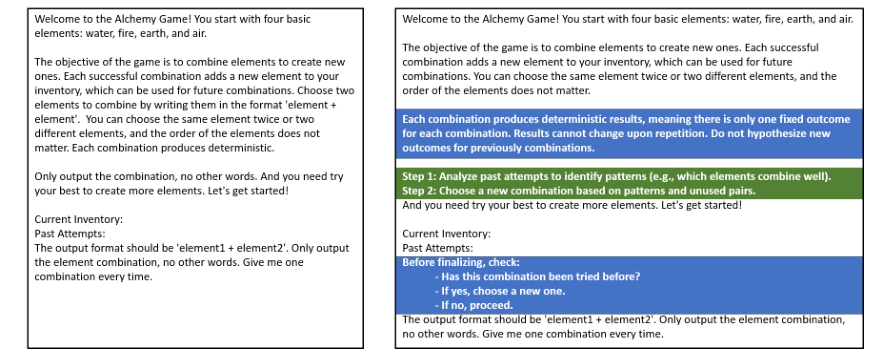

Description of the exploration task (Little Alchemy 2)

Data Preprocessing

Preparation of data for analysis

Standardization of LLM responses

Results

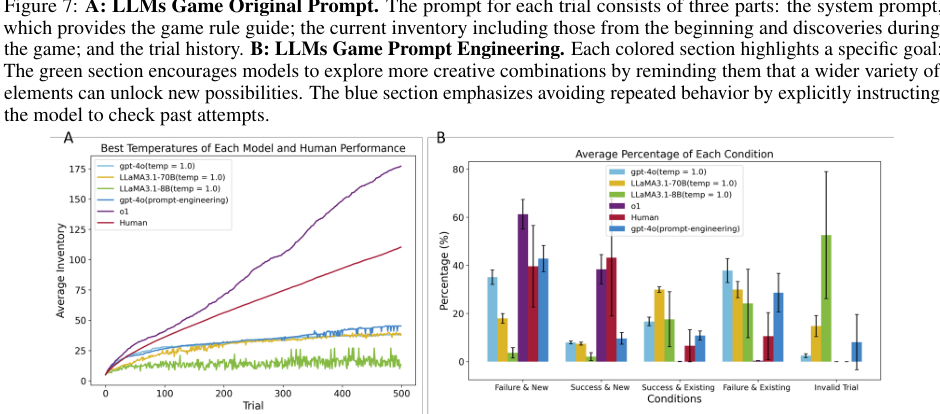

Performance Comparison

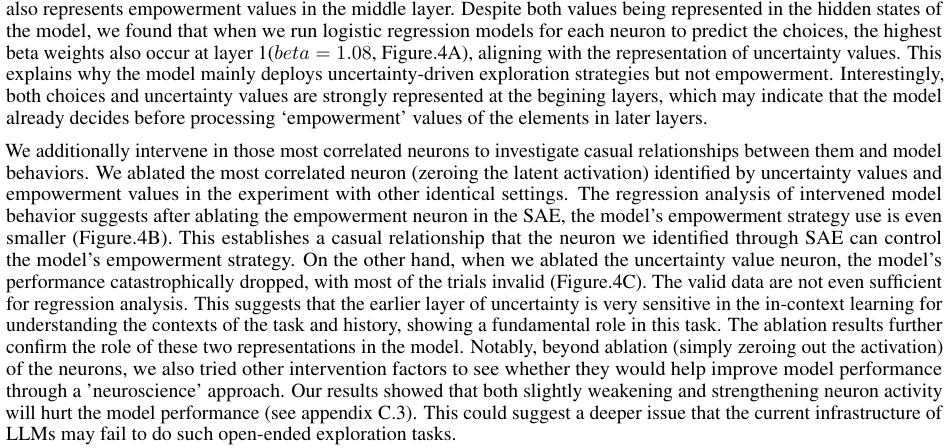

Quantitative analysis of LLM performance

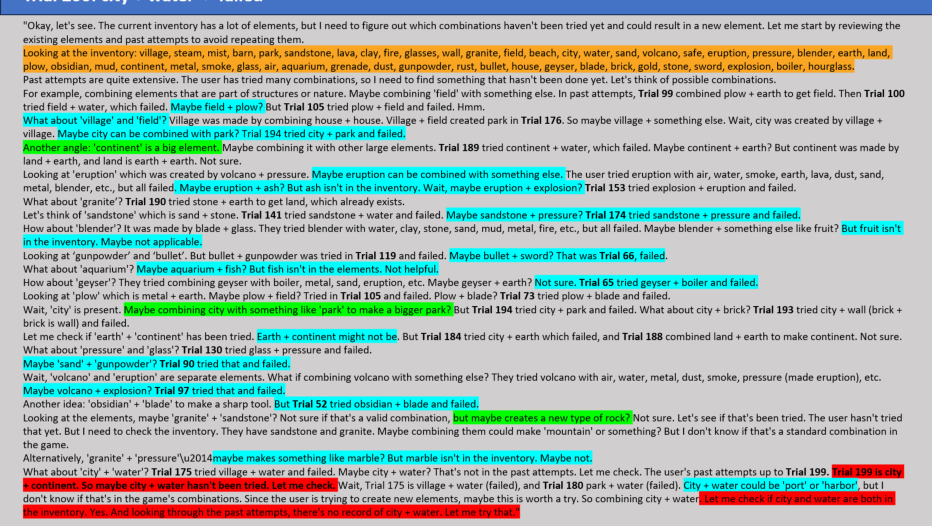

Qualitative insights into LLM decision-making processes

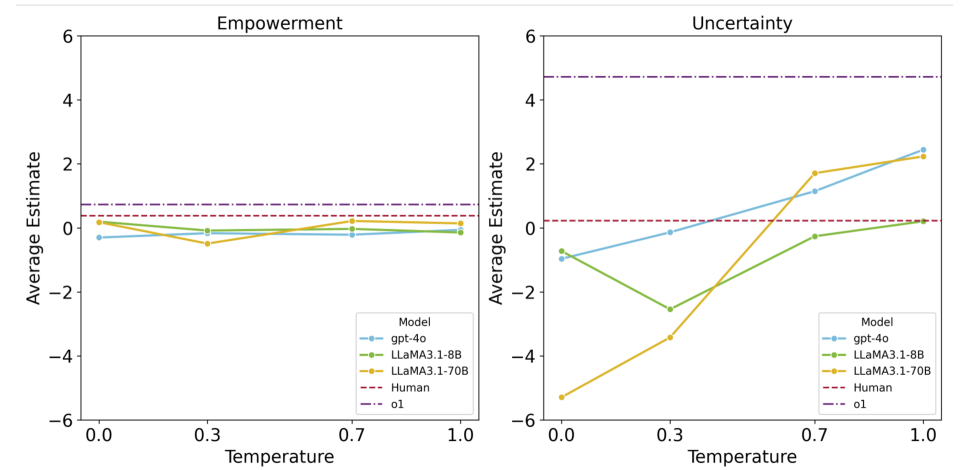

Human vs. LLM Strategies

Examination of human strategies in comparison to LLM approaches

Analysis

LLM Decision-Making Processes

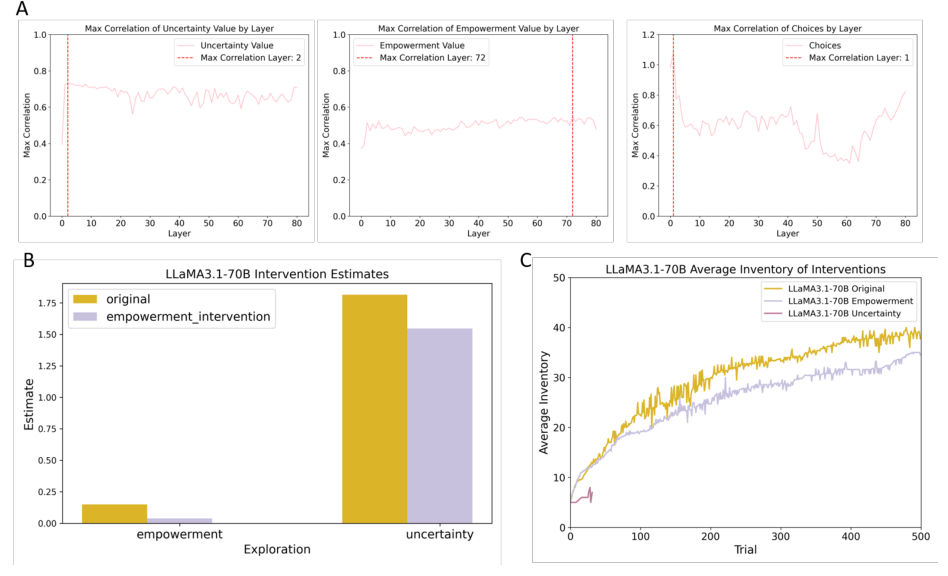

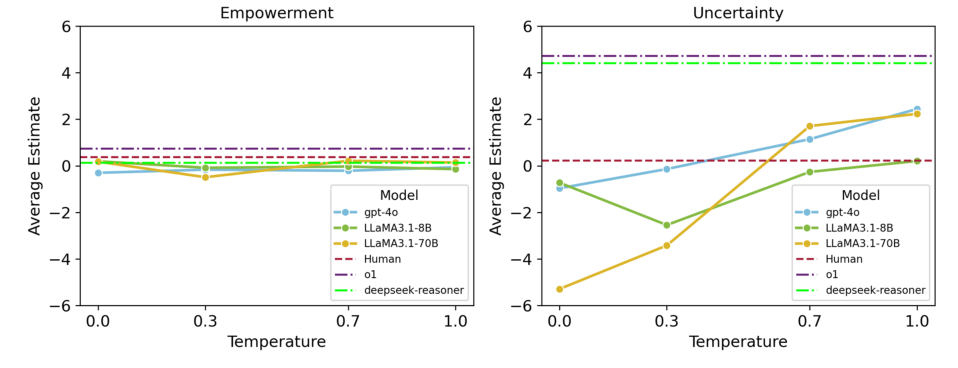

Examination of how LLMs process uncertainty and empowerment

Identification of the "thinking too fast" phenomenon

Limitations in Exploration

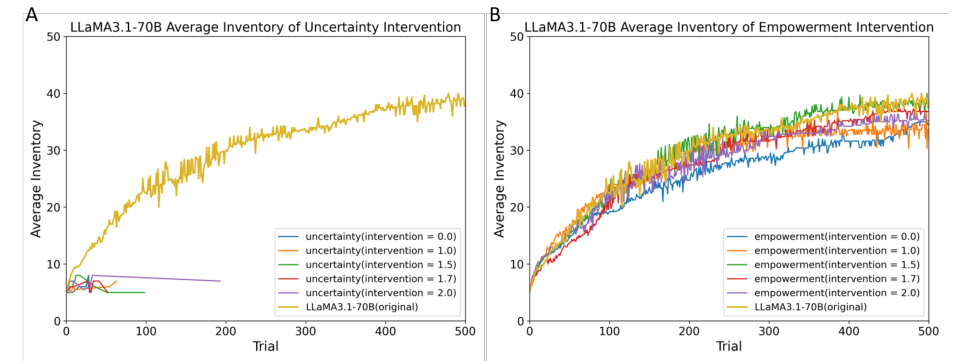

Discussion of LLMs' limitations in exploration tasks

Analysis of the underlying reasons for these limitations

Discussion

Implications for LLM Adaptability

Importance of adaptability in LLMs for real-world applications

Potential improvements in LLM design for better exploration capabilities

Future Directions

Suggestions for future research in enhancing LLM exploration

Considerations for integrating human-like decision-making processes

Conclusion

Summary of Findings

Recap of the study's main findings

Implications for the Field

Impact of the study on the development of LLMs

Potential for advancing AI in exploration and discovery tasks

Insights

What cognitive tasks do Large Language Models (LLMs) typically excel at?

Why did traditional LLMs rely on uncertainty-driven strategies instead of balancing uncertainty and empowerment?

Which study found that most LLMs underperformed compared to humans in discovering new information?

What limitation in exploration does the analysis of LLMs highlight, and how does it relate to their processing of uncertainty and empowerment values?