Effective and Efficient Mixed Precision Quantization of Speech Foundation Models

Haoning Xu, Zhaoqing Li, Zengrui Jin, Huimeng Wang, Youjun Chen, Guinan Li, Mengzhe Geng, Shujie Hu, Jiajun Deng, Xunying Liu·January 07, 2025

Summary

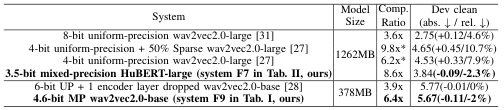

The paper presents a novel mixed-precision quantization method for speech foundation models, integrating learning and quantization in one stage. This approach enhances lossless compression ratios by up to 1.9x and 1.7x for specific models compared to uniform-precision and two-stage quantized baselines. It reduces system compression time by up to 1.9x and 1.5x while maintaining or improving word error rates. The best-performing 3.5-bit mixed-precision model achieves an 8.6x lossless compression ratio over full-precision systems.

Introduction

Background

Overview of speech foundation models

Importance of quantization in model deployment

Objective

Aim of the novel mixed-precision quantization method

Expected improvements in lossless compression ratios and system compression time

Method

Data Collection

Description of the dataset used for model training and evaluation

Data Preprocessing

Techniques applied to prepare the data for the mixed-precision quantization process

Quantization Strategy

Explanation of the integration of learning and quantization in one stage

Description of the mixed-precision approach

Model Training

Overview of the training process for the mixed-precision models

Evaluation Metrics

Criteria used to assess the performance of the models

Comparison with uniform-precision and two-stage quantized baselines

Results

Compression Ratios

Detailed comparison of lossless compression ratios for specific models

Quantification of improvements over uniform-precision and two-stage quantized baselines

Compression Time

Analysis of system compression time reductions

Comparison with uniform-precision and two-stage quantized baselines

Word Error Rates

Evaluation of the impact on word error rates

Discussion on maintaining or improving performance

Performance Analysis

Best-Performing Model

Characteristics of the 3.5-bit mixed-precision model

Detailed performance metrics and comparisons

General Observations

Summary of the method's effectiveness across different models

Insights into the trade-offs between compression and model performance

Conclusion

Summary of Contributions

Recap of the method's innovations and improvements

Future Work

Potential areas for further research and development

Implications

Discussion on the broader impact of the mixed-precision quantization method in speech foundation models

Basic info

papers

sound

audio and speech processing

artificial intelligence

Advanced features

Insights

What are the time-saving benefits of the method mentioned in the input?

How does the proposed mixed-precision quantization method improve lossless compression ratios for speech foundation models?

What is the main focus of the paper discussed in the input?

5-bit mixed-precision model in terms of lossless compression ratio compared to full-precision systems?