Deriving Coding-Specific Sub-Models from LLMs using Resource-Efficient Pruning

Laura Puccioni, Alireza Farshin, Mariano Scazzariello, Changjie Wang, Marco Chiesa, Dejan Kostic·January 09, 2025

Summary

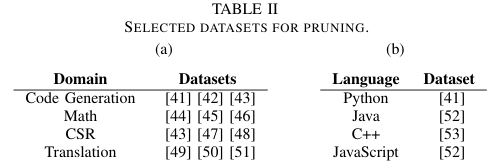

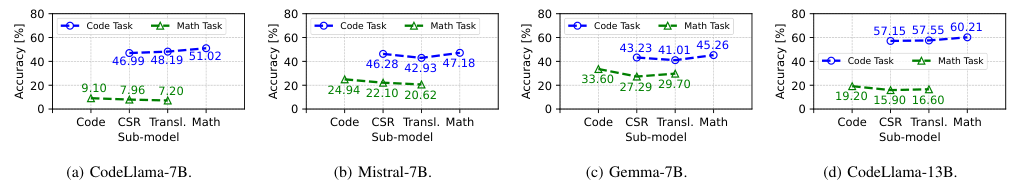

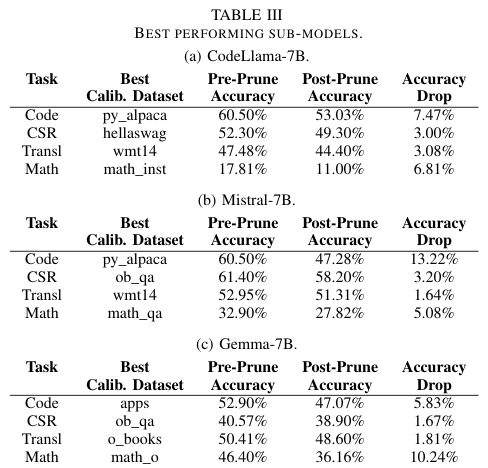

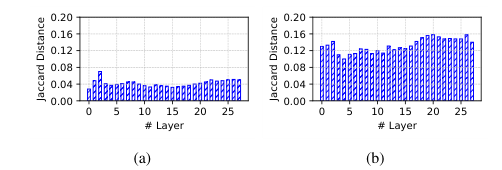

Efficient extraction of coding-specific sub-models from large language models (LLMs) through unstructured pruning is explored, focusing on Python, Java, C++, and JavaScript. This approach aims to reduce computational demands, enabling local execution on consumer-grade hardware and supporting faster inference times for real-time development feedback. Analytical evidence supports the creation of specialized sub-models through unstructured pruning, enhancing LLM accessibility for coding tasks. The study demonstrates that domain-specific sub-models outperform those derived from unrelated domains in specialized tasks, activating different regions within LLMs. The impact includes compact models reducing computational demands, ensuring data security, faster inference times, independent model maintenance, and flexibility in customization for project-specific needs. The research validates the effectiveness of resource-efficient pruning techniques, specifically Wanda, for extracting domain-specific sub-models in programming languages without retraining. The study introduces a methodology combining efficient pruning with task-specific calibration datasets to generate domain-specific LLMs for specialized tasks, aiming to maintain strong performance with minimized memory and computational demands. The approach is validated through sub-model extraction in domains such as math, common-sense reasoning, language translation, and code generation for languages like Python, Java, C++, and JavaScript.

Advanced features