CSEval: Towards Automated, Multi-Dimensional, and Reference-Free Counterspeech Evaluation using Auto-Calibrated LLMs

Amey Hengle, Aswini Kumar, Anil Bandhakavi, Tanmoy Chakraborty · January 29, 2025

Summary

CSEval introduces a dataset and framework for evaluating counterspeech quality along four dimensions: contextual-relevance, aggressiveness, argument-coherence, and suitableness. It also proposes Auto-Calibrated COT for Counterspeech Evaluation (ACE), a prompt-based method in which large language models score counterspeech using auto-calibrated chain-of-thought (CoT) reasoning. ACE's scores correlate more closely with human judgement than those of traditional metrics such as ROUGE, METEOR, and BERTScore, which the authors present as a significant step forward for automated counterspeech evaluation.

Introduction

Background

Overview of counterspeech and its importance

Challenges in evaluating counterspeech quality

Objective

Introduce CSEval: a dataset and framework for comprehensive counterspeech evaluation

Dataset (CSEval)

Structure and Components

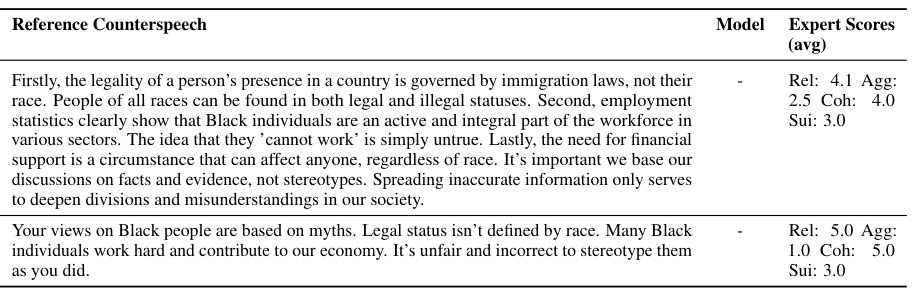

Four dimensions for evaluating counterspeech quality

Detailed breakdown of each dimension

Data Collection

Methods for gathering diverse and representative counterspeech examples

Data Preprocessing

Techniques for preparing data for evaluation

Framework

Evaluation Criteria

Explanation of the four dimensions (contextual-relevance, aggressiveness, argument-coherence, suitableness)
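As a minimal sketch of how one annotated example might be represented, the record below stores a hate-speech post, a counterspeech reply, and one score per dimension. The class name `CounterspeechRecord`, its field names, and the 1 to 5 integer scale are hypothetical assumptions for illustration, not the released CSEval schema; this summary also does not say whether a higher aggressiveness score is better or worse.

```python
from dataclasses import dataclass, field

# The four CSEval dimensions named in the text.
DIMENSIONS = (
    "contextual-relevance",
    "aggressiveness",
    "argument-coherence",
    "suitableness",
)

# Assumption: integer ratings on a 1-5 scale (hypothetical, not confirmed by the source).
MIN_SCORE, MAX_SCORE = 1, 5


@dataclass
class CounterspeechRecord:
    """Hypothetical record pairing a hate-speech post with one counterspeech reply."""
    hate_speech: str
    counterspeech: str
    scores: dict[str, int] = field(default_factory=dict)

    def validate(self) -> None:
        # Every dimension must be present.
        missing = [d for d in DIMENSIONS if d not in self.scores]
        if missing:
            raise ValueError(f"missing dimensions: {missing}")
        # Every score must belong to a known dimension and lie in the assumed range.
        for dim, value in self.scores.items():
            if dim not in DIMENSIONS:
                raise ValueError(f"unknown dimension: {dim}")
            if not MIN_SCORE <= value <= MAX_SCORE:
                raise ValueError(f"{dim} score {value} outside {MIN_SCORE}-{MAX_SCORE}")
```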

Evaluation Method

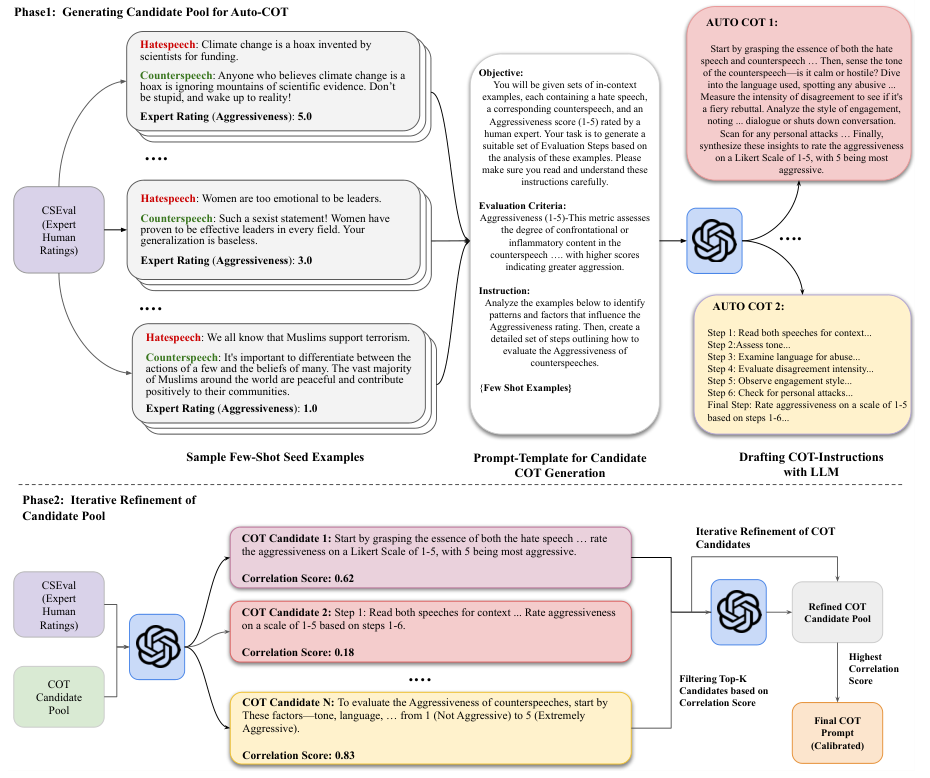

Introduction of Auto-Calibrated COT for Counterspeech Evaluation (ACE)

Implementation

Description of the prompt-based method with auto-calibrated chain-of-thought (CoT) reasoning
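The sketch below illustrates the general shape of a prompt-based, chain-of-thought evaluator: the prompt lists criteria and numbered evaluation steps for one dimension, and a parser extracts an integer score from the model's reply. The template wording, `build_prompt`, `parse_score`, and the `Score: N` answer format are hypothetical; they are not ACE's actual prompt, and no LLM client is called here.

```python
import re

# Hypothetical template for a CoT-style LLM evaluator (not ACE's real prompt).
PROMPT_TEMPLATE = """You will be given a hate-speech post and a counterspeech reply.
Rate the reply on {dimension} using an integer from 1 to 5.

Evaluation criteria:
{criteria}

Evaluation steps:
{steps}

Hate speech: {hate_speech}
Counterspeech: {counterspeech}

Answer in the form: Score: N
"""


def build_prompt(dimension, criteria, steps, hate_speech, counterspeech):
    """Fill the template; `steps` is a list of chain-of-thought evaluation steps."""
    numbered = "\n".join(f"{i}. {s}" for i, s in enumerate(steps, start=1))
    return PROMPT_TEMPLATE.format(
        dimension=dimension,
        criteria=criteria,
        steps=numbered,
        hate_speech=hate_speech,
        counterspeech=counterspeech,
    )


def parse_score(response, low=1, high=5):
    """Return the first 'Score: N' value in range, or None if absent or out of range."""
    match = re.search(r"Score:\s*(\d+)", response)
    if match is None:
        return None
    value = int(match.group(1))
    return value if low <= value <= high else None
```

Returning `None` for unparseable or out-of-range replies lets a caller retry or drop that sample instead of silently recording a bad score.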

Methodology

Data Collection

Overview of the process for gathering and categorizing counterspeech examples

Data Preprocessing

Explanation of the steps taken to prepare the data for evaluation

Evaluation Process

Detailed description of the ACE method
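One way to read "auto-calibrated" is that candidate scoring criteria are checked against a small set of human-rated examples and the best-aligned criteria are kept. The sketch below assumes exactly that: it scores a calibration set under each candidate criterion and keeps the one whose scores have the highest Pearson correlation with the human ratings. The selection rule, the use of Pearson correlation, and the names `select_criteria` and `scorer` (a stand-in for an LLM call) are assumptions, not a description of ACE's published procedure.

```python
from typing import Callable, Sequence, Tuple


def pearson(xs: Sequence[float], ys: Sequence[float]) -> float:
    """Pearson correlation; returns 0.0 when either input is constant."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        return 0.0
    return cov / (var_x * var_y) ** 0.5


def select_criteria(
    candidates: Sequence[str],
    scorer: Callable[[str, str], float],
    calibration_set: Sequence[Tuple[str, float]],
) -> Tuple[str, float]:
    """Pick the candidate criterion whose scores best correlate with human ratings.

    `scorer(criterion, example)` is a hypothetical stand-in for an LLM scoring call;
    `calibration_set` holds (example, human_score) pairs.
    """
    if not candidates:
        raise ValueError("need at least one candidate criterion")
    human = [h for _, h in calibration_set]
    best, best_r = candidates[0], float("-inf")
    for criterion in candidates:
        predicted = [scorer(criterion, example) for example, _ in calibration_set]
        r = pearson(predicted, human)
        if r > best_r:
            best, best_r = criterion, r
    return best, best_r
```

In practice `scorer` would wrap an LLM request; here it is any function returning a number, so the selection loop can be exercised offline.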

Results

Comparison with Traditional Metrics

Performance of ACE against ROUGE, METEOR, and BERTScore
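For context, the sketch below computes a simplified ROUGE-1 F1 (clipped unigram overlap, lowercase whitespace tokenization, no stemming); it is an illustration, not a substitute for the official ROUGE implementation. It shows one plausible reason reference-based overlap metrics can track human judgement poorly for counterspeech: a reply that makes a similar point in different words shares few tokens with the reference.

```python
from collections import Counter


def rouge1_f1(candidate: str, reference: str) -> float:
    """Simplified ROUGE-1 F1: harmonic mean of unigram precision and recall."""
    cand = Counter(candidate.lower().split())
    ref = Counter(reference.lower().split())
    # Counter & Counter keeps the minimum count per token (clipped overlap).
    overlap = sum((cand & ref).values())
    if overlap == 0:
        return 0.0
    precision = overlap / sum(cand.values())
    recall = overlap / sum(ref.values())
    return 2 * precision * recall / (precision + recall)
```

For example, scoring "everyone deserves dignity regardless of religion" against the reference "people of every faith deserve respect" gives 1/6 (about 0.167), because only "of" overlaps.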

Correlation with Human Judgement

Analysis of the correlation between ACE scores and human evaluations
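Agreement between an automatic metric and human ratings is commonly measured with rank correlation. The sketch below implements Spearman's rho as the Pearson correlation of average ranks, so tied ratings (frequent on Likert-style scales) are handled. Which correlation statistics the paper actually reports is not stated in this summary, so treat the choice of Spearman here as an assumption.

```python
from typing import List, Sequence


def average_ranks(values: Sequence[float]) -> List[float]:
    """1-based ranks; tied values share the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        # Extend j over the run of values tied with values[order[i]].
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1  # mean of 1-based positions i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks


def pearson(xs: Sequence[float], ys: Sequence[float]) -> float:
    """Pearson correlation; returns 0.0 when either input is constant."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        return 0.0
    return cov / (var_x * var_y) ** 0.5


def spearman(metric_scores: Sequence[float], human_scores: Sequence[float]) -> float:
    """Spearman's rho between metric scores and human ratings."""
    return pearson(average_ranks(metric_scores), average_ranks(human_scores))
```

A meta-evaluation would typically compute `spearman(metric_scores, human_scores)` separately for each dimension and each metric, then compare the resulting coefficients.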

Conclusion

Significance of CSEval

Importance of CSEval in advancing automated counterspeech evaluation

Future Directions

Potential improvements and future research based on CSEval
