AI Guide Dog: Egocentric Path Prediction on Smartphone

Summary

Paper digest

What problem does the paper attempt to solve? Is this a new problem?

The paper addresses the challenges faced by visually impaired individuals in navigation, specifically focusing on the development of a lightweight egocentric navigation assistance system called AI Guide Dog (AIGD). This system aims to provide real-time navigation support using only a smartphone camera, predicting directional commands to facilitate safe traversal across various environments.

This problem is not entirely new, as navigation assistance systems for the visually impaired have been researched for several decades. However, AIGD introduces a novel approach by integrating both goal-oriented and exploratory navigation capabilities, which distinguishes it from previous systems that typically focused on either indoor or outdoor navigation without accommodating the complexities of real-world scenarios. Thus, while the overarching issue of navigation for the visually impaired has been explored, the specific solution proposed by AIGD represents a significant advancement in the field.

What scientific hypothesis does this paper seek to validate?

The paper "AI Guide Dog: Egocentric Path Prediction on Smartphone" seeks to validate the hypothesis that a lightweight, vision-only navigation assistance system can effectively predict directional commands for visually impaired individuals, facilitating safe traversal across diverse environments. This system employs a multi-label classification approach to address key challenges in blind navigation, including goal-based outdoor navigation and uncertain multi-path predictions for destination-free indoor navigation. The research aims to establish a new state-of-the-art in assistive navigation technologies by demonstrating the practicality and effectiveness of using a smartphone camera for real-time navigation assistance.

What new ideas, methods, or models does the paper propose? What are the characteristics and advantages compared to previous methods?

The paper "AI Guide Dog: Egocentric Path Prediction on Smartphone" introduces several innovative ideas, methods, and models aimed at enhancing navigation assistance for visually impaired individuals. Below is a detailed analysis of these contributions:

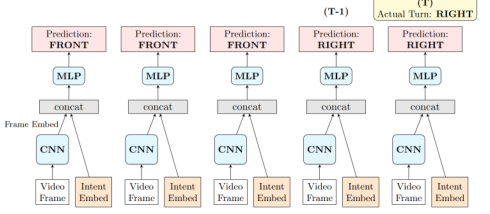

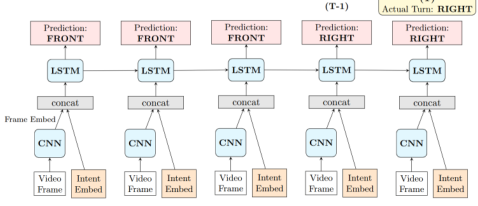

1. Lightweight Egocentric Navigation System

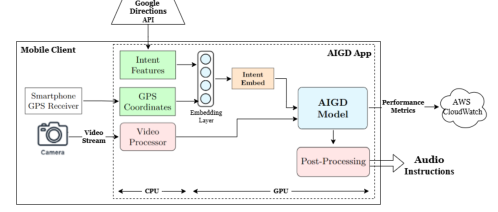

The AI Guide Dog (AIGD) is designed as a lightweight navigation assistance system that operates in real-time on smartphones. This approach addresses the challenges of blind navigation by utilizing a vision-only, multi-label classification method to predict directional commands, ensuring safe traversal across various environments.

2. Multi-Modal Fusion for Navigation

The system integrates video input from the smartphone camera with optional GPS data to facilitate destination-based navigation. This combination allows for effective goal-based outdoor navigation while also addressing the complexities of indoor navigation without a specific destination.

3. Novel Intent-Conditioning Technique

AIGD employs a novel intent-conditioning technique that enhances the system's ability to handle goal-based navigation in outdoor settings and manage directional uncertainty in exploratory scenarios. This method balances simplicity and performance, enabling the model to generalize across diverse environments while remaining practical for real-time deployment.
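The digest does not describe how intent is fed to the model, so the following is only a minimal sketch of one common way to condition a classifier on intent: a one-hot intent vector is appended to the visual feature vector before the classification head. The names `INTENTS`, `encode_intent`, and `condition_on_intent`, the `NONE` intent for destination-free exploration, and the concatenation itself are illustrative assumptions, not the paper's confirmed design.

```python
# Hypothetical intent vocabulary; "NONE" stands for exploratory,
# destination-free navigation where no route instruction is available.
INTENTS = ("NONE", "LEFT", "FRONT", "RIGHT")


def encode_intent(intent: str) -> list[float]:
    """One-hot encode a high-level direction (assumed to come from GPS routing)."""
    if intent not in INTENTS:
        raise ValueError(f"unknown intent: {intent!r}")
    return [1.0 if intent == name else 0.0 for name in INTENTS]


def condition_on_intent(visual_features: list[float], intent: str = "NONE") -> list[float]:
    """Append the intent encoding to the visual features (assumed fusion by concatenation)."""
    return list(visual_features) + encode_intent(intent)


# A toy two-dimensional "visual feature" vector conditioned on an upcoming left turn.
fused = condition_on_intent([0.2, 0.7], "LEFT")
print(fused)
```

With this layout the same classification head can serve both modes: outdoor goal-based runs pass the routing instruction, while indoor exploratory runs pass `NONE`.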

4. Multi-Label Classification Framework

The paper proposes a multi-label classification framework that simplifies the navigation task into an egocentric path prediction problem. This framework predicts all possible future navigation actions, such as continuing straight, turning left, or turning right, which avoids the uncertainties associated with precise trajectory predictions.
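To make the multi-label idea concrete, here is a minimal sketch in which each direction gets its own independent sigmoid score, so several directions can be valid at once (for example at a junction). The logits, the 0.5 threshold, and the function names are illustrative assumptions rather than values taken from the paper.

```python
import math

CLASSES = ("LEFT", "FRONT", "RIGHT")


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def predict_directions(logits, threshold=0.5):
    """Return every direction whose independent probability clears the threshold.

    Unlike a softmax over classes, the per-class sigmoids do not compete,
    so zero, one, or several directions may be reported as feasible.
    """
    probs = {name: sigmoid(z) for name, z in zip(CLASSES, logits)}
    active = [name for name in CLASSES if probs[name] >= threshold]
    return active, probs


# Hypothetical logits for a frame where both turning left and going straight are open.
active, probs = predict_directions([1.2, 0.8, -2.0])
print(active)  # ['LEFT', 'FRONT']
```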

5. Focus on User-Centric Design

The design of AIGD is motivated by the unique requirements of visually impaired users, allowing the system to scope the problem to a limited set of instruction classes. This user-centric approach incorporates goal-based navigation and directional uncertainty without relying on complex dynamic planning or reinforcement learning, making it more accessible and practical for users.

6. Evaluation Metrics and Performance Analysis

The paper details the evaluation metrics used to assess the model's performance, including accuracy, AUC, precision, recall, and F1 score. The results indicate that the system effectively predicts navigation directions, with a particular emphasis on turn classes, which are crucial for user navigation.

7. Addressing Limitations of Existing Systems

AIGD aims to overcome the limitations of existing navigation systems that often rely on expensive, custom-built devices. By leveraging only a smartphone camera, the system provides a more accessible solution for visually impaired individuals, facilitating easier user adoption while maintaining robust performance.

Conclusion

In summary, the paper presents a comprehensive approach to assistive navigation for visually impaired individuals through the development of the AI Guide Dog system. By integrating innovative methods such as multi-modal fusion, a multi-label classification framework, and a focus on user-centric design, AIGD sets a new standard in blind navigation technology, encouraging further advancements in this field.

Characteristics of AI Guide Dog (AIGD)

- Lightweight and Accessible Design: AIGD operates as a smartphone application, utilizing only the device's camera for video input, which makes it more accessible than previous systems that often required bulky and expensive hardware. This design choice facilitates easy user adoption and ensures that visually impaired individuals can use a device they already own.

- Real-Time Egocentric Path Prediction: The system employs a real-time prediction model that processes video input to predict navigation instructions. This contrasts with earlier methods that focused on precise 3D trajectory forecasting, which may not align with the navigation needs of blind users. AIGD simplifies the navigation task into predicting possible user actions (e.g., turning left or right) rather than exact locations, making it more practical for real-world use.

- Multi-Label Classification Framework: AIGD utilizes a multi-label classification approach to accommodate various navigation directions, allowing the system to provide clear and actionable commands. This is a significant improvement over previous methods that often focused on single-label predictions, which could lead to ambiguity in navigation instructions.

- Intent-Conditioning Technique: The introduction of a novel intent-conditioning technique allows AIGD to handle both goal-based navigation and exploratory scenarios effectively. This method enhances the system's ability to manage directional uncertainty without relying on complex dynamic planning or reinforcement learning, which are often unsuitable for modeling human behavior, especially for blind users.

- Integration of GPS and Scene Semantics: AIGD incorporates GPS data and scene semantics to enhance navigation performance, particularly in outdoor environments. This integration provides explicit directional cues that help disambiguate turns, which is a notable advancement over previous systems that lacked such capabilities.

Advantages Compared to Previous Methods

- User-Centric Approach: AIGD is specifically designed to meet the unique needs of visually impaired users, focusing on practical navigation solutions rather than complex algorithms that may not be user-friendly. This user-centric design is a significant advantage over prior systems that often did not consider the specific challenges faced by blind individuals.

- Improved Performance in Diverse Environments: The system has been evaluated on both indoor and outdoor datasets, demonstrating robust performance across different scenarios. The ability to generalize navigation across diverse environments is a key advantage over previous methods that may have been limited to specific contexts.

- Enhanced Real-Time Processing: AIGD's lightweight model allows for real-time processing at a frame rate of 2 FPS, which is sufficient for reliable navigation. This efficiency contrasts with earlier systems that may have struggled with latency issues, making AIGD more suitable for real-world applications where timely responses are critical.

- Focus on Directional Classes: The system emphasizes the prediction of turn classes (LEFT, RIGHT), which are crucial for navigation. This focus allows for better performance in scenarios where precise trajectory predictions are less relevant, addressing a common limitation in previous navigation systems that often aimed for overly complex predictions.

- Flexibility for Future Enhancements: AIGD's architecture allows for easy extension to support more nuanced navigation instructions, such as turning angles and start/stop commands. This flexibility positions AIGD as a forward-looking solution that can adapt to future advancements in technology and user needs.

Conclusion

In summary, the AI Guide Dog system presents a significant advancement in assistive navigation technologies for visually impaired individuals. Its lightweight design, real-time processing capabilities, user-centric approach, and innovative techniques such as intent-conditioning and multi-label classification collectively enhance its effectiveness compared to previous methods. These characteristics not only improve navigation performance but also ensure that the system is practical and accessible for users in diverse environments.

Does any related research exist? Who are the noteworthy researchers on this topic in this field? What is the key to the solution mentioned in the paper?

Related Research

Yes, there is related research on navigation assistance systems for visually impaired individuals. Notable studies include:

- NavCog3: An evaluation of a smartphone-based blind indoor navigation assistant with semantic features.

- Wearable Obstacle Avoidance Systems: A survey of electronic travel aids for the blind.

- Indoor Future Person Localization: Research focusing on localization from an egocentric wearable camera.

Noteworthy Researchers

Several researchers have made significant contributions to this field, including:

- Qiu, J.: Known for work on egocentric human trajectory forecasting and indoor localization.

- Dakopoulos, D.: Recognized for surveying wearable obstacle avoidance systems.

- Hesch, J. A.: Contributed to the design and analysis of portable indoor localization aids.

Key to the Solution

The key to the solution mentioned in the paper is the development of the AI Guide Dog (AIGD) system, which utilizes a lightweight, vision-only, multi-label classification approach to predict directional commands. This system is designed for real-time deployment on smartphones, addressing challenges in blind navigation by integrating GPS signals for goal-based outdoor navigation and handling uncertain multi-path predictions for indoor navigation. This innovative approach aims to enhance user safety and convenience while ensuring robust performance across diverse environments.

How were the experiments in the paper designed?

The experiments in the paper were designed with a focus on addressing label imbalance and evaluating model performance in predicting turns for navigation. Here are the key aspects of the experimental design:

Label Imbalance

To tackle the significant skew towards the FRONT label in the dataset, the authors implemented several strategies during training:

- Minority Oversampling: They doubled the examples for the LEFT and RIGHT classes to balance the dataset.

- Data Augmentation: Various transformations were applied with a 20% probability to enhance the dataset's diversity.

- Loss Function Adjustments: They employed Focal Loss to emphasize harder-to-classify samples, particularly for the rarer turn prediction cases. Additionally, they experimented with Weighted BCE Loss using class weights of 2:2:1 (LEFT:RIGHT:FRONT) to improve performance.
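The balancing strategies above can be sketched in plain Python. This is a hedged illustration, not the authors' training code: the 2:2:1 class weights and the doubling of turn examples come from the text, while the focusing parameter `gamma=2.0` (the common default from the focal loss literature), the data layout, and all function names are assumptions.

```python
import math

# Class weights LEFT:RIGHT:FRONT = 2:2:1, as stated in the text.
WEIGHTS = {"LEFT": 2.0, "RIGHT": 2.0, "FRONT": 1.0}
TURNS = {"LEFT", "RIGHT"}


def oversample_turns(examples):
    """Duplicate every example that carries a turn label (doubles the minority classes).

    `examples` is a list of (clip_id, set_of_labels) pairs; this layout is hypothetical.
    """
    out = []
    for clip_id, labels in examples:
        out.append((clip_id, labels))
        if labels & TURNS:
            out.append((clip_id, labels))
    return out


def _clamp(p, eps=1e-7):
    return min(max(p, eps), 1.0 - eps)


def weighted_bce(probs, targets, weights=WEIGHTS):
    """Mean binary cross-entropy over classes, scaled per class by `weights`."""
    total = 0.0
    for name, w in weights.items():
        p, y = _clamp(probs[name]), targets[name]
        total += -w * (y * math.log(p) + (1 - y) * math.log(1.0 - p))
    return total / len(weights)


def focal_loss(probs, targets, gamma=2.0):
    """Mean binary focal loss: (1 - p_t)^gamma down-weights easy, confident cases."""
    total = 0.0
    for name, y in targets.items():
        p = _clamp(probs[name])
        p_t = p if y == 1 else 1.0 - p  # probability assigned to the true outcome
        total += -((1.0 - p_t) ** gamma) * math.log(p_t)
    return total / len(targets)
```

With `gamma=0` the focal term disappears and `focal_loss` reduces to unweighted binary cross-entropy, which is a convenient sanity check when experimenting with either loss.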

Experimental Setup

The models were fine-tuned using the label balancing settings described above. The best available public checkpoints were used to initialize the CNN and ConvLSTM components for both no-intent and intent-based models, as well as PredRNN. Training was conducted for 30 epochs with a batch size of 64, utilizing the Adam optimizer and a learning rate scheduler.

Evaluation Metrics

The models were evaluated using various metrics, including accuracy, AUC, Precision, Recall, and F1 score, to assess their performance on both indoor and outdoor datasets.
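As a reminder of how the threshold-based metrics are defined, the sketch below computes precision, recall, and F1 for a single class from binary ground-truth and predicted labels. The toy labels are made up for illustration, and AUC is omitted because it requires ranking scores rather than hard predictions. With a dataset skewed towards FRONT, per-class figures like these are more informative for the turn classes than overall accuracy.

```python
def precision_recall_f1(y_true, y_pred):
    """Per-class precision, recall, and F1 from binary labels (1 = class present)."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


# Hypothetical LEFT labels for six frames: 2 true positives, 1 false positive, 1 false negative.
left_true = [1, 0, 1, 1, 0, 0]
left_pred = [1, 0, 0, 1, 1, 0]
print(precision_recall_f1(left_true, left_pred))
```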

Data Collection

A custom dataset was collected using smartphone cameras to reflect real-world conditions, capturing the walking speeds and styles of visually impaired individuals. The dataset included diverse indoor and outdoor scenes, with a total of 57 hours of walking data captured at 30 fps, ensuring a comprehensive evaluation of the models.

Performance Analysis

The results were analyzed to compare the performance of different models, particularly focusing on the effectiveness of intent features and GPS signals in enhancing model predictions for outdoor scenarios, while also noting the performance on indoor datasets.

This structured approach allowed the researchers to systematically evaluate the impact of various modifications and model architectures on the task of egocentric path prediction.

What is the dataset used for quantitative evaluation? Is the code open source?

The dataset used for quantitative evaluation in the study consists of a custom collection that includes both indoor and outdoor scenes, totaling 57 hours of walking data captured at 30 frames per second. This dataset encompasses video streams from smartphone cameras along with sensor data (accelerometer, gyroscope, magnetometer, pedometer) recorded at 0.1-second intervals. It was specifically designed to reflect real-world conditions for visually impaired individuals, capturing various walking speeds and styles across diverse environments such as grocery stores, libraries, and city streets.
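The scale implied by these figures can be worked out with a few lines of arithmetic. The 57 hours, 30 fps capture rate, 0.1-second sensor interval, and the 2 FPS processing rate are taken from this digest; treating the 2 FPS rate as a fixed frame stride over the recorded video is an assumption made here for illustration, as is the helper name `dataset_stats`.

```python
def dataset_stats(hours=57, capture_fps=30, sensor_interval_s=0.1, inference_fps=2):
    """Back-of-the-envelope sizes for the recorded data and the on-device frame budget."""
    total_seconds = hours * 3600
    total_frames = total_seconds * capture_fps
    sensor_hz = round(1 / sensor_interval_s)
    stride = capture_fps // inference_fps  # assumed: keep every `stride`-th frame
    return {
        "total_seconds": total_seconds,
        "total_frames": total_frames,
        "sensor_readings_per_stream": total_seconds * sensor_hz,
        "frame_stride": stride,
        "frames_at_inference_rate": total_frames // stride,
        "latency_budget_ms": 1000 / inference_fps,
    }


for key, value in dataset_stats().items():
    print(f"{key}: {value}")
```

Under these assumptions the recordings hold about 6.16 million video frames, roughly 410 thousand of which would be seen at 2 FPS, and each prediction has a budget of 500 ms.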

Regarding the code, the document does not explicitly state whether the code is open source. Therefore, additional information would be needed to confirm the availability of the code for public use.

Do the experiments and results in the paper provide good support for the scientific hypotheses that need to be verified? Please analyze.

The experiments and results presented in the paper provide substantial support for the scientific hypotheses that need to be verified, particularly regarding the effectiveness of intent-based models in outdoor scenarios and the performance of no-intent models in indoor environments.

Support for Hypotheses:

- Intent-Based Models in Outdoor Scenarios: The paper demonstrates that incorporating intent features and GPS signals significantly enhances the performance of CNN and ConvLSTM models in outdoor settings. This is evidenced by the performance metrics reported in Table 4, which show pronounced gains for outdoor test videos when intent and GPS signals are utilized. The results indicate that these features provide explicit directional cues that help disambiguate turns, supporting the hypothesis that intent-based models are better suited for outdoor navigation.

- No-Intent Models in Indoor Scenarios: The findings also support the hypothesis that no-intent models perform better in indoor environments. The paper notes that these models, trained exclusively on indoor data, outperform those trained on mixed datasets when evaluated on the indoor test set. This is attributed to the cleaner training data available indoors, which allows the models to effectively capture aisle and turn patterns, thus validating the hypothesis regarding their suitability for indoor navigation.

- Evaluation Metrics: The use of multiple evaluation metrics, including accuracy, AUC, Precision, Recall, and F1 score, provides a comprehensive assessment of model performance across different scenarios. The detailed breakdown of results in Tables 3 and 5 further supports the claims made about the models' effectiveness, particularly in addressing the challenges of turn prediction and navigation.

- Ablation Studies: The ablation studies conducted in the paper reinforce the hypotheses by isolating the effects of training data configurations on model performance. The results indicate that while no-intent models excel in indoor settings, intent-based models benefit from a combination of indoor and outdoor training data, leading to improved overall performance. This nuanced analysis strengthens the argument for the proposed model architectures and their respective applications.

In conclusion, the experiments and results in the paper provide robust support for the scientific hypotheses, demonstrating the effectiveness of different model configurations in varying environments and addressing key challenges in assistive navigation technologies. The comprehensive evaluation and analysis presented contribute to the validity of the claims made in the research.

What are the contributions of this paper?

The paper titled "AI Guide Dog: Egocentric Path Prediction on Smartphone" presents several key contributions to the field of navigation assistance for visually impaired individuals:

- Development of AIGD: The paper introduces the AI Guide Dog (AIGD), a lightweight egocentric navigation assistance system designed for real-time deployment on smartphones. This system addresses significant challenges in blind navigation by utilizing a vision-only, multi-label classification approach to predict directional commands, ensuring safe traversal across diverse environments.

- Goal-Based Outdoor Navigation: A novel technique is proposed for enabling goal-based outdoor navigation by integrating GPS signals and high-level directions. Alongside this, the multi-label formulation addresses uncertain multi-path predictions, which is what makes destination-free indoor navigation possible.

- Generalized Model: The AIGD model is the first of its kind to handle both goal-oriented and exploratory navigation scenarios across indoor and outdoor settings. This establishes a new state-of-the-art in assistive navigation systems for the visually impaired.

- Encouragement of Further Innovations: The paper presents methods, datasets, evaluations, and deployment insights aimed at encouraging further innovations in assistive navigation technologies, thereby contributing to the advancement of the field.

These contributions highlight the paper's focus on improving navigation systems for visually impaired individuals through innovative technology and methodologies.

What work can be continued in depth?

Future research could explore several avenues to enhance the capabilities of assistive navigation technologies, particularly in the context of the AI Guide Dog (AIGD) system.

1. Advanced Architectures

While the current models utilize simpler architectures due to latency constraints, there is potential for exploring more advanced architectures that could improve performance and robustness in real-world applications.

2. Dataset Expansion

Expanding the dataset to include a broader range of scenarios and diverse smartphone camera data could facilitate the exploration of larger and more complex models, enhancing the system's generalizability across different environments and hardware configurations.

3. User-Environment Dynamics

Further research into the behavior of entities in the user’s environment, such as obstacles and pedestrians, could enable the generation of more nuanced navigation paths. This would help bridge the gap between current capabilities and the real-world needs of visually impaired individuals.

4. Multi-Directional Predictions

The current models predict three directional classes (LEFT, RIGHT, FRONT). Future work could extend this to support more nuanced directions, such as turning angles at 10-degree increments, which would provide users with clearer navigation instructions.
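One way such finer-grained labels could be derived is by snapping a signed turning angle to the nearest 10-degree bin. The sketch below is purely illustrative: the sign convention (negative for left, positive for right), the wrap to the range [-180, 180), and both function names are assumptions, since the digest only mentions the 10-degree increment.

```python
import math


def quantize_turn_angle(angle_deg: float, step_deg: int = 10) -> int:
    """Snap a turning angle to the nearest multiple of `step_deg` in [-180, 180)."""
    wrapped = (angle_deg + 180.0) % 360.0 - 180.0
    snapped = math.floor(wrapped / step_deg + 0.5) * step_deg
    # +180 and -180 describe the same turn, so fold them into one bin.
    return -180 if snapped == 180 else snapped


def angle_class_index(angle_deg: float, step_deg: int = 10) -> int:
    """Map the snapped angle to a class index in 0 .. 360/step_deg - 1."""
    return (quantize_turn_angle(angle_deg, step_deg) + 180) // step_deg


print(quantize_turn_angle(37.0))   # 40
print(quantize_turn_angle(-12.0))  # -10
print(angle_class_index(0.0))      # 18
```

At a 10-degree step this yields 36 classes instead of three, which would make the label imbalance discussed earlier considerably harder to manage.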

5. Integration of Intent Features

Incorporating intent features and GPS signals has been shown to enhance model performance, particularly in outdoor scenarios. Continued exploration of these features could further improve navigation assistance in dynamic environments.

These areas of research could significantly advance the effectiveness and reliability of navigation systems for visually impaired individuals.