Topological Deep Learning with State-Space Models: A Mamba Approach for Simplicial Complexes

Marco Montagna, Simone Scardapane, Lev Telyatnikov · September 18, 2024

Summary

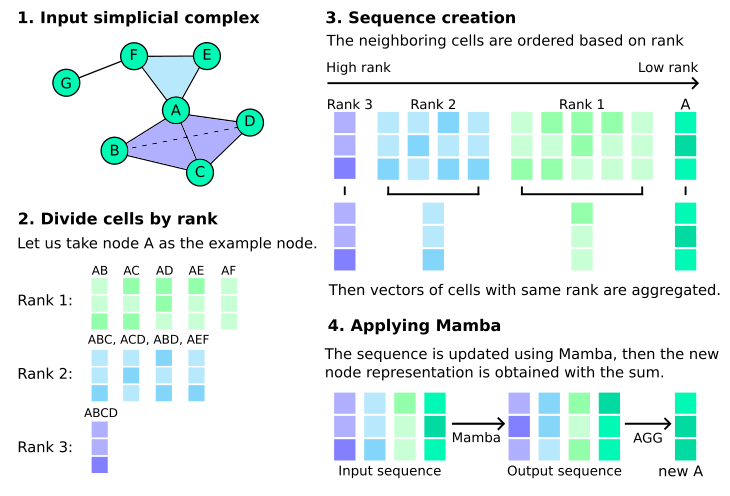

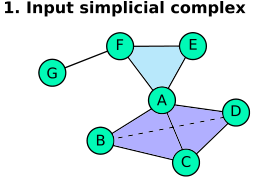

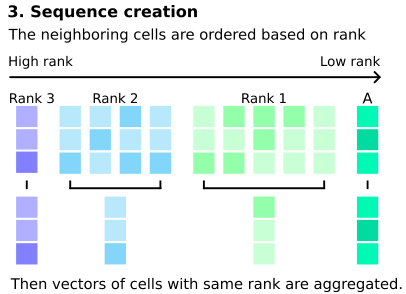

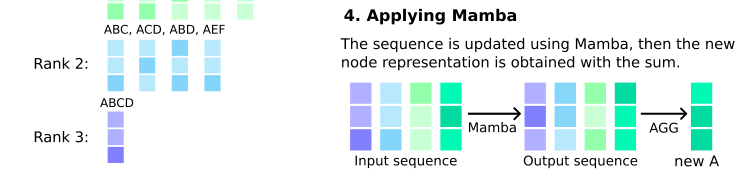

This paper introduces a novel architecture for topological deep learning on simplicial complexes, built on the Mamba state-space model, to address the limitations of Graph Neural Networks in handling higher-order interactions. By combining sequence modeling with topological deep learning, the method enables direct communication between all higher-order structures, so information propagates across different ranks in a single step. The authors run experiments on several graph datasets converted to simplicial complexes and report performance competitive with state-of-the-art models for simplicial complexes. The paper also evaluates the impact of an efficient batching technique on memory usage, training time, and performance.

Introduction

Background

Overview of topological data analysis and its relevance to deep learning

Challenges faced by traditional Graph Neural Networks in handling higher-order interactions

Objective

To introduce a new architecture that addresses the limitations of Graph Neural Networks by focusing on simplicial complexes

To present a Mamba state-space model-based approach for topological deep learning

Method

Data Collection

Description of the graph datasets used for the study

Conversion of graph datasets into simplicial complexes
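
The outline does not say which conversion the authors use. A common choice is clique lifting, where every clique of k+1 nodes in the graph becomes a simplex of rank k. The following is a minimal sketch under that assumption; the function name `clique_lift` and the `max_rank` parameter are illustrative and not taken from the paper.

```python
def clique_lift(edges, max_rank=2):
    """Lift an undirected graph to its clique complex, up to max_rank.

    Returns a dict mapping rank -> sorted list of simplices, where each
    simplex is a sorted tuple of node ids. Nodes are taken from the edge
    list, so isolated nodes are not included in this sketch.
    """
    adjacency = {}
    for u, v in edges:
        if u == v:
            continue  # ignore self-loops
        adjacency.setdefault(u, set()).add(v)
        adjacency.setdefault(v, set()).add(u)

    complex_ = {
        0: [(n,) for n in sorted(adjacency)],
        1: sorted({tuple(sorted((u, v))) for u, v in edges if u != v}),
    }
    for rank in range(2, max_rank + 1):
        higher = set()
        for simplex in complex_[rank - 1]:
            # A node adjacent to every vertex of a clique extends it by one rank.
            common = set.intersection(*(adjacency[n] for n in simplex))
            for n in common:
                higher.add(tuple(sorted(simplex + (n,))))
        complex_[rank] = sorted(higher)
    return complex_


# A triangle (0, 1, 2) with a pendant edge (2, 3).
lifted = clique_lift([(0, 1), (1, 2), (0, 2), (2, 3)], max_rank=2)
```

Here the triangle yields a single rank-2 simplex, while the pendant edge contributes only a rank-1 simplex.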

Data Preprocessing

Techniques for preparing simplicial complexes for the Mamba state-space model

Methods for handling higher-order structures in the data

Model Architecture

Detailed explanation of the Mamba state-space model

Integration of sequence modeling with topological deep learning

Description of how the model facilitates efficient information propagation across different ranks in a single step
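
The points above can be illustrated with a simplified sketch. It assumes that each node receives a sequence made of the features of the simplices containing it, ordered by rank, and that the sequence is processed by a discretized linear state-space recurrence, h_t = A_bar h_{t-1} + B_bar x_t and y_t = C h_t. Mamba itself makes these parameters input-dependent and uses a hardware-efficient scan; the fixed matrices, the helper names `node_sequences` and `ssm_scan`, and the toy features below are assumptions for illustration only.

```python
import numpy as np


def node_sequences(complex_, features):
    """For each node, stack the features of all simplices containing it,
    ordered by increasing rank. Returns node -> array of shape (T, d)."""
    sequences = {node: [] for (node,) in complex_[0]}
    for rank in sorted(complex_):
        for simplex in complex_[rank]:
            for node in simplex:
                sequences[node].append(features[simplex])
    return {node: np.stack(seq) for node, seq in sequences.items()}


def ssm_scan(x, A_bar, B_bar, C):
    """Run h_t = A_bar @ h_{t-1} + B_bar @ x_t, y_t = C @ h_t over x of shape (T, d)."""
    h = np.zeros(A_bar.shape[0])
    outputs = []
    for x_t in x:
        h = A_bar @ h + B_bar @ x_t
        outputs.append(C @ h)
    return np.stack(outputs)


# Toy complex: one triangle with its edges and nodes (hypothetical data).
toy_complex = {
    0: [(0,), (1,), (2,)],
    1: [(0, 1), (0, 2), (1, 2)],
    2: [(0, 1, 2)],
}
# Hypothetical features: every simplex gets a 2-dimensional vector of ones.
toy_features = {s: np.ones(2) for cells in toy_complex.values() for s in cells}

sequences = node_sequences(toy_complex, toy_features)
A_bar = 0.5 * np.eye(2)   # fixed state transition (not input-dependent as in Mamba)
B_bar = np.eye(2)         # input projection
C = np.ones((1, 2))       # output projection
y = ssm_scan(sequences[0], A_bar, B_bar, C)
```

Because the final output `y[-1]` depends on every element of the sequence, a node's update can draw on simplices of all ranks in one pass, rather than relaying information rank by rank.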

Evaluation

Techniques for assessing the performance of the proposed model

Comparison with state-of-the-art models for simplicial complexes

Results

Impact of Efficient Batching

Analysis of memory usage, training time, and performance improvements with efficient batching

Experimental Results

Presentation of results on various graph datasets converted to simplicial complexes

Discussion of the model's effectiveness in handling higher-order interactions

Conclusion

Summary of Contributions

Recap of the novel architecture and its advantages over existing models

Future Work

Potential areas for further research and development

Implications

Discussion of the broader impact of the proposed method on the field of topological deep learning

Basic info

papers

machine learning

artificial intelligence

Insights

How does the proposed approach evaluate the impact of an efficient batching technique on memory usage, training time, and performance?

What does the paper combine to enable direct communication between all higher-order structures in the context of simplicial complexes?

What is the main focus of the novel architecture for topological deep learning mentioned in the text?

How does the Mamba state-space model-based approach address limitations of Graph Neural Networks in handling higher-order interactions?