Separating Tongue from Thought: Activation Patching Reveals Language-Agnostic Concept Representations in Transformers

Clément Dumas, Chris Wendler, Veniamin Veselovsky, Giovanni Monea, Robert West·November 13, 2024

Summary

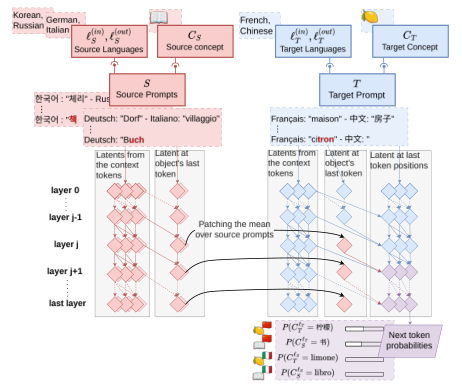

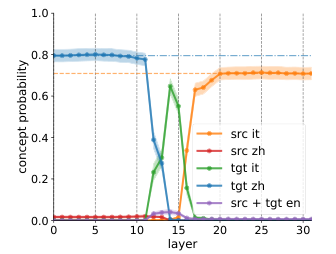

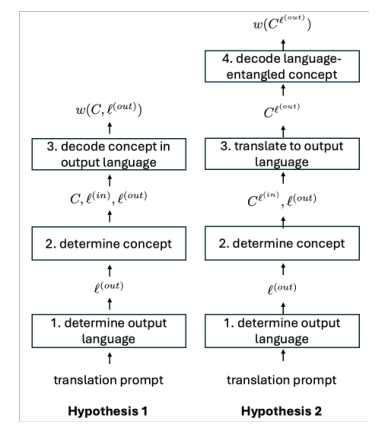

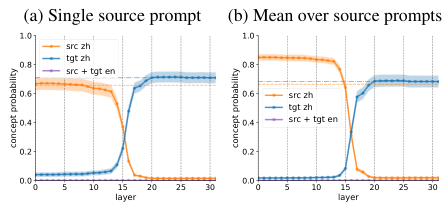

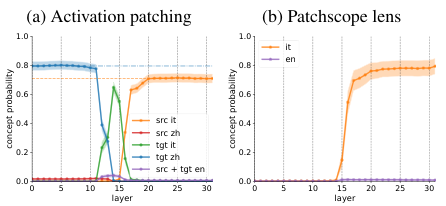

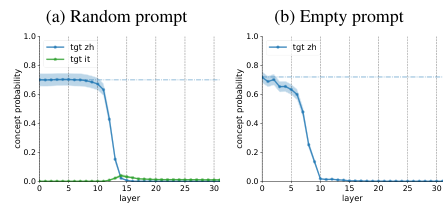

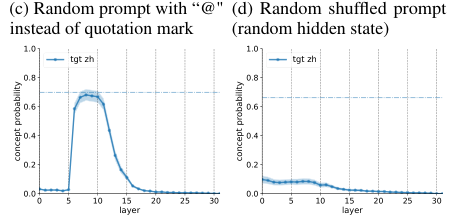

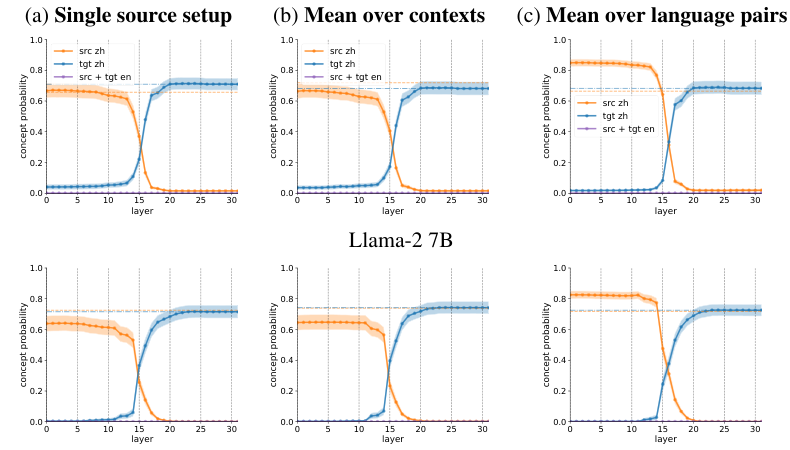

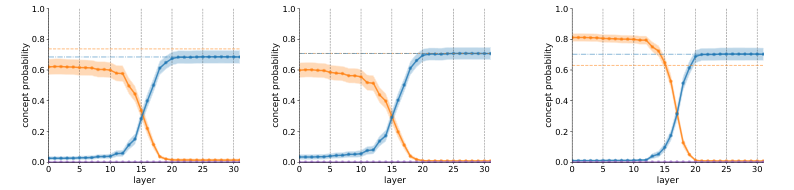

The paper investigates whether large language models develop universal concept representations that are independent of language. Using activation patching on transformer-based models during a word-translation task, the study finds that the output language is encoded in the latents at an earlier layer than the concept being translated. Key experiments show that activation patching alone can change the concept without changing the output language, and vice versa. Patching in the mean representation of a concept across several languages does not impair the model's ability to translate it and in fact improves it. These results suggest that the models contain language-agnostic concept representations.
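To make the mechanics of activation patching concrete, here is a minimal sketch built on heavy simplifying assumptions. The "model" is a hypothetical stack of toy residual layers acting on a single 2-D hidden state rather than a transformer. The helper names (`forward`, `mean_latent`) and all numbers are illustrative and do not come from the paper's code. In a real LLM, the latent would be cached at a chosen token position of a source prompt and written into the matching position of a target prompt. Attention to the target's other tokens would then still influence the output.

```python
from typing import Callable, List, Optional, Sequence

Vector = List[float]
Layer = Callable[[Vector], Vector]  # maps a hidden state to a residual update


def forward(
    layers: Sequence[Layer],
    x: Vector,
    patch_layer: Optional[int] = None,
    patch_value: Optional[Vector] = None,
    cache: Optional[List[Vector]] = None,
) -> Vector:
    """Run a toy residual stream (hypothetical stand-in for a transformer).

    If patch_layer is set, the hidden state entering that layer is replaced
    by patch_value (activation patching). If cache is given, the hidden state
    entering each layer is recorded so it can be patched into another run.
    """
    h = list(x)
    for i, layer in enumerate(layers):
        if i == patch_layer and patch_value is not None:
            h = list(patch_value)  # overwrite the latent with the source one
        if cache is not None:
            cache.append(list(h))
        update = layer(h)
        h = [a + b for a, b in zip(h, update)]  # residual connection
    return h


def mean_latent(latents: Sequence[Vector]) -> Vector:
    """Element-wise mean of several latents, e.g. the same concept
    cached from prompts in different languages (illustrative only)."""
    n = len(latents)
    return [sum(column) / n for column in zip(*latents)]


# Hypothetical toy layers; real transformer blocks mix token positions.
layers: List[Layer] = [
    lambda h: [0.5 * h[0], 0.0],
    lambda h: [0.0, h[0] - h[1]],
    lambda h: [h[1], h[0]],
]

# Cache latents from two "source" runs and run an unpatched "target".
source_cache: List[Vector] = []
second_cache: List[Vector] = []
source_out = forward(layers, [1.0, 2.0], cache=source_cache)
forward(layers, [2.0, 0.0], cache=second_cache)
target_out = forward(layers, [3.0, -1.0])

# Patch the source latent into the target run at layer 1.
patched_out = forward(layers, [3.0, -1.0], patch_layer=1, patch_value=source_cache[1])

# Patch the mean of the two cached latents instead.
mean_patched_out = forward(
    layers,
    [3.0, -1.0],
    patch_layer=1,
    patch_value=mean_latent([source_cache[1], second_cache[1]]),
)
print(source_out, target_out, patched_out, mean_patched_out)
```

Because this toy model has a single position, patching the full state makes the patched run reproduce the source run exactly. The mean-latent call mirrors the paper's idea of patching a concept's average representation across languages only in spirit.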
