R²AG: Incorporating Retrieval Information into Retrieval Augmented Generation

Fuda Ye, Shuangyin Li, Yongqi Zhang, Lei Chen·June 19, 2024

Summary

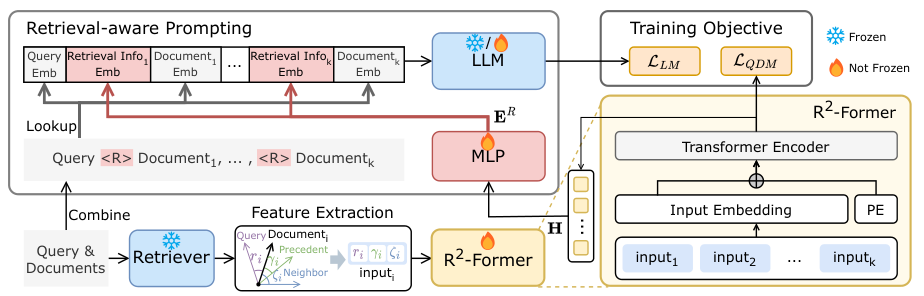

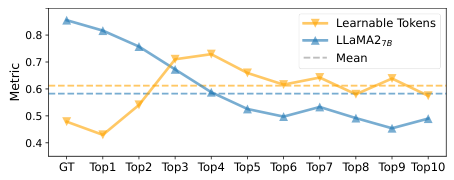

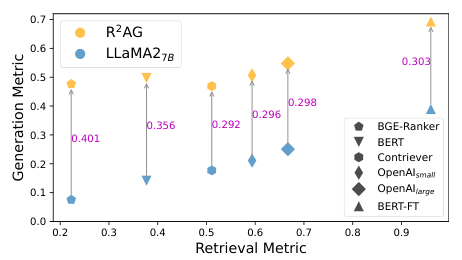

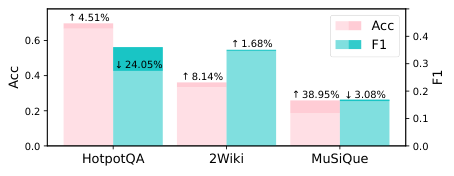

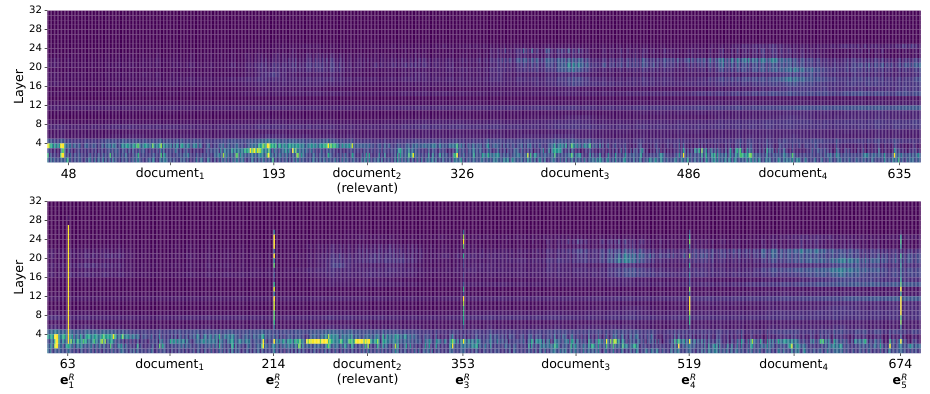

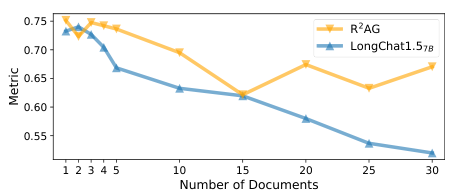

This paper introduces R²AG, a retrieval-augmented generation framework that addresses the semantic gap between large language models (LLMs) and retrievers. R²AG pairs an R²-Former, which extracts features from the retriever's outputs, with a retrieval-aware prompting strategy. Together they improve the LLM's ability to use external knowledge, even in low-resource scenarios. Experiments on five diverse datasets demonstrate R²AG's effectiveness, robustness, and efficiency, and show that retrieval information guides LLMs toward more accurate responses. On question-answering tasks the model outperforms competing methods, remains robust as the number of retrieved documents varies, and adds little to inference time. Further comparisons with other methods show competitive performance on complex tasks and in distinguishing relevant documents from irrelevant ones.
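To make "features from the retriever" and "retrieval-aware prompting" more concrete, here is a minimal sketch. It assumes the retriever exposes a dense embedding for the query and for each ranked document. The summary does not specify which features R²-Former consumes, so the three used below (query relevance, mean similarity to higher-ranked documents, mean similarity to ranking neighbors) are illustrative assumptions. The `<RET>` marker and the helper names `retrieval_features` and `retrieval_aware_prompt` are hypothetical. This is a text-level illustration only, not the authors' implementation, which operates on learned representations.

```python
import math
from typing import List, Sequence


def cosine(u: Sequence[float], v: Sequence[float]) -> float:
    """Cosine similarity between two dense vectors (0.0 if either is all zeros)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0.0 or norm_v == 0.0:
        return 0.0
    return dot / (norm_u * norm_v)


def retrieval_features(query: Sequence[float],
                       docs: List[Sequence[float]]) -> List[List[float]]:
    """Return one [relevance, precedent, neighbor] triple per document.

    Hypothetical feature set, chosen for illustration. Documents are assumed
    to be in the retriever's ranked order.
    - relevance: similarity between the query and the document
    - precedent: mean similarity to all higher-ranked documents (0.0 for the first)
    - neighbor:  mean similarity to the adjacent documents in the ranking
    """
    feats = []
    n = len(docs)
    for i, d in enumerate(docs):
        relevance = cosine(query, d)
        earlier = [cosine(d, docs[j]) for j in range(i)]
        precedent = sum(earlier) / len(earlier) if earlier else 0.0
        adjacent = [cosine(d, docs[j]) for j in (i - 1, i + 1) if 0 <= j < n]
        neighbor = sum(adjacent) / len(adjacent) if adjacent else 0.0
        feats.append([relevance, precedent, neighbor])
    return feats


def retrieval_aware_prompt(question: str, passages: List[str],
                           feats: List[List[float]]) -> str:
    """Place a hypothetical <RET> slot before each passage.

    In an embedding-level design, each <RET> slot would hold a learned vector
    derived from that passage's features; here only the relevance score is
    printed next to the slot, as a readable stand-in.
    """
    lines = []
    for k, (passage, f) in enumerate(zip(passages, feats), start=1):
        lines.append(f"<RET> Document {k} (relevance={f[0]:.2f}): {passage}")
    lines.append(f"Question: {question}")
    lines.append("Answer:")
    return "\n".join(lines)


if __name__ == "__main__":
    # Toy 2-D embeddings standing in for real retriever outputs.
    query = [1.0, 0.0]
    doc_vecs = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
    passages = ["passage A", "passage B", "passage C"]
    feats = retrieval_features(query, doc_vecs)
    print(retrieval_aware_prompt("Which passage answers the query?", passages, feats))
```

The design choice here is to compute features only from quantities the retriever already produces (embeddings and ranking), which matches the summary's point that retrieval information is otherwise discarded before generation; how those features are encoded and fed to the LLM is left open in this sketch.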
