Online Context Learning for Socially-compliant Navigation

Iaroslav Okunevich, Alexandre Lombard, Tomas Krajnik, Yassine Ruichek, Zhi Yan · June 17, 2024

Summary

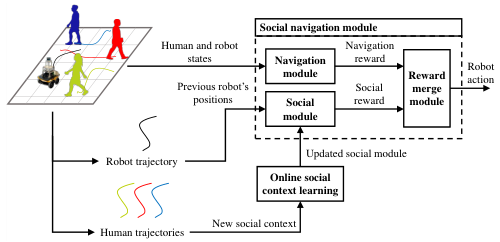

The paper presents an online context learning method for socially-compliant robot navigation that combines deep reinforcement learning (DRL) with online robot learning (ORL). Because human factors and environmental contexts vary widely and cannot be enumerated in advance, the method uses a two-layer structure: a DRL layer produces basic navigation commands, and an upper layer refines them using a social module that is updated online with real-time human trajectory data. This improves social efficiency and navigation robustness, and the method outperforms state-of-the-art techniques by 8% in complex scenarios. Key contributions include a Markov decision process (MDP) formulation of the navigation problem, a value-based framework built on SARL (Socially Attentive Reinforcement Learning), and a social neural network with online adaptation. Experiments with simulated and real robots show that the method maintains social distance, adapts to new contexts, and improves safety. Future work will focus on long-term real-world evaluations and on improving the system's robustness across a wider range of public spaces.
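The two-layer idea can be sketched as a single control step. This is a minimal illustration under stated assumptions, not the authors' implementation: the DRL policy is any callable that returns a velocity command, and the upper layer is a hypothetical rule (`socialize`) that scales that command down when the nearest human is closer than a learned `preferred_clearance`. Both names and the scaling rule are illustrative assumptions.

```python
import math
from typing import Callable, List, Tuple

Velocity = Tuple[float, float]


def socialize(
    base_cmd: Velocity,
    robot_xy: Tuple[float, float],
    humans_xy: List[Tuple[float, float]],
    preferred_clearance: float,
) -> Velocity:
    """Upper layer (hypothetical rule): slow the DRL command inside the learned clearance."""
    if not humans_xy:
        return base_cmd
    nearest = min(math.dist(robot_xy, h) for h in humans_xy)
    # 1.0 when the nearest human is outside the clearance, proportionally smaller inside it
    scale = min(1.0, nearest / preferred_clearance)
    return (base_cmd[0] * scale, base_cmd[1] * scale)


def control_step(
    drl_policy: Callable[[dict], Velocity], obs: dict, preferred_clearance: float
) -> Velocity:
    """One control cycle: bottom layer proposes, upper layer socializes (assumed interface)."""
    base = drl_policy(obs)  # bottom layer: basic navigation command
    return socialize(base, obs["robot"], obs["humans"], preferred_clearance)
```

In this sketch the online learning would live entirely in how `preferred_clearance` is updated from observed human behavior; the DRL policy itself stays fixed.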

Introduction

Background

Evolution of socially-aware robot navigation

Challenges in adapting to human factors and environments

Objective

Develop a method that combines DRL and ORL for real-time adaptation

Improve social efficiency and navigation robustness

Outperform state-of-the-art techniques by 8% in complex scenarios

Method

Deep Reinforcement Learning (DRL) Layer

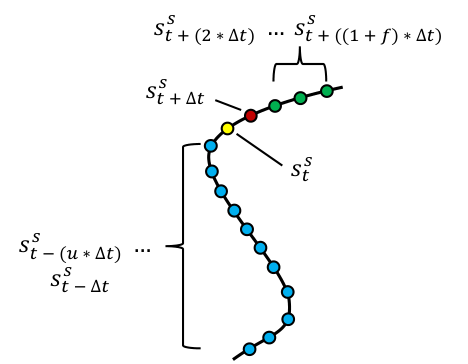

Markov Decision Process (MDP) Formulation

Definition of states, actions, and rewards

Exploration-exploitation trade-off
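A minimal sketch of such an MDP, assuming the CADRL/SARL conventions from the crowd-navigation literature rather than this paper's exact definitions: each agent's state is position, velocity, and radius; the action set is a discrete grid of (speed, heading) pairs; the reward penalizes collisions and intrusions into a 0.2 m discomfort zone and rewards reaching the goal; and epsilon-greedy selection handles the exploration-exploitation trade-off. All constants and names (`ACTIONS`, `reward`, `epsilon_greedy`) are assumptions.

```python
import math
import random
from dataclasses import dataclass


@dataclass
class AgentState:
    """Observable state of the robot or one human (assumed fields)."""
    x: float
    y: float
    vx: float
    vy: float
    radius: float


# Hypothetical discrete action space: 5 speeds x 8 headings
SPEEDS = [0.0, 0.25, 0.5, 0.75, 1.0]                 # m/s, assumed
HEADINGS = [2 * math.pi * k / 8 for k in range(8)]   # rad, assumed
ACTIONS = [(v, th) for v in SPEEDS for th in HEADINGS]


def reward(robot, humans, goal, goal_tol=0.3, discomfort_dist=0.2):
    """CADRL/SARL-style reward; constants are assumed, not taken from the paper."""
    # Surface-to-surface gap between the robot and each human
    gaps = [
        math.hypot(robot.x - h.x, robot.y - h.y) - robot.radius - h.radius
        for h in humans
    ]
    d_min = min(gaps, default=math.inf)
    if d_min < 0:
        return -0.25                      # collision
    if d_min < discomfort_dist:
        return -0.1 + d_min / 2           # intrusion into the discomfort zone
    if math.hypot(robot.x - goal[0], robot.y - goal[1]) < goal_tol:
        return 1.0                        # goal reached
    return 0.0


def epsilon_greedy(values, epsilon, rng=random):
    """Return a random action index with probability epsilon, else the best one."""
    if rng.random() < epsilon:
        return rng.randrange(len(values))
    return max(range(len(values)), key=values.__getitem__)
```

Decaying `epsilon` over training episodes is one common way to move gradually from exploration to exploitation.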

Value-Based Reinforcement Learning

State-value network (SARL) with one-step lookahead for navigation decisions
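In SARL-style planners the network learns a state value V(s) rather than Q(s, a), and the action is chosen by a one-step lookahead over the discrete action set, with the discount exponent scaled by the time step and the preferred speed. The sketch below shows that selection rule and the matching temporal-difference target; `propagate`, `reward`, and `value` are hypothetical callables standing in for the motion model, the reward function, and the trained value network, and the default constants are assumptions.

```python
from typing import Callable, Sequence, TypeVar

S = TypeVar("S")  # state type
A = TypeVar("A")  # action type


def lookahead_action(
    state: S,
    actions: Sequence[A],
    propagate: Callable[[S, A], S],   # hypothetical one-step motion model
    reward: Callable[[S, A], float],  # immediate reward R(s, a)
    value: Callable[[S], float],      # learned value network V(s)
    gamma: float = 0.9,
    dt: float = 0.25,
    v_pref: float = 1.0,
) -> A:
    """Pick argmax_a R(s, a) + gamma**(dt * v_pref) * V(s')."""
    discount = gamma ** (dt * v_pref)
    return max(
        actions,
        key=lambda a: reward(state, a) + discount * value(propagate(state, a)),
    )


def td_target(
    r: float,
    next_value: float,
    gamma: float = 0.9,
    dt: float = 0.25,
    v_pref: float = 1.0,
    done: bool = False,
) -> float:
    """One-step temporal-difference target used to regress V(s)."""
    if done:
        return r  # no bootstrapping past a terminal state
    return r + gamma ** (dt * v_pref) * next_value
```

Learning V(s) and deriving actions by lookahead keeps the network input independent of the action set, which is convenient when the action grid changes.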

Online Robot Learning (ORL) Layer

Social Module Update

Real-time data collection of human trajectories

Incorporation into the social neural network
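One way to organize the real-time data collection is a fixed-length sliding window of tracked human positions, from which velocities and human-human distances can be extracted as online training samples. This is a hypothetical data structure (`TrajectoryBuffer` is not from the paper), assuming a tracker that supplies per-frame positions keyed by human ID.

```python
import math
from collections import deque


class TrajectoryBuffer:
    """Sliding window of recent human positions (hypothetical data structure)."""

    def __init__(self, max_frames: int = 50):
        # Each frame maps human_id -> (x, y); old frames drop out automatically
        self.frames = deque(maxlen=max_frames)

    def add_frame(self, positions: dict) -> None:
        self.frames.append(dict(positions))

    def velocities(self, dt: float) -> dict:
        """Finite-difference velocity of every human present in the last two frames."""
        if len(self.frames) < 2:
            return {}
        prev, curr = self.frames[-2], self.frames[-1]
        return {
            hid: ((x - prev[hid][0]) / dt, (y - prev[hid][1]) / dt)
            for hid, (x, y) in curr.items()
            if hid in prev
        }

    def interpersonal_distances(self) -> list:
        """Pairwise human-human distances: samples of how close people choose to be."""
        samples = []
        for frame in self.frames:
            ids = sorted(frame)
            for i, a in enumerate(ids):
                for b in ids[i + 1:]:
                    (xa, ya), (xb, yb) = frame[a], frame[b]
                    samples.append(math.hypot(xa - xb, ya - yb))
        return samples
```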

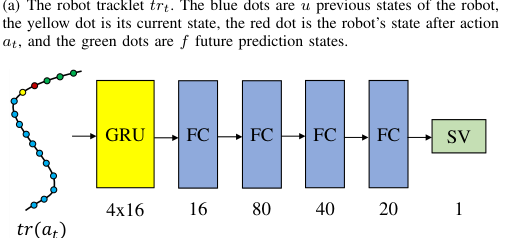

Social Neural Network

Architecture and design for social context understanding

Online adaptation mechanism
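The online adaptation mechanism can be illustrated with the simplest possible learner: a linear model updated by one stochastic gradient step per new sample, so it tracks a changing context without retraining from scratch. The paper uses a social neural network; this linear `OnlineSocialModel`, its input features, and its target (for example, a preferred human-robot clearance inferred from observed trajectories) are assumptions chosen to keep the sketch short.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class OnlineSocialModel:
    """Linear regressor adapted one sample at a time (hypothetical stand-in)."""
    n_features: int
    lr: float = 0.05
    weights: List[float] = field(default_factory=list)
    bias: float = 0.0

    def __post_init__(self):
        if not self.weights:
            self.weights = [0.0] * self.n_features

    def predict(self, x: List[float]) -> float:
        return sum(w * xi for w, xi in zip(self.weights, x)) + self.bias

    def update(self, x: List[float], target: float) -> float:
        """One SGD step on 0.5 * (pred - target)**2; returns the pre-update error."""
        err = self.predict(x) - target
        self.weights = [w - self.lr * err * xi for w, xi in zip(self.weights, x)]
        self.bias -= self.lr * err
        return err
```

A small learning rate trades adaptation speed for stability; too large a value makes the model chase noise in individual trajectories.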

Performance Evaluation

Experimental Setup

Simulated and real robot platforms

Metrics: social distance, adaptability, safety
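The social-distance and safety metrics can be computed from logged trajectories roughly as follows. The definitions (surface-to-surface gap, a 0.5 m personal-space threshold, time-aligned robot and human paths) and the function name `episode_metrics` are assumptions for illustration; the paper's exact metric definitions may differ.

```python
import math
from typing import Dict, List, Sequence, Tuple

Point = Tuple[float, float]


def episode_metrics(
    robot_path: Sequence[Point],
    human_paths: Sequence[Sequence[Point]],  # each path aligned with robot_path in time
    robot_radius: float = 0.3,
    human_radius: float = 0.3,
    personal_space: float = 0.5,
) -> Dict[str, float]:
    """Common crowd-navigation metrics (assumed definitions)."""
    gaps: List[float] = []
    for t, r in enumerate(robot_path):
        # Surface-to-surface gap to the nearest human at time step t
        gap = min(
            (math.dist(r, path[t]) - robot_radius - human_radius for path in human_paths),
            default=math.inf,
        )
        gaps.append(gap)
    steps = len(gaps)
    return {
        "min_gap": min(gaps) if gaps else math.inf,
        "collision": float(any(g < 0 for g in gaps)),
        "intrusion_ratio": sum(g < personal_space for g in gaps) / steps if steps else 0.0,
    }
```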

Results and Comparison

State-of-the-art technique comparison (8% improvement)

Complex scenario analysis

Future Work

Long-Term Real-World Evaluations

Deployment in diverse public spaces

Data collection and performance analysis

System Robustness Expansion

Addressing new challenges and scenarios

Continuous improvement and adaptation

Conclusion

Summary of key contributions

Implications for socially-compliant robotics

Potential applications and future research directions
