OLMES: A Standard for Language Model Evaluations

Yuling Gu, Oyvind Tafjord, Bailey Kuehl, Dany Haddad, Jesse Dodge, Hannaneh Hajishirzi · June 12, 2024

Summary

OLMES is an open, documented, and reproducible standard for evaluating large language models. It addresses inconsistent performance assessments, which arise when the same model is scored under differing evaluation practices. Focusing on tasks such as multiple-choice question answering, it gives guidelines for prompt formatting, the choice of in-context examples, normalization of answer scores, and task formulation, so that comparisons between models are fair and meaningful. The authors select a set of benchmark tasks, evaluate 15 open-source LLMs under the standard, and release clear documentation and code. The goal is to strengthen scientific credibility by standardizing evaluation methods, so that models of different sizes are compared on the same footing and their capabilities are reported accurately.
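To make the role of normalization concrete, the sketch below shows how the predicted answer to a multiple-choice question can change depending on how per-choice log-likelihoods are normalized. It is a minimal illustration of commonly used schemes (no normalization, per-character, per-token, and subtracting an unconditional log-likelihood), not the OLMES implementation. The `Choice` class, the function names, and the numeric log-probabilities are all hypothetical, and this summary does not state which scheme OLMES recommends for which task.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Choice:
    """One answer option with hypothetical model scores attached."""
    text: str
    logprob: float                          # sum of log P(token | prompt) over the answer
    num_tokens: int                         # number of tokens in the answer
    uncond_logprob: Optional[float] = None  # log P(answer | neutral prompt), for "pmi"


def score(choice: Choice, normalization: str) -> float:
    """Return the normalized score of a single answer choice."""
    if normalization == "none":
        return choice.logprob
    if normalization == "character":
        return choice.logprob / len(choice.text)
    if normalization == "token":
        return choice.logprob / choice.num_tokens
    if normalization == "pmi":
        if choice.uncond_logprob is None:
            raise ValueError("pmi normalization needs uncond_logprob")
        return choice.logprob - choice.uncond_logprob
    raise ValueError(f"unknown normalization: {normalization}")


def predict(choices: List[Choice], normalization: str) -> int:
    """Return the index of the highest-scoring choice."""
    scores = [score(c, normalization) for c in choices]
    return max(range(len(choices)), key=scores.__getitem__)


# Made-up numbers: a short answer and a longer paraphrase of it.
choices = [
    Choice("Paris", logprob=-4.0, num_tokens=1, uncond_logprob=-6.0),
    Choice("the capital city of France", logprob=-9.0, num_tokens=5,
           uncond_logprob=-20.0),
]

predictions = {
    name: predict(choices, name)
    for name in ("none", "character", "token", "pmi")
}
print(predictions)
```

With these made-up numbers, the raw log-likelihood favors the shorter answer, while the three normalized variants favor the longer one. That is the kind of discrepancy a fixed evaluation standard is meant to remove from model comparisons.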

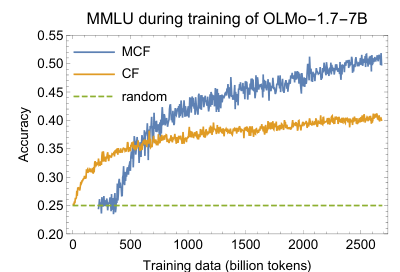

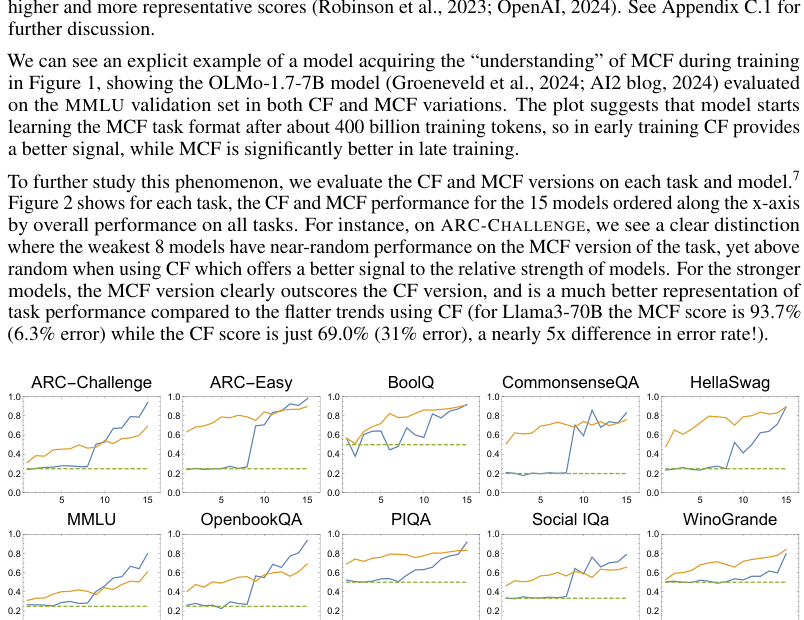

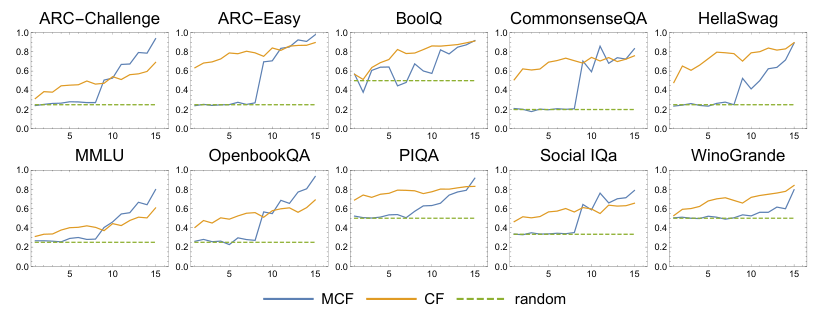
