OKAMI: Teaching Humanoid Robots Manipulation Skills through Single Video Imitation

Jinhan Li, Yifeng Zhu, Yuqi Xie, Zhenyu Jiang, Mingyo Seo, Georgios Pavlakos, Yuke Zhu · October 15, 2024

Summary

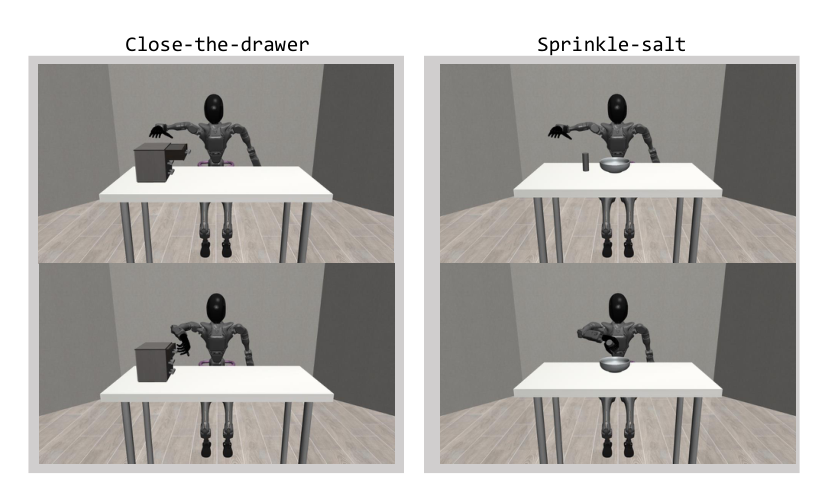

OKAMI is a method that enables humanoid robots to learn manipulation skills from a single RGB-D video demonstration. It uses an open-world vision pipeline to identify task-relevant objects and retargets body motions and hand poses separately, which lets it generalize well across varying conditions. OKAMI's rollout trajectories are then used to train closed-loop visuomotor policies, which achieve an average success rate of 79.2% without labor-intensive teleoperation.

Introduction

Background

Overview of humanoid robots and their challenges in learning manipulation skills

Importance of visual learning methods in robotics

Objective

To present OKAMI, a method that enables humanoid robots to learn manipulation skills from a single RGB-D video demonstration, focusing on its open-world vision pipeline, its separate retargeting of body motions and hand poses, and its results

Method

Data Collection

Description of the RGB-D video dataset used for demonstrations

Techniques for capturing diverse and challenging manipulation scenarios

Data Preprocessing

Methods for filtering and preparing the collected data for OKAMI's learning process

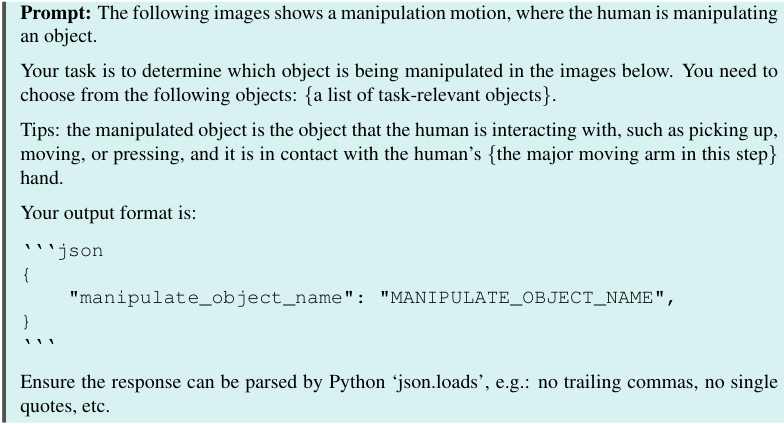

Handling of object identification and segmentation in the video data

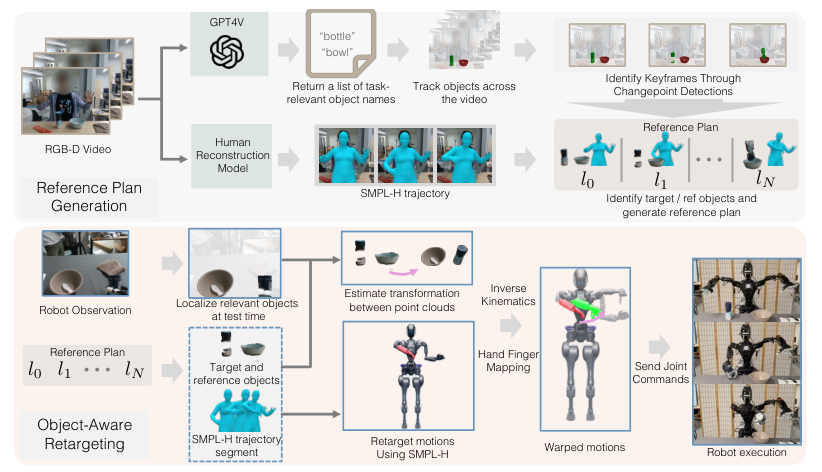

OKAMI Pipeline

Task-Object Identification

Explanation of the open-world vision pipeline for recognizing task-relevant objects

Algorithms and techniques for object detection and tracking in dynamic scenes
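To make the tracking step concrete, here is a minimal sketch of frame-to-frame association by bounding-box overlap (IoU). This is a generic stand-in technique, not necessarily what OKAMI uses; the function names, the `min_iou` threshold, and the box format are all assumptions chosen for illustration.

```python
from typing import Dict, List, Tuple

# Hypothetical box format: (x_min, y_min, x_max, y_max) in pixels.
Box = Tuple[float, float, float, float]


def iou(a: Box, b: Box) -> float:
    """Intersection-over-union of two axis-aligned boxes."""
    ix_min, iy_min = max(a[0], b[0]), max(a[1], b[1])
    ix_max, iy_max = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix_max - ix_min) * max(0.0, iy_max - iy_min)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def track(prev: Dict[str, Box], detections: List[Box],
          min_iou: float = 0.3) -> Dict[str, Box]:
    """Greedily match each named object to its best-overlapping new detection.

    Objects with no detection above `min_iou` are dropped from the result.
    """
    updated: Dict[str, Box] = {}
    used = set()
    for name, box in prev.items():
        best_idx, best_score = None, min_iou
        for i, det in enumerate(detections):
            if i in used:
                continue
            score = iou(box, det)
            if score >= best_score:
                best_idx, best_score = i, score
        if best_idx is not None:
            used.add(best_idx)
            updated[name] = detections[best_idx]
    return updated
```

Calling `track` once per frame with the previous frame's result carries the task-relevant object labels through the video. A real system would more likely track segmentation masks than boxes.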

Motion and Pose Retargeting

Description of how body motions and hand poses are separately retargeted from the demonstrations

Discussion on the adaptation of motions and poses to different objects and environments

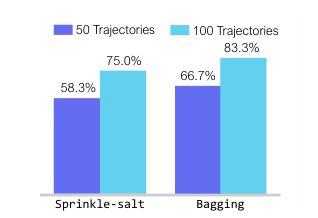
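The idea of retargeting body motions and hand poses separately, and adapting them to a new object placement, can be sketched as two independent functions. This is a simplified illustration under stated assumptions, not the authors' implementation: the arm is represented only by wrist waypoints, adaptation is a rigid translation by the object's displacement, and the finger mapping is a linear scale clamped to joint limits. All names and parameters are hypothetical.

```python
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def shift_wrist_trajectory(waypoints: Sequence[Vec3],
                           demo_object_pos: Vec3,
                           test_object_pos: Vec3) -> List[Vec3]:
    """Translate demonstrated wrist waypoints by the object's displacement.

    Assumption: the motion should keep the same position relative to the
    object, so every waypoint is moved by (test position - demo position).
    """
    offset = tuple(t - d for t, d in zip(test_object_pos, demo_object_pos))
    return [tuple(w + o for w, o in zip(wp, offset)) for wp in waypoints]


def retarget_finger_angles(human_angles: Sequence[float],
                           scale: float,
                           lower: float,
                           upper: float) -> List[float]:
    """Map human finger flexion angles to robot finger joint commands.

    Assumption: a linear scale followed by clamping to the robot's
    joint limits [lower, upper] (radians).
    """
    return [min(max(a * scale, lower), upper) for a in human_angles]
```

Keeping the two functions separate mirrors the split described above: the wrist path can be adapted to where the object is, while the hand pose is mapped on its own terms.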

Trajectory Rollout and Policy Training

Explanation of how rollout trajectories are generated from the demonstrations

Training of closed-loop visuomotor policies using the generated trajectories
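One way to turn rollout trajectories into training data for a closed-loop policy is behavioral cloning on (observation, action) pairs. The sketch below only builds such a dataset. It assumes that failed rollouts are discarded, and the `Rollout` structure and field names are hypothetical rather than taken from the paper.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Rollout:
    """One recorded execution (hypothetical structure)."""
    observations: List[list]  # one observation vector per time step
    actions: List[list]       # the action taken at each time step
    success: bool             # whether the rollout completed the task


def build_bc_dataset(rollouts: List[Rollout]) -> List[Tuple[list, list]]:
    """Collect (observation, action) pairs from successful rollouts only."""
    pairs: List[Tuple[list, list]] = []
    for rollout in rollouts:
        if not rollout.success:
            continue  # assumption: failures are excluded from training
        pairs.extend(zip(rollout.observations, rollout.actions))
    return pairs
```

The resulting pairs can be fed to any supervised learner that maps observations to actions, which is what makes the resulting policy closed-loop: it conditions on the current observation at every step instead of replaying a fixed trajectory.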

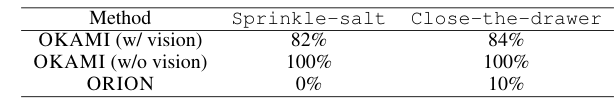

Evaluation metrics and results, including the 79.2% average success rate of the trained visuomotor policies
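For reference, an average success rate of this kind is typically computed per task and then averaged across tasks. The sketch below shows that arithmetic, assuming an unweighted mean over tasks (the paper may aggregate differently). The task names and outcomes in the usage note are made-up toy values, not results from the paper.

```python
from typing import Dict, Sequence


def success_rate(outcomes: Sequence[bool]) -> float:
    """Percentage of successful trials for a single task."""
    if not outcomes:
        raise ValueError("at least one trial is required")
    return 100.0 * sum(outcomes) / len(outcomes)


def average_success_rate(per_task: Dict[str, Sequence[bool]]) -> float:
    """Unweighted mean of the per-task success rates (assumed aggregation)."""
    rates = [success_rate(outcomes) for outcomes in per_task.values()]
    return sum(rates) / len(rates)
```

With toy data such as `{"task_a": [True, True, False, True], "task_b": [True, False]}`, the per-task rates are 75% and 50%, so the unweighted average is 62.5%.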

Results and Applications

Performance Analysis

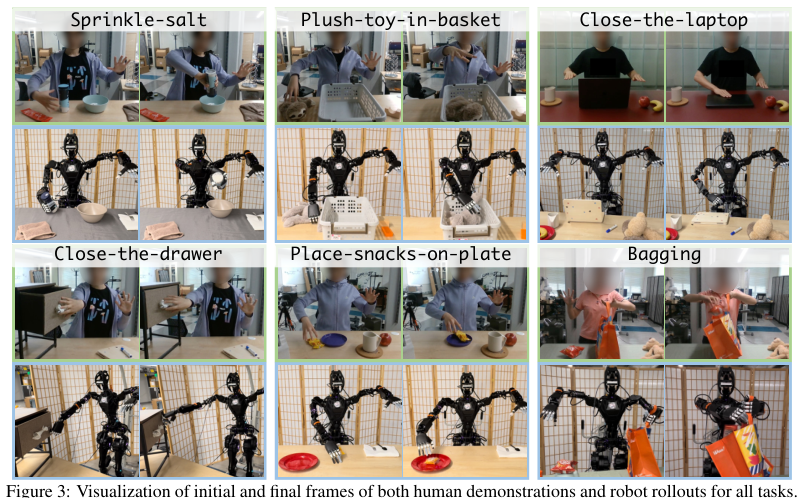

Detailed analysis of OKAMI's performance across various conditions and tasks

Comparison with existing methods in terms of generalization and efficiency

Case Studies

Presentation of specific examples where OKAMI was successfully applied

Discussion on the challenges overcome and the improvements achieved

Future Directions

Exploration of potential enhancements to the OKAMI method

Discussion on its applicability to more complex and varied manipulation tasks

Conclusion

Summary of OKAMI's contributions

Implications for the field of robotics and AI

Call for further research and development

Basic info

papers

computer vision and pattern recognition

robotics

machine learning

artificial intelligence