Meta-Learning for Speeding Up Large Model Inference in Decentralized Environments

Yuzhe Yang, Yipeng Du, Ahmad Farhan, Claudio Angione, Yue Zhao, Harry Yang, Fielding Johnston, James Buban, Patrick Colangelo·October 28, 2024

Summary

This paper presents MetaInf, a meta-learning-based framework for accelerating inference in decentralized AI systems. Instead of relying on fixed rules or manual tuning, MetaInf learns which inference acceleration technique to apply in a given setting. This streamlines decision-making and improves efficiency, and the authors report that it outperforms traditional selection methods. The work is set in the Nesa chain, a blockchain network for distributed model inference that integrates several components and prioritizes tasks based on demand and response time. The authors argue that meta-learning could substantially change how inference acceleration is done, making high-performance AI more accessible, more cost-effective, and more democratic.

Introduction

Background

Overview of decentralized AI systems

Importance of inference acceleration in AI systems

Objective

To explore how a meta-learning-based framework optimizes inference acceleration in decentralized AI systems

To highlight the potential of meta-learning in revolutionizing inference acceleration for democratic and cost-effective AI solutions

Meta-Learning in Decentralized AI Systems

Theoretical Foundations of Meta-Learning

Explanation of meta-learning concepts

How meta-learning adapts to different tasks and environments

Application in Inference Acceleration

Meta-learning's role in selecting the best acceleration techniques

Benefits over traditional methods in terms of efficiency and decision-making

The Nesa Chain: A Blockchain Network for Distributed Inference

Architecture of the Nesa Chain

Components of the Nesa chain

How it supports distributed model inference

Prioritization Mechanisms

Task prioritization based on demand and response time

Integration of blockchain for secure and transparent operations
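The summary says the Nesa chain prioritizes tasks by demand and response time but does not give the rule. Below is a minimal sketch of one way such a scheduler could work. It assumes a hypothetical score that multiplies a task's demand by its urgency, where urgency is the inverse of the remaining slack before a response-time target. `priority_score`, `TaskQueue`, and the task names are illustrative inventions, not Nesa's implementation.

```python
import heapq
from dataclasses import dataclass, field


@dataclass(order=True)
class QueuedTask:
    # heapq is a min-heap, so the negated score is stored to pop the highest score first.
    sort_key: float
    task_id: str = field(compare=False)


def priority_score(demand: float, expected_latency_s: float, latency_target_s: float) -> float:
    """Hypothetical rule: priority = demand * urgency, where urgency grows as the
    remaining slack before the response-time target shrinks."""
    slack = latency_target_s - expected_latency_s
    urgency = 1.0 / max(slack, 0.1)  # clamp so zero or negative slack stays finite
    return demand * urgency


class TaskQueue:
    """Priority queue of inference tasks ordered by priority_score (highest first)."""

    def __init__(self) -> None:
        self._heap: list[QueuedTask] = []

    def push(self, task_id: str, demand: float, expected_latency_s: float, latency_target_s: float) -> None:
        score = priority_score(demand, expected_latency_s, latency_target_s)
        heapq.heappush(self._heap, QueuedTask(-score, task_id))

    def pop(self) -> str:
        return heapq.heappop(self._heap).task_id

    def __len__(self) -> int:
        return len(self._heap)


# Usage with made-up tasks; "demand" could be, e.g., pending requests for a model.
queue = TaskQueue()
queue.push("chat", demand=5.0, expected_latency_s=0.8, latency_target_s=1.0)
queue.push("batch-summarize", demand=2.0, expected_latency_s=5.0, latency_target_s=30.0)
queue.push("embed", demand=10.0, expected_latency_s=0.2, latency_target_s=2.0)
order = [queue.pop() for _ in range(len(queue))]
print(order)  # → ['chat', 'embed', 'batch-summarize']
```

Clamping the slack keeps a task that has already missed its target at the top of the queue instead of causing a division by zero. A real scheduler might instead drop or re-route such tasks.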

MetaInf: Optimizing Inference Acceleration

Design and Implementation of MetaInf

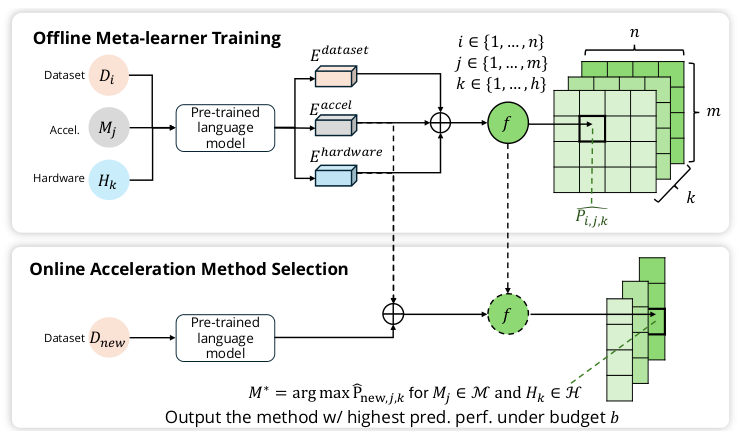

Overview of MetaInf as a meta-learning framework

How MetaInf selects and optimizes inference acceleration methods
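The summary describes MetaInf as learning which acceleration method to pick, but it does not specify the learner. The following is a minimal k-nearest-neighbor sketch of meta-learning for method selection in general. It assumes each past task is described by hypothetical meta-features (model size, batch size, prompt length) and a measured speedup per candidate method. The method names, features, and speedup numbers are made up for illustration. This is not MetaInf's actual model.

```python
import math
from statistics import mean

# Each history entry: (meta-features, {method: observed speedup}).
# Hypothetical meta-features: (model size in billions of params, batch size, prompt tokens).
# All entries are assumed to report speedups for the same set of methods.
History = list[tuple[tuple[float, ...], dict[str, float]]]


def make_scaler(rows: list[tuple[float, ...]]):
    """Return a function that min-max scales each feature to [0, 1]."""
    cols = list(zip(*rows))
    lo = [min(c) for c in cols]
    hi = [max(c) for c in cols]

    def scale(x: tuple[float, ...]) -> tuple[float, ...]:
        return tuple((v - l) / (h - l) if h > l else 0.0 for v, l, h in zip(x, lo, hi))

    return scale


def select_method(history: History, query: tuple[float, ...], k: int = 3) -> str:
    """Pick the method with the highest mean speedup among the k past tasks
    whose scaled meta-features are closest (Euclidean) to the query task."""
    scale = make_scaler([feats for feats, _ in history] + [query])
    q = scale(query)
    neighbors = sorted(history, key=lambda item: math.dist(scale(item[0]), q))[:k]
    methods = neighbors[0][1].keys()
    return max(methods, key=lambda m: mean(speedups[m] for _, speedups in neighbors))


# Made-up history for illustration only (not results from the paper).
history: History = [
    ((7, 1, 512), {"quantization": 1.4, "speculative_decoding": 2.1, "prefix_caching": 1.2}),
    ((7, 2, 1024), {"quantization": 1.5, "speculative_decoding": 1.9, "prefix_caching": 1.3}),
    ((70, 32, 4096), {"quantization": 2.2, "speculative_decoding": 1.1, "prefix_caching": 1.8}),
    ((65, 16, 2048), {"quantization": 2.0, "speculative_decoding": 1.2, "prefix_caching": 1.6}),
]

print(select_method(history, (8, 1, 768), k=2))     # → speculative_decoding
print(select_method(history, (68, 24, 3000), k=2))  # → quantization
```

Nearest-neighbor lookup is one of the simplest meta-learners for algorithm selection. A common alternative is a regressor that predicts speedup from meta-features and method identity. Which approach MetaInf actually uses should be checked against the paper.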

Performance and Accessibility

Improved accessibility of high-performance AI

Cost-effectiveness and democratization of AI solutions

Case Studies and Applications

Real-world Implementation

Examples of decentralized AI systems using MetaInf

Case studies demonstrating improved inference acceleration

Future Directions

Potential advancements in meta-learning for decentralized AI

Challenges and opportunities in scaling and integrating meta-learning frameworks

Conclusion

Summary of Key Findings

Implications for AI Research and Development

Call to Action for Further Exploration

Future Research Directions

Subject areas: distributed, parallel, and cluster computing; machine learning; artificial intelligence