LangTopo: Aligning Language Descriptions of Graphs with Tokenized Topological Modeling

Zhong Guan, Hongke Zhao, Likang Wu, Ming He, Jianpin Fan · June 19, 2024

Summary

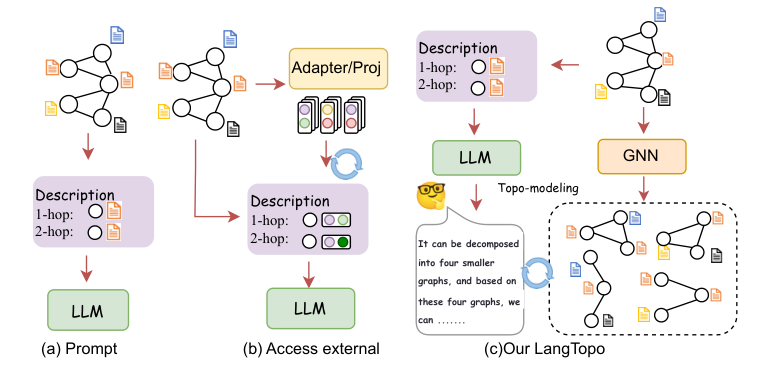

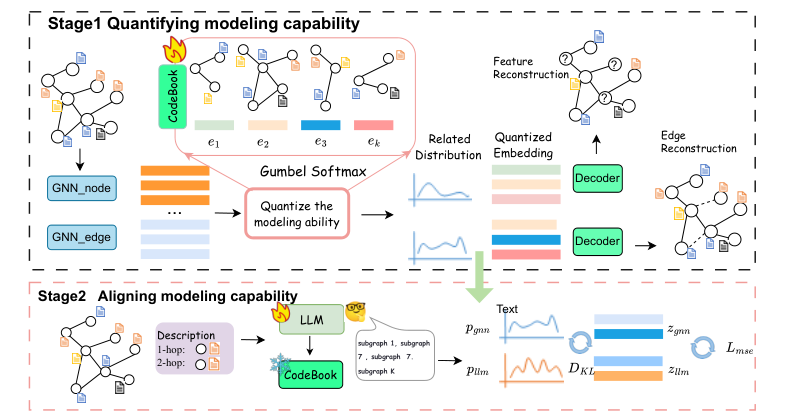

The paper "LangTopo: Aligning Language Descriptions of Graphs with Tokenized Topological Modeling" addresses the difficulty large language models (LLMs) have in understanding graph-structured data. It proposes a framework that bridges LLMs and graph neural networks (GNNs): LangTopo constructs a codebook that quantifies how a GNN models graph structure, then aligns the LLM with the GNN at the token level so that the LLM learns to model graph structure on its own. The authors report that this improves LLM performance on graph tasks and outperforms existing approaches on datasets including Cora, Pubmed, and Arxiv-2023. The comparison covers traditional GNNs, transformers, and LLM-enhanced methods, with LangTopo reported as the most accurate. The paper points to combining LLMs with GNNs as a promising direction for graph analysis and names generalizability and scalability as avenues for future work.
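To make the codebook idea in the summary concrete, here is a minimal sketch of how continuous embeddings can be mapped to discrete codebook tokens and how two models' token distributions can be compared. It is an illustration under stated assumptions, not the paper's implementation: the function names, the toy codebook, the softmax over negative distances, and the KL divergence used as an alignment loss are all hypothetical choices that this summary does not confirm.

```python
import math

def sq_dist(a, b):
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))

def nearest_code(vec, codebook):
    """Index of the codebook entry closest to vec (hard token assignment)."""
    return min(range(len(codebook)), key=lambda i: sq_dist(vec, codebook[i]))

def soft_assignment(vec, codebook, temperature=1.0):
    """Softmax over negative squared distances: a distribution over code indices."""
    logits = [-sq_dist(vec, c) / temperature for c in codebook]
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(l - m) for l in logits]
    total = sum(exps)
    return [e / total for e in exps]

def kl_divergence(p, q, eps=1e-12):
    """KL(p || q) for two discrete distributions over the same code indices."""
    return sum(pi * math.log((pi + eps) / (qi + eps)) for pi, qi in zip(p, q))

# Hypothetical toy values: a 3-entry codebook and one embedding per model.
codebook = [[0.0, 0.0], [1.0, 0.0], [0.0, 1.0]]
gnn_embedding = [0.9, 0.1]   # stand-in for a GNN's node representation
llm_embedding = [0.8, 0.3]   # stand-in for an LLM's representation of the same node

gnn_token = nearest_code(gnn_embedding, codebook)
p_gnn = soft_assignment(gnn_embedding, codebook)
p_llm = soft_assignment(llm_embedding, codebook)

# Assumed alignment objective: pull the LLM's code distribution toward the GNN's.
alignment_loss = kl_divergence(p_gnn, p_llm)
```

In this sketch the loss is zero only when both models assign the same distribution over codebook entries, which is one simple way to read "alignment at the token level".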

Introduction

Background

[Challenges with LLMs in graph understanding]

[Importance of GNNs for graph data analysis]

Objective

[Goal: Bridge the gap between LLMs and GNNs]

[Focus: Token-level alignment for independent graph structure learning]

Method

Data Collection

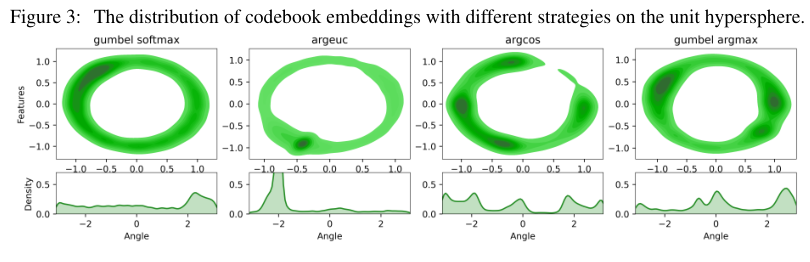

[Construction of a codebook for GNN graph structure representation]

[Selection of datasets: Cora, Pubmed, Arxiv-2023]

Data Preprocessing

[Tokenization of graph structures for LLM compatibility]

[Conversion of graph data into language-like descriptions]
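As a rough illustration of converting graph data into a language-like description, the sketch below turns a node's neighborhood into a sentence. The template wording, the `describe_node` helper, and the toy edge list and titles are hypothetical; the summary does not say which prompt format the paper uses.

```python
def describe_node(node, edges, titles):
    """Render one node's neighborhood as a plain-text sentence.

    edges is a list of undirected (u, v) pairs; titles maps node id to a label.
    """
    neighbors = {v for u, v in edges if u == node} | {u for u, v in edges if v == node}
    neighbors.discard(node)  # ignore self-loops
    if not neighbors:
        return f'Node {node} ("{titles[node]}") has no neighbors.'
    listed = ", ".join(f'{n} ("{titles[n]}")' for n in sorted(neighbors))
    return f'Node {node} ("{titles[node]}") is linked to: {listed}.'

# Hypothetical toy citation graph.
edges = [(0, 1), (0, 2), (1, 2)]
titles = {0: "Paper A", 1: "Paper B", 2: "Paper C"}

description = describe_node(0, edges, titles)
```

A description like this could be placed in an LLM prompt next to the node's own text, which is the general kind of input that LLM-based graph methods work with.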

LangTopo Framework

LLM-GNN Alignment

[Embedding GNN representations into token sequences]

[Fine-tuning LLMs on graph-related language descriptions]

Independent Learning

[Training LLMs to understand graph structure without GNNs]

[Evaluation on graph task performance]

Model Comparison

[Traditional GNNs vs. Transformers]

[LangTopo-enhanced methods vs. existing approaches]

[Performance analysis and superiority of LangTopo]

Experiments and Results

[Quantitative evaluation on benchmark datasets]

[Accuracy improvements over baseline models]

Discussion

Advantages and Limitations

[Strengths: Enhanced LLM performance, generalizability]

[Weaknesses: Scalability, potential trade-offs]

[Future directions]

Applications and Real-world Implications

[Potential use cases in various domains]

[Enhanced graph analysis and understanding]

Conclusion

[Summary of key findings]

[Implications for the integration of LLMs and GNNs]

[Future research opportunities]

Basic info

papers

computation and language

machine learning

artificial intelligence

