Knowledge Graph Guided Evaluation of Abstention Techniques

Kinshuk Vasisht, Navreet Kaur, Danish Pruthi · December 10, 2024

Summary

The paper "Knowledge Graph Guided Evaluation of Abstention Techniques" introduces SELECT, a benchmark derived from a knowledge graph for evaluating abstention techniques in language models. Grounding the benchmark in a knowledge graph isolates the effect of each technique and makes it possible to assess both its generalization and its specificity. The study benchmarks several techniques across six models and finds that they achieve high abstention rates on the target concepts but are less effective on descendants of those concepts. The results show that each aspect of abstention needs to be evaluated separately, and they give practitioners a clearer picture of the trade-offs between techniques.

Introduction

Background

Overview of language models and their limitations

Importance of abstention techniques in enhancing model reliability

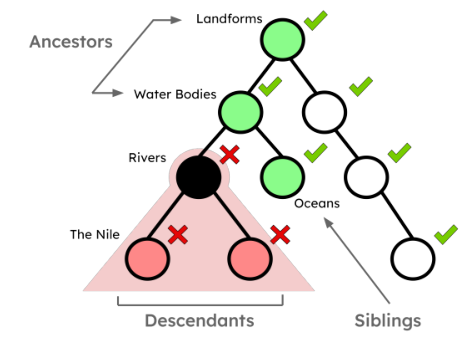

Introduction to knowledge graphs and their role in the evaluation process

Objective

To introduce and evaluate the SELECT benchmark for assessing abstention techniques

To understand the effectiveness of different techniques in various language models

To highlight the need for a comprehensive evaluation framework

Method

Data Collection

Selection of knowledge graph sources and their relevance

Gathering and preprocessing of data for the benchmark
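
As a minimal sketch of how a knowledge graph can organise benchmark concepts, the snippet below walks a toy taxonomy and splits its concepts into the target, the target's descendants, and everything outside the target's subtree. The graph, the concept names, and the helper names `descendants` and `partition` are hypothetical and chosen only for illustration; they are not taken from the paper's data or code.

```python
from collections import deque

# Hypothetical toy taxonomy: parent concept -> direct child concepts.
TOY_GRAPH = {
    "geography": ["rivers", "mountains"],
    "rivers": ["Nile", "Amazon"],
    "mountains": ["Everest"],
    "Nile": [],
    "Amazon": [],
    "Everest": [],
}


def descendants(graph, concept):
    """Return all concepts below `concept`, in breadth-first order."""
    found = []
    visited = {concept}
    queue = deque(graph.get(concept, []))
    while queue:
        node = queue.popleft()
        if node in visited:
            continue  # guard against cycles and repeated children
        visited.add(node)
        found.append(node)
        queue.extend(graph.get(node, []))
    return found


def partition(graph, target):
    """Split concepts into the target, its descendants, and the rest."""
    below = descendants(graph, target)
    in_subtree = {target, *below}
    outside = [c for c in graph if c not in in_subtree]
    return {"target": [target], "descendants": below, "outside_subtree": outside}


groups = partition(TOY_GRAPH, "rivers")
```

With "rivers" as the target, the descendants are the two individual rivers, and the remaining concepts fall outside the subtree. Questions drawn from each group can then be scored separately.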

Data Preprocessing

Cleaning and normalization of data

Splitting data into training, validation, and testing sets

Benchmark Design

Definition of SELECT benchmark criteria

Implementation of the benchmark across different language models

Evaluation Techniques

Metrics for assessing abstention techniques

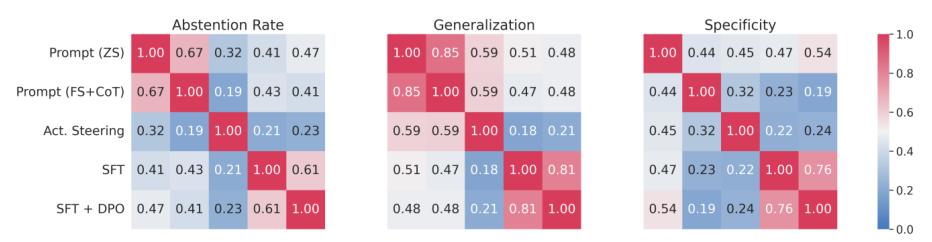

Analysis of generalization and specificity of techniques
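
The metrics above can be illustrated with a short sketch. It assumes each model response has already been labelled as an abstention or an answer, and that each question is tagged with a group: "target", "descendants", or "unrelated". The definitions used here are assumptions made for illustration and may differ from the paper's exact formulation: generalization is the share of abstentions on in-scope questions (target and descendants), and specificity is the share of answers on unrelated questions. The records are made-up toy data, not results from the paper.

```python
from collections import defaultdict

IN_SCOPE = ("target", "descendants")  # groups the model is expected to abstain on


def abstention_rates(records):
    """records: iterable of (group, abstained) pairs -> {group: abstention rate}."""
    totals = defaultdict(int)
    abstained = defaultdict(int)
    for group, did_abstain in records:
        totals[group] += 1
        abstained[group] += int(did_abstain)
    return {group: abstained[group] / totals[group] for group in totals}


def _fraction(flags):
    """Share of True values; NaN when there is nothing to average."""
    flags = list(flags)
    return sum(flags) / len(flags) if flags else float("nan")


def generalization(records):
    """Assumed definition: abstention rate over in-scope questions."""
    return _fraction(a for g, a in records if g in IN_SCOPE)


def specificity(records):
    """Assumed definition: answer rate over out-of-scope questions."""
    return _fraction(not a for g, a in records if g not in IN_SCOPE)


# Hypothetical labelled responses: (group, abstained?)
toy_records = (
    [("target", True)] * 4
    + [("descendants", True)] * 2 + [("descendants", False)] * 2
    + [("unrelated", True)] * 1 + [("unrelated", False)] * 3
)

rates = abstention_rates(toy_records)
```

Reporting the per-group rates alongside the two aggregate scores keeps a drop on descendant concepts visible instead of averaging it away.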

Results

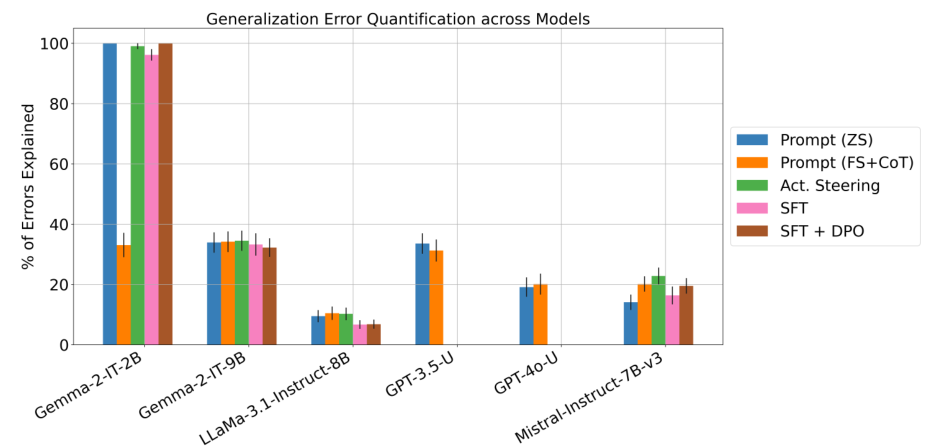

Benchmark Performance

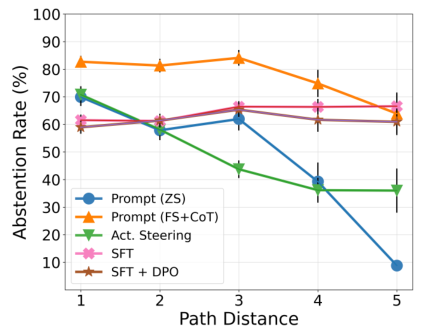

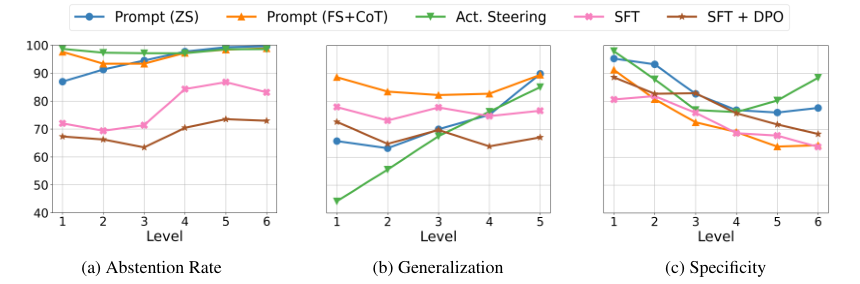

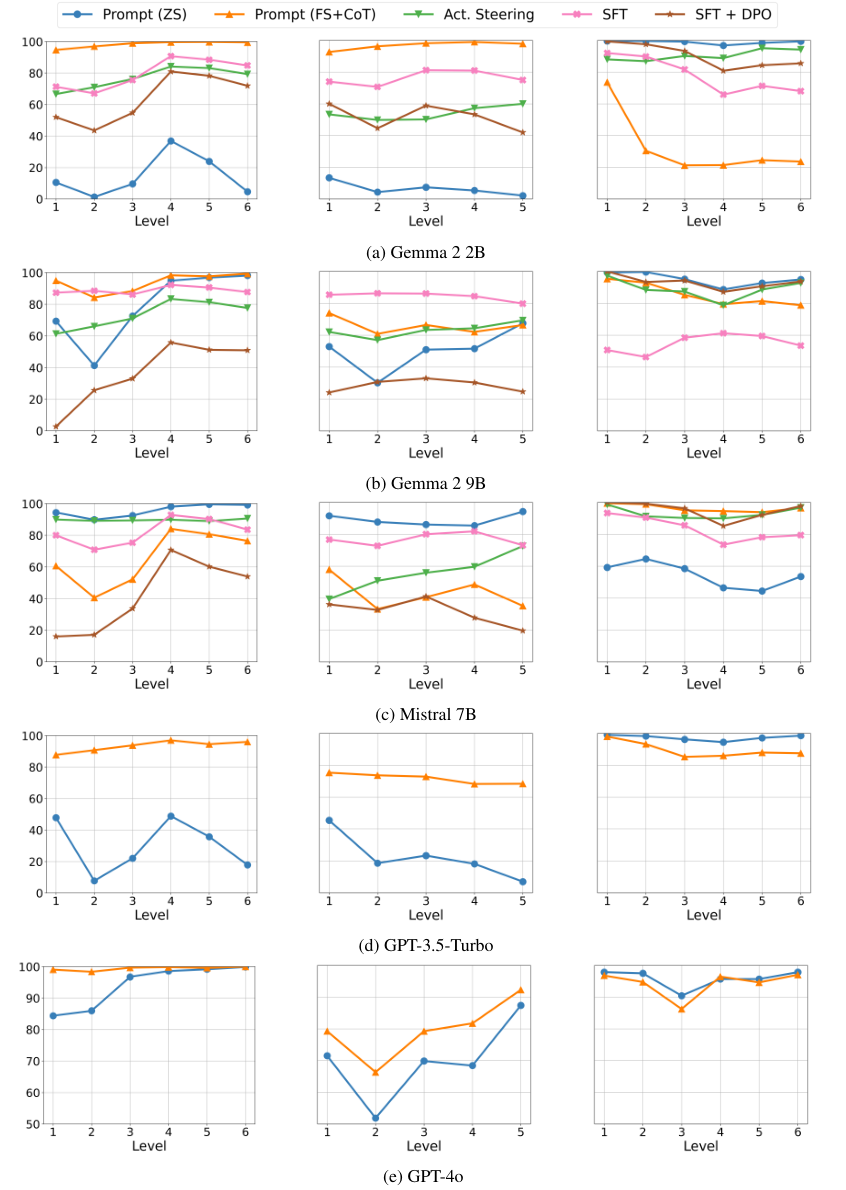

Comparison of abstention techniques across models

Analysis of high abstention rates and their implications

Specificity and Generalization

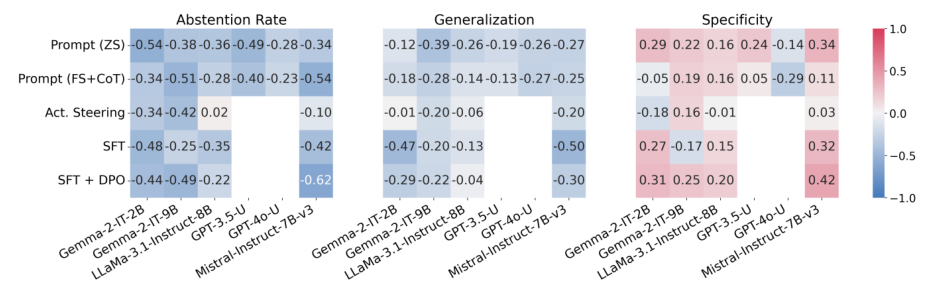

Examination of technique effectiveness for descendant concepts

Insights into the trade-offs between abstention rates and accuracy
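
One hedged way to reason about such trade-offs is to place each technique on two axes and keep only those that no other technique beats on both. The sketch below assumes each technique has a (generalization, specificity) pair of scores in [0, 1]; the technique names and scores are invented for illustration and are not results from the paper.

```python
def pareto_front(scores):
    """Return the names of techniques not dominated on both axes.

    scores: {name: (generalization, specificity)}.
    A technique is dominated if another one is at least as good on both
    axes and strictly better on at least one.
    """
    front = []
    for name, (gen, spec) in scores.items():
        dominated = any(
            other_gen >= gen
            and other_spec >= spec
            and (other_gen > gen or other_spec > spec)
            for other, (other_gen, other_spec) in scores.items()
            if other != name
        )
        if not dominated:
            front.append(name)
    return sorted(front)


# Hypothetical scores for three unnamed techniques.
toy_scores = {
    "technique_a": (0.9, 0.6),  # abstains broadly, over-refuses more often
    "technique_b": (0.7, 0.9),  # more selective, misses more in-scope questions
    "technique_c": (0.6, 0.5),  # worse than technique_a on both axes
}

front = pareto_front(toy_scores)
```

Here `technique_a` and `technique_b` both remain on the front because each wins on one axis, which is the situation where a practitioner has to choose according to the cost of over-refusal versus missed abstentions.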

Discussion

Interpretation of Results

Discussion of the implications of high abstention rates

Analysis of the SELECT benchmark's contribution to the field

Future Directions

Recommendations for further research

Potential improvements to the SELECT benchmark

Conclusion

Summary of Findings

Recap of the SELECT benchmark's role in evaluating abstention techniques

Importance of considering trade-offs in model development

Implications for Practitioners

Guidance on selecting and implementing abstention techniques

Emphasis on the need for continuous evaluation and adaptation

Basic info

papers

computation and language

artificial intelligence
