Incremental Learning of Retrievable Skills For Efficient Continual Task Adaptation

Daehee Lee, Minjong Yoo, Woo Kyung Kim, Wonje Choi, Honguk Woo · October 30, 2024

Summary

IsCiL is an adapter-based Continual Imitation Learning framework that addresses the limited knowledge sharing of existing approaches in non-stationary environments. It incrementally learns shareable skills from varied demonstrations, which enables efficient task adaptation. Demonstrations are mapped into a state embedding space, and the matching skills are retrieved from a prototype-based memory. The approach performs robustly on complex tasks and shows promise for lifelong agents in dynamic environments.

Introduction

Background

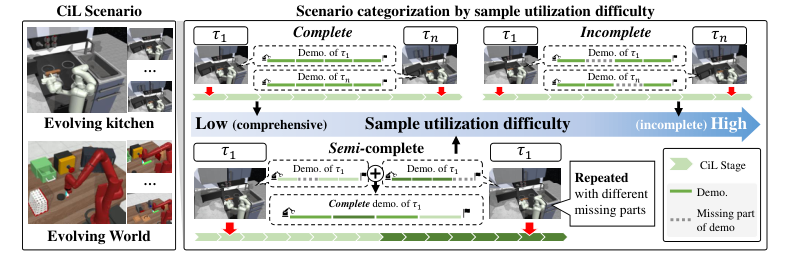

Overview of Continual Imitation Learning (CiL) and its challenges in non-stationary environments

Explanation of the limitations of existing CiL frameworks in knowledge sharing

Objective

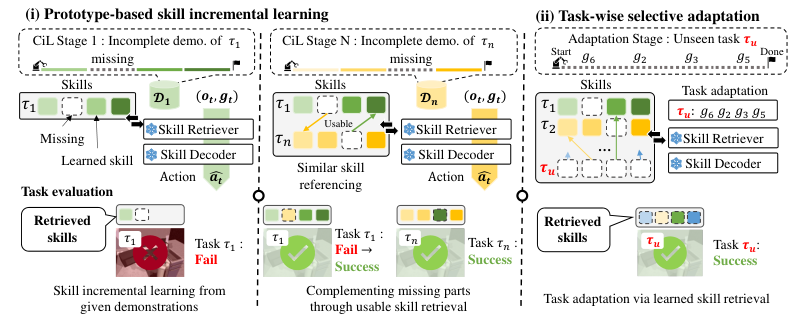

Aim of IsCiL in addressing knowledge sharing limitations and enabling efficient task adaptation

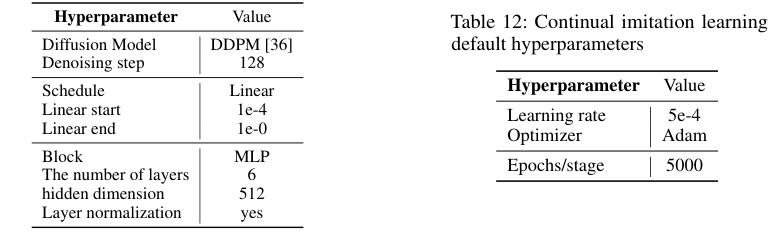

Method

Data Collection

Techniques for gathering diverse demonstrations for skill learning

Data Preprocessing

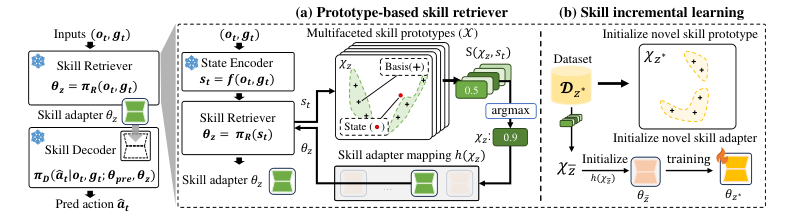

Methods for transforming raw demonstrations into state embeddings

Skill Representation and Retrieval

Prototype-based memory for storing and retrieving skills

Mapping of demonstrations into state embedding space for skill identification
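The retrieval idea above can be illustrated with a minimal sketch. It is not the authors' implementation: the class `PrototypeMemory`, the choice of one mean-vector prototype per demonstration, the cosine similarity measure, and the skill names are all assumptions made for illustration. In the framework described here, the retrieved skill would correspond to an adapter; the sketch returns only a skill identifier.

```python
import math
from dataclasses import dataclass, field


def cosine(a, b):
    """Cosine similarity between two equal-length vectors (0.0 if either is zero)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


@dataclass
class PrototypeMemory:
    """Hypothetical memory mapping a skill id to a list of prototype vectors."""
    prototypes: dict = field(default_factory=dict)

    def add_skill(self, skill_id, state_embeddings):
        # Assumption: summarize one demonstration by the mean of its state
        # embeddings and append it as a prototype. Existing skills are
        # untouched, so skills can be added incrementally.
        dim = len(state_embeddings[0])
        count = len(state_embeddings)
        mean = [sum(e[i] for e in state_embeddings) / count for i in range(dim)]
        self.prototypes.setdefault(skill_id, []).append(mean)

    def retrieve(self, state_embedding):
        # Return the skill whose closest prototype is most similar to the
        # query state embedding, together with that similarity.
        best_id, best_sim = None, -math.inf
        for skill_id, protos in self.prototypes.items():
            for proto in protos:
                sim = cosine(state_embedding, proto)
                if sim > best_sim:
                    best_id, best_sim = skill_id, sim
        return best_id, best_sim


# Usage with made-up 2-D embeddings and hypothetical skill names.
memory = PrototypeMemory()
memory.add_skill("open_drawer", [[1.0, 0.0], [0.9, 0.1]])
memory.add_skill("turn_knob", [[0.0, 1.0], [0.1, 0.9]])
skill, similarity = memory.retrieve([0.8, 0.2])
print(skill)
```

Keeping several prototypes per skill, rather than a single vector, lets one skill cover states that are far apart in embedding space; whether the mean is the right summary depends on the embedding model and is left open here.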

Incremental Learning and Skill Adaptation

Skill Acquisition

Process of learning shareable skills from demonstrations

Skill Sharing

Mechanisms for sharing skills among tasks and agents

Task Adaptation

Strategies for adapting learned skills to new or changed tasks

Performance Evaluation

Complex Task Scenarios

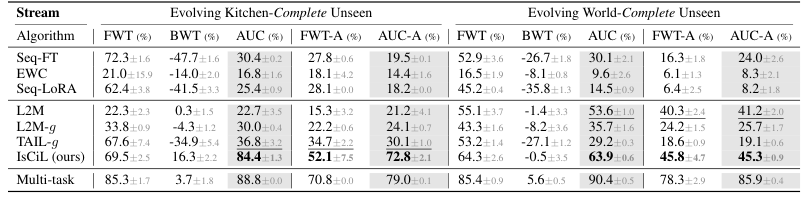

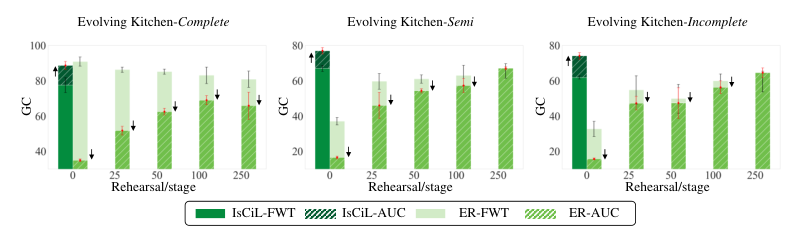

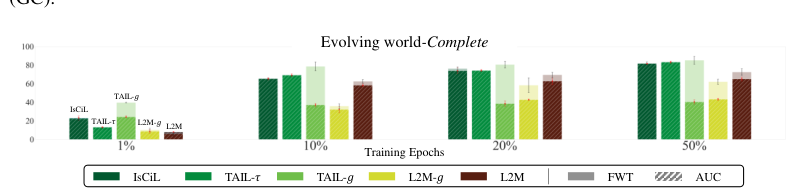

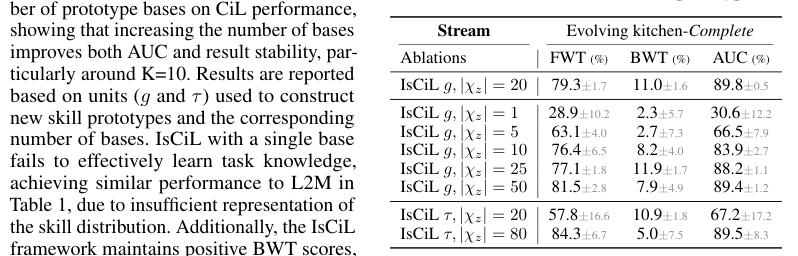

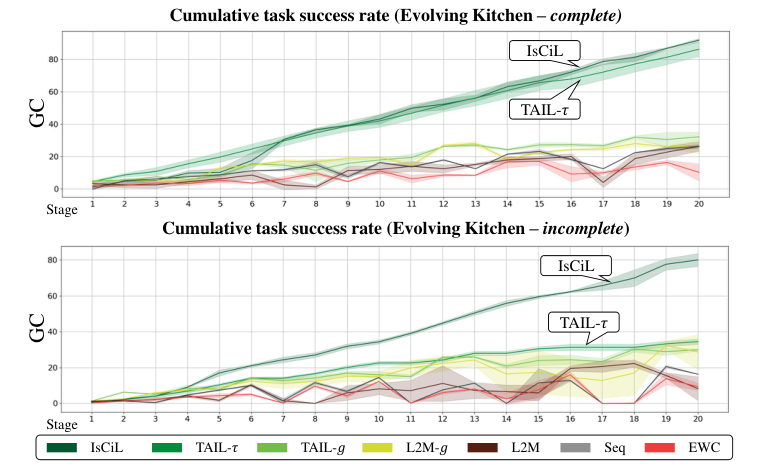

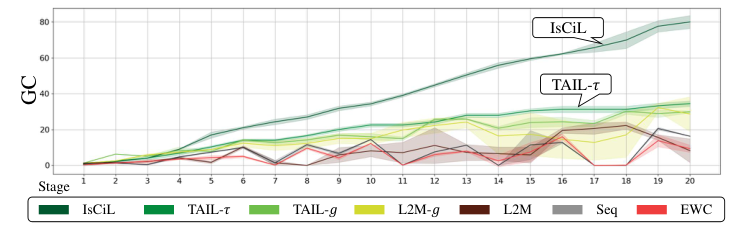

Demonstration of IsCiL's performance in complex, non-stationary environments

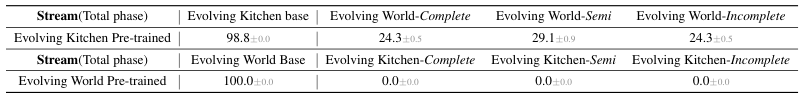

Comparison with Baselines

Metrics for evaluating IsCiL against existing CiL frameworks

Robustness Analysis

Assessment of IsCiL's ability to maintain performance under varying conditions

Conclusion

Future Directions

Potential enhancements and extensions of IsCiL for lifelong learning agents

Impact on Continual Learning

Discussion on the broader implications for continual learning in dynamic environments

Basic info

papers

machine learning

artificial intelligence

Insights

What is the main focus of IsCiL, the Continual Imitation Learning framework?

How does IsCiL address the limitations of knowledge sharing in non-stationary environments?

What are the demonstrated outcomes of using IsCiL in complex tasks and dynamic environments?

What mechanism does IsCiL use to map demonstrations into state embedding space?