Imitation from Diverse Behaviors: Wasserstein Quality Diversity Imitation Learning with Single-Step Archive Exploration

Xingrui Yu, Zhenglin Wan, David Mark Bossens, Yueming Lyu, Qing Guo, Ivor W. Tsang · November 11, 2024

Summary

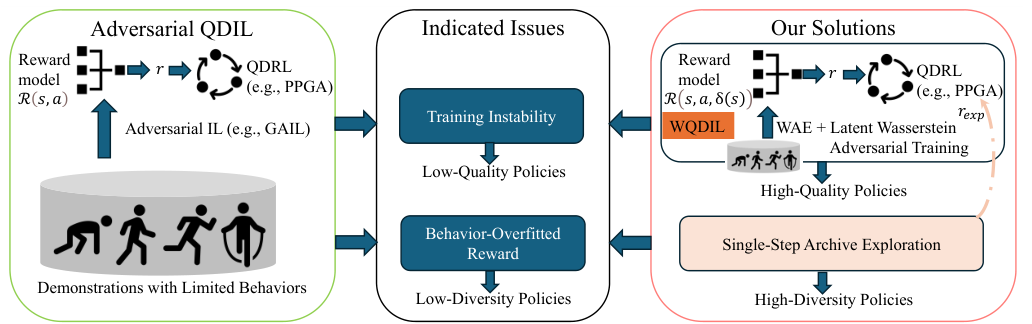

WQDIL (Wasserstein Quality Diversity Imitation Learning) tackles the challenge of learning diverse, high-performing behaviors from limited demonstrations. It stabilizes imitation learning in quality diversity settings with latent adversarial training built on a Wasserstein Auto-Encoder (WAE), and it mitigates behavior overfitting with a measure-conditioned reward function that uses single-step archive exploration. Empirically, WQDIL outperforms state-of-the-art imitation learning methods and reaches near-expert or beyond-expert quality diversity performance on MuJoCo continuous control tasks.

Introduction

Background

Overview of quality diversity and its importance in machine learning

Challenges in learning diverse behaviors from limited demonstrations

Objective

Objective of WQDIL: addressing the challenges in quality diversity learning

Aim to achieve high-performance behaviors with limited demonstrations

Method

Latent Adversarial Training

Explanation of the latent adversarial training method

Utilization of Wasserstein Auto-Encoder (WAE) for stability in quality diversity settings
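The outline names a Wasserstein Auto-Encoder as the source of stability but gives no formulas. As a rough illustration of the general WAE idea (reconstruction cost plus a penalty that pulls encoded latents toward a prior), the sketch below uses the MMD form of the penalty from the general WAE literature. It is not the paper's latent adversarial objective, which would use a discriminator in latent space. The names `rbf_kernel`, `mmd2`, `wae_loss`, `bandwidth`, and `lam` are hypothetical.

```python
import numpy as np

def rbf_kernel(a, b, bandwidth=1.0):
    """Gaussian kernel matrix between the rows of a and the rows of b."""
    sq = (np.sum(a**2, axis=1)[:, None]
          + np.sum(b**2, axis=1)[None, :]
          - 2.0 * a @ b.T)
    return np.exp(-np.maximum(sq, 0.0) / (2.0 * bandwidth**2))

def mmd2(z_enc, z_prior, bandwidth=1.0):
    """Unbiased estimate of squared MMD between encoded latents and prior samples."""
    n, m = len(z_enc), len(z_prior)
    k_ee = rbf_kernel(z_enc, z_enc, bandwidth)
    k_pp = rbf_kernel(z_prior, z_prior, bandwidth)
    k_ep = rbf_kernel(z_enc, z_prior, bandwidth)
    # Drop the diagonal (self-similarity) terms for the unbiased estimator.
    term_ee = (k_ee.sum() - np.trace(k_ee)) / (n * (n - 1))
    term_pp = (k_pp.sum() - np.trace(k_pp)) / (m * (m - 1))
    return term_ee + term_pp - 2.0 * k_ep.mean()

def wae_loss(x, x_recon, z_enc, z_prior, lam=10.0):
    """Assumed WAE-style objective: squared reconstruction error + lam * MMD penalty."""
    recon = np.mean(np.sum((x - x_recon) ** 2, axis=1))
    return recon + lam * mmd2(z_enc, z_prior)

if __name__ == "__main__":
    rng = np.random.default_rng(0)
    latents = rng.normal(size=(128, 4))        # stand-in for encoder outputs
    prior = rng.normal(size=(128, 4))          # samples from a standard normal prior
    print("matched latents:", mmd2(latents, prior))
    print("shifted latents:", mmd2(latents + 3.0, prior))
```

The penalty stays near zero when the encoded latents already resemble the prior and grows when they drift away, which is the regularizing behavior a WAE relies on.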

Measure-Conditioned Reward Function

Description of the measure-conditioned reward function

Role in mitigating behavior overfitting through single-step archive exploration
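The outline does not give the form of the measure-conditioned reward, so the following is only an illustrative sketch and should not be read as the paper's formula. It assumes (1) a discriminator that scores a state-action pair together with its single-step behavior measure and returns a logit, (2) measures normalized to [0, 1], and (3) a count-based bonus for rarely visited measure cells as a stand-in for archive exploration. `SingleStepArchive`, `measure_conditioned_reward`, and `beta` are hypothetical names.

```python
import numpy as np

class SingleStepArchive:
    """Grid of visit counts over single-step measures (assumed to lie in [0, 1])."""

    def __init__(self, bins_per_dim, measure_dim):
        self.bins = bins_per_dim
        self.counts = np.zeros((bins_per_dim,) * measure_dim, dtype=np.int64)

    def cell(self, measure):
        """Map a measure vector to the index of its grid cell."""
        m = np.clip(np.asarray(measure, dtype=float), 0.0, 1.0)
        idx = np.minimum((m * self.bins).astype(int), self.bins - 1)
        return tuple(idx)

    def bonus(self, measure):
        """Return a bonus that decays with visits to the cell, then record the visit."""
        c = self.cell(measure)
        b = 1.0 / np.sqrt(1.0 + self.counts[c])
        self.counts[c] += 1
        return float(b)

def measure_conditioned_reward(disc_logit, measure, archive, beta=0.5):
    """Assumed reward: GAIL-style imitation term plus an exploration bonus.

    disc_logit is the (hypothetical) discriminator output for (state, action, measure).
    -log(1 - sigmoid(logit)) equals softplus(logit), computed stably with logaddexp.
    """
    imitation = np.logaddexp(0.0, disc_logit)
    return float(imitation + beta * archive.bonus(measure))

if __name__ == "__main__":
    archive = SingleStepArchive(bins_per_dim=4, measure_dim=2)
    for step in range(3):
        print(step, measure_conditioned_reward(0.0, [0.1, 0.9], archive))
```

Repeated visits to the same measure cell earn a shrinking bonus, so a policy trained on this signal has less incentive to collapse onto the few behaviors covered by the demonstrations.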

Data Collection and Preprocessing

Methods for data collection

Data preprocessing techniques to prepare for the training process

Implementation

Architecture of WQDIL

Detailed explanation of the WQDIL architecture

Components and their functions in the learning process

Training Process

Overview of the training methodology

Steps involved in the latent adversarial training and reward function application

Results

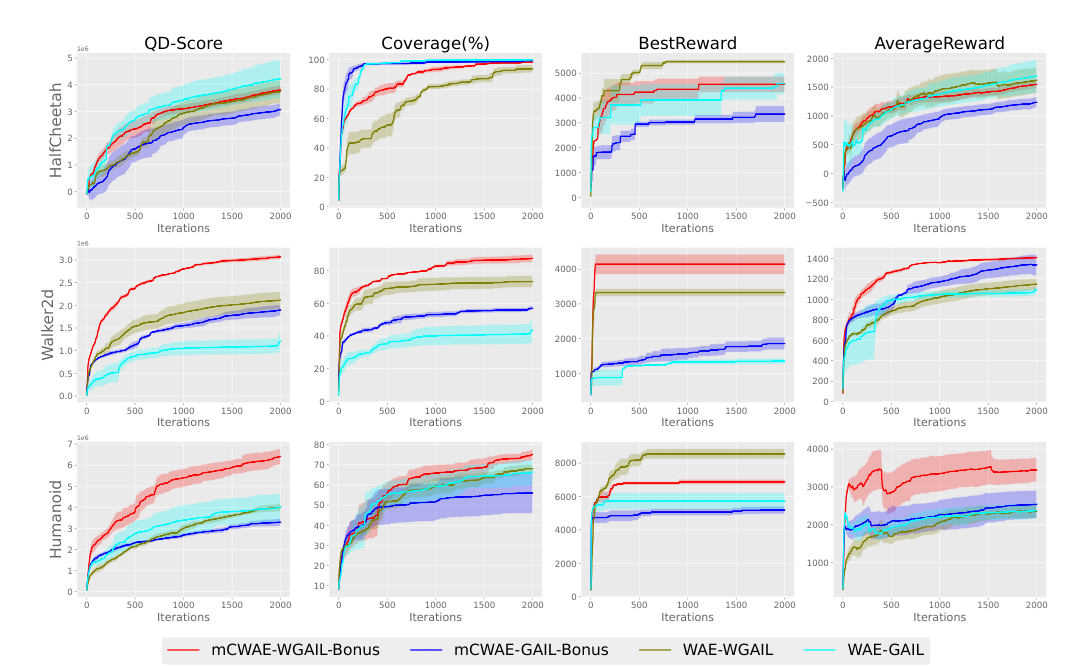

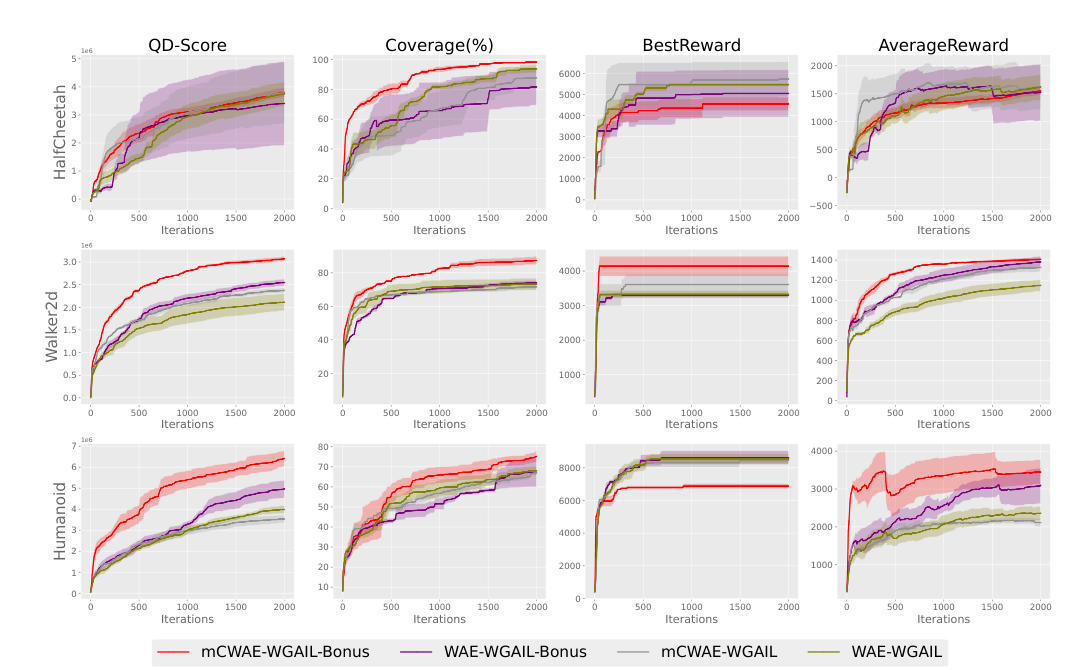

Empirical Evaluation

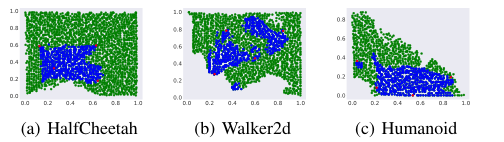

Description of the experimental setup

Metrics used for evaluating WQDIL's performance
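The outline does not list the metrics, so as a hedged illustration the sketch below computes quantities commonly reported in the quality diversity literature (QD-score, coverage, best and mean fitness) from an archive grid. It assumes empty cells are marked with NaN and fitness values are non-negative; `qd_metrics` is a hypothetical helper, not code from the paper.

```python
import numpy as np

def qd_metrics(archive_fitness):
    """Summarize an archive given as an array of fitness values, NaN for empty cells."""
    f = np.asarray(archive_fitness, dtype=float)
    filled = ~np.isnan(f)
    if not filled.any():
        return {"qd_score": 0.0, "coverage": 0.0,
                "best": float("nan"), "mean": float("nan")}
    values = f[filled]
    return {
        "qd_score": float(values.sum()),      # total fitness over filled cells
        "coverage": float(filled.mean()),     # fraction of cells that are filled
        "best": float(values.max()),          # fitness of the best elite
        "mean": float(values.mean()),         # average fitness of the elites
    }

if __name__ == "__main__":
    grid = np.array([[1.0, np.nan], [3.0, np.nan]])
    print(qd_metrics(grid))
```

QD-score rewards both filling more cells and filling them with better policies, which is why it is often used as a single summary of quality and diversity together.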

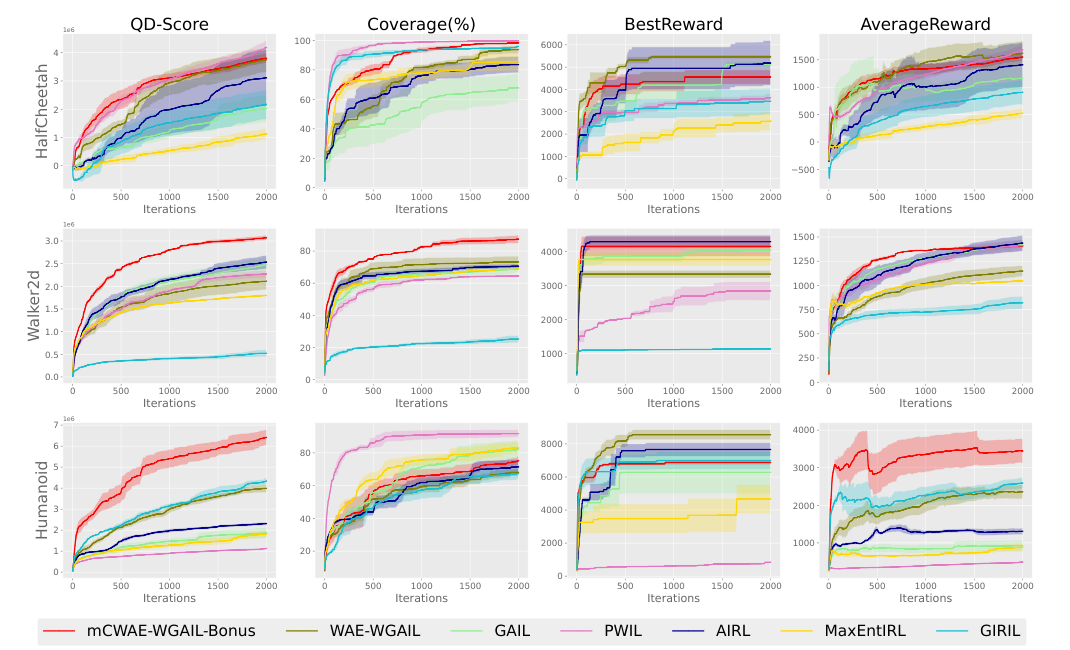

Comparison with State-of-the-Art Methods

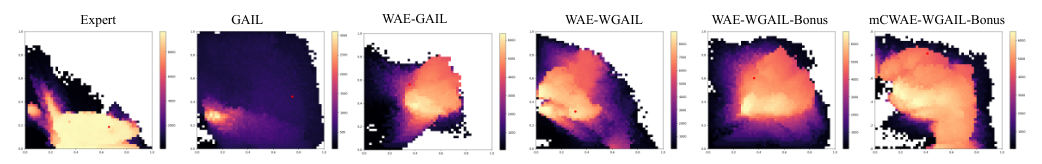

Detailed comparison of WQDIL with other imitation learning methods

Results demonstrating WQDIL's superiority in achieving near-expert or beyond-expert quality diversity performance

Conclusion

Summary of Contributions

Recap of WQDIL's advancements in quality diversity learning

Future Work

Potential areas for further research and development

Impact and Applications

Discussion on the broader implications of WQDIL in various fields

Insights

What are the empirical outcomes of using WQDIL on MuJoCo continuous control tasks compared to state-of-the-art imitation learning methods?

What mechanism does WQDIL employ to prevent behavior overfitting in quality diversity settings?

How does WQDIL utilize the Wasserstein Auto-Encoder (WAE) in its latent adversarial training method?

What is the main focus of WQDIL in the context of learning from demonstrations?