Human-Calibrated Automated Testing and Validation of Generative Language Models

Agus Sudjianto, Aijun Zhang, Srinivas Neppalli, Tarun Joshi, Michal Malohlava · November 25, 2024

Summary

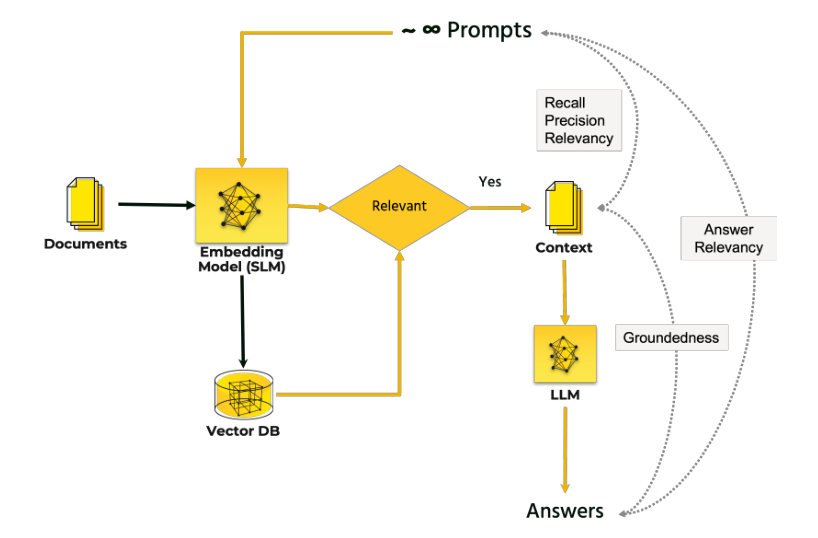

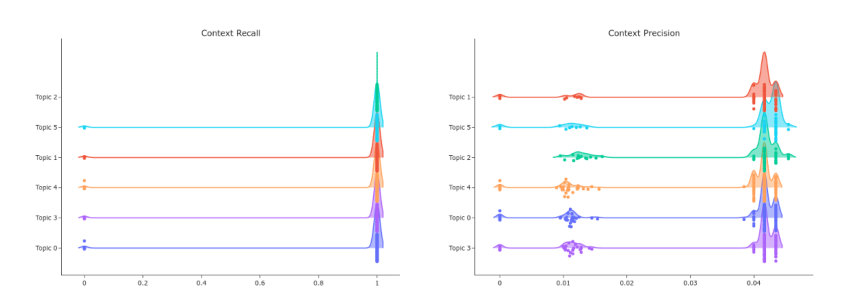

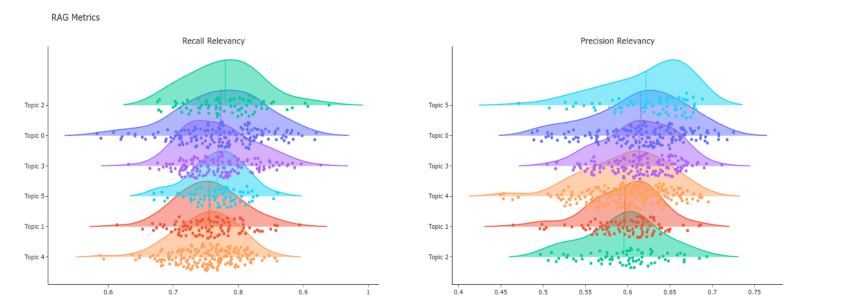

The paper introduces HCAT (Human-Calibrated Automated Testing), a framework for evaluating generative language models (GLMs), particularly Retrieval-Augmented Generation (RAG) systems deployed in critical sectors such as banking. It tackles the difficulty of assessing models whose outputs are open-ended and whose quality is partly subjective. To do this, it combines automated test generation, embedding-based metrics, and a two-stage calibration approach that calibrates automated evaluations against human judgments. The framework also includes robustness testing and targeted weakness identification. Together, these components provide a scalable, transparent, and interpretable method for GLM assessment, which is crucial for applications that require accuracy, transparency, and regulatory compliance.
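The summary does not say how the embedding-based metrics or the calibration are computed. As a rough illustration only, the sketch below scores a generated answer by the cosine similarity between its embedding and a reference answer's embedding. It then picks a pass/fail cutoff that best agrees with human labels. The function names (`cosine_similarity`, `score_answer`, `calibrate_threshold`), the toy vectors, the 0.8 default threshold, and the single-threshold calibration are all hypothetical assumptions. This is a simplified stand-in and not HCAT's two-stage calibration procedure.

```python
import math


def cosine_similarity(u, v):
    """Cosine similarity between two equal-length embedding vectors."""
    if len(u) != len(v):
        raise ValueError("embeddings must have the same dimension")
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0.0 or norm_v == 0.0:
        raise ValueError("cannot compare a zero vector")
    return dot / (norm_u * norm_v)


def score_answer(answer_emb, reference_emb, threshold=0.8):
    """Hypothetical check: pass the answer if it is close enough to the reference."""
    sim = cosine_similarity(answer_emb, reference_emb)
    return sim, sim >= threshold


def calibrate_threshold(scores, human_labels):
    """Hypothetical calibration: choose the cutoff that maximizes agreement
    between automated pass/fail decisions and human pass/fail labels."""
    best_t, best_acc = None, -1.0
    for t in sorted(set(scores)):
        preds = [s >= t for s in scores]
        acc = sum(p == h for p, h in zip(preds, human_labels)) / len(scores)
        if acc > best_acc:  # strict '>' keeps the lowest cutoff among ties
            best_t, best_acc = t, acc
    return best_t, best_acc


# Toy 3-dimensional embeddings; real ones would come from an embedding model.
reference = [1.0, 0.0, 1.0]
answer = [1.0, 0.0, 0.0]
sim, passed = score_answer(answer, reference)
print(round(sim, 3), passed)

# Toy similarity scores paired with human judgments of acceptability.
scores = [0.95, 0.9, 0.7, 0.6, 0.85]
labels = [True, True, False, False, True]
print(calibrate_threshold(scores, labels))
```

In this toy run the answer's similarity is about 0.707, so it fails the default 0.8 cutoff, and the calibration step selects 0.85 as the cutoff that agrees with all five human labels. Keeping the threshold as a parameter is what lets human-labeled data, rather than a fixed guess, decide where the pass/fail boundary sits.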
