GE2E-KWS: Generalized End-to-End Training and Evaluation for Zero-shot Keyword Spotting

Pai Zhu, Jacob W. Bartel, Dhruuv Agarwal, Kurt Partridge, Hyun Jin Park, Quan Wang·October 22, 2024

Summary

GE2E-KWS is a custom keyword spotting (KWS) framework that uses speech embeddings for both enrollment and serving. Training with the generalized end-to-end (GE2E) loss improves convergence stability and training speed, and the approach surpasses triplet loss methods while keeping a low memory footprint that allows continuous on-device operation. The paper applies the GE2E loss to KWS to address limitations of previous methods and improve efficiency. Its GE2E conformer model, optimized for both accuracy and size, outperforms large ASR encoders, achieving a 23.6% improvement in AUC over a 7.5GB ASR encoder, and supports zero-shot detection of new keywords. Models are compared using DET curves, AUC, and EER, with the GE2E conformer showing the best performance.

Introduction

Background

Overview of keyword spotting (KWS) systems

Importance of speech embeddings in KWS

Challenges in traditional KWS approaches

Objective

To present GE2E-KWS, a novel framework for KWS using speech embeddings

Highlight improvements in convergence stability, training speed, and memory footprint

Demonstrate superior performance compared to triplet loss approaches

Method

Data Collection

Techniques for collecting speech data for enrollment and serving

Data Preprocessing

Methods for preparing data for the GE2E-KWS framework

Importance of preprocessing in enhancing model performance
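GE2E-style training is usually fed batches of N classes with M utterances each; for KWS the classes would be keywords. The sketch below shows one way to sample such an N x M batch from keyword-labeled utterances. It is an illustration under assumptions, not the paper's pipeline: the input format (a list of `(keyword, utterance_id)` pairs) and the function name `make_ge2e_batch` are hypothetical.

```python
import random
from collections import defaultdict


def make_ge2e_batch(utterances, n_keywords, m_per_keyword, seed=None):
    """Sample an N x M batch for GE2E-style training (hypothetical helper).

    `utterances` is a list of (keyword, utterance_id) pairs. Returns a list of
    n_keywords (keyword, [utterance_id, ...]) rows, each with m_per_keyword
    distinct utterances of that keyword.
    """
    rng = random.Random(seed)
    by_keyword = defaultdict(list)
    for keyword, utt_id in utterances:
        by_keyword[keyword].append(utt_id)
    # Only keywords with at least M examples can fill a complete row.
    eligible = sorted(k for k, ids in by_keyword.items() if len(ids) >= m_per_keyword)
    if len(eligible) < n_keywords:
        raise ValueError("not enough keywords with m_per_keyword utterances")
    chosen = rng.sample(eligible, n_keywords)
    return [(k, rng.sample(by_keyword[k], m_per_keyword)) for k in chosen]
```

Requiring every row to hold exactly M utterances keeps the batch rectangular, which is what lets the loss compute one centroid per keyword from equally sized groups.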

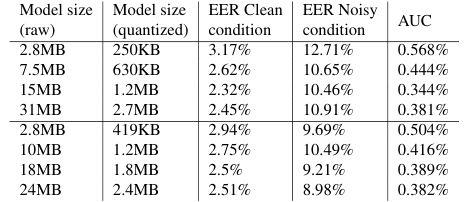

GE2E Conformer Model

Description of the GE2E conformer model

How it surpasses large ASR encoders in performance and size

Explanation of zero-shot detection capability for new keywords
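Zero-shot detection follows from the embedding design: a new keyword needs only a few enrollment utterances, not retraining. A minimal sketch, assuming enrollment averages L2-normalized embeddings into a centroid and serving compares a query embedding to that centroid with cosine similarity against a threshold. The encoder is stubbed out (embeddings are plain vectors), and the function names and the 0.7 threshold are hypothetical; the paper's exact enrollment and scoring rule may differ.

```python
import math


def l2_normalize(v):
    norm = math.sqrt(sum(x * x for x in v))
    if norm == 0.0:
        raise ValueError("zero vector cannot be normalized")
    return [x / norm for x in v]


def enroll(embeddings):
    """Build a keyword template by averaging normalized enrollment embeddings."""
    normed = [l2_normalize(e) for e in embeddings]
    centroid = [sum(col) / len(normed) for col in zip(*normed)]
    return l2_normalize(centroid)


def cosine(a, b):
    return sum(x * y for x, y in zip(l2_normalize(a), l2_normalize(b)))


def detect(query_embedding, template, threshold=0.7):
    """Return (is_keyword, score); the threshold is a hypothetical operating point."""
    score = cosine(query_embedding, template)
    return score >= threshold, score
```

For example, after `template = enroll([[1.0, 0.0], [1.0, 0.1]])`, the call `detect([1.0, 0.05], template)` accepts while `detect([0.0, 1.0], template)` rejects. Because the template is just a stored vector, adding a keyword costs a few forward passes and a small amount of memory.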

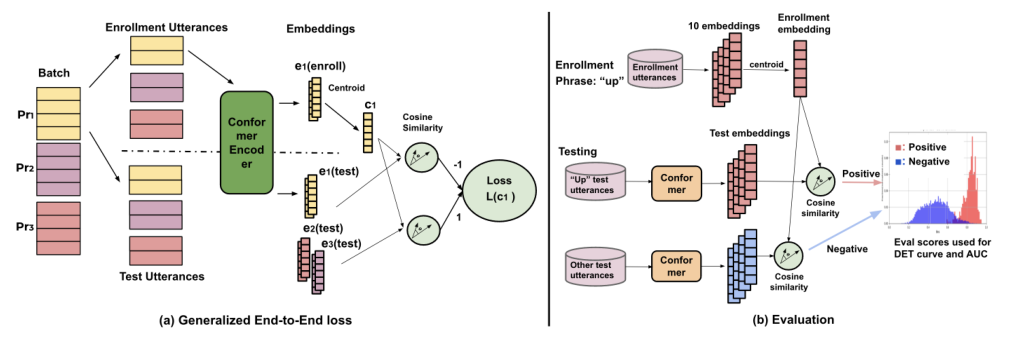

Generalized End-to-End Loss

Introduction to the generalized end-to-end loss for KWS tasks

How it addresses limitations in previous methods

Benefits in terms of efficiency and effectiveness
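For reference, the softmax variant of the GE2E loss from speaker verification (Wan et al., 2018) can be sketched as follows, with keywords taking the place of speakers. Each embedding is scored against every keyword centroid with a scaled cosine similarity `w * cos + b`; its own centroid is computed without that embedding, and the loss is a softmax cross-entropy over centroids. This is an assumption about how the loss carries over to KWS, not the paper's code. `w` and `b` are learnable in practice but fixed here, and their default values are chosen for illustration.

```python
import math


def _cos(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(x * x for x in b)))


def _mean(vectors):
    return [sum(col) / len(vectors) for col in zip(*vectors)]


def ge2e_softmax_loss(batch, w=10.0, b=-5.0):
    """GE2E softmax loss summed over an N x M batch of embeddings.

    batch[j][i] is the embedding of utterance i of keyword j; every keyword
    needs M >= 2 utterances so its exclusive centroid is defined.
    """
    if any(len(rows) < 2 for rows in batch):
        raise ValueError("each keyword needs at least 2 utterances")
    centroids = [_mean(rows) for rows in batch]
    total = 0.0
    for j, rows in enumerate(batch):
        for i, e in enumerate(rows):
            logits = []
            for k in range(len(batch)):
                if k == j:
                    # Exclude the utterance itself from its own keyword centroid.
                    c = _mean([r for t, r in enumerate(rows) if t != i])
                else:
                    c = centroids[k]
                logits.append(w * _cos(e, c) + b)
            # Numerically stable log-sum-exp over all keyword centroids.
            mx = max(logits)
            lse = mx + math.log(sum(math.exp(s - mx) for s in logits))
            total += lse - logits[j]
    return total
```

Unlike a triplet loss, which compares one anchor, one positive, and one negative at a time, every embedding here is pushed toward its own centroid and away from all other centroids in the batch at once, which is one explanation for the faster and more stable convergence.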

Performance Evaluation

DET Curve, AUC, and EER Metrics

Explanation of DET curve, AUC, and EER as performance indicators for keyword spotting systems

How these metrics are applied in the context of GE2E-KWS
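The three metrics come from the same sweep over a decision threshold. At each threshold, the false accept rate (FAR) is the fraction of non-keyword trials scored at or above it, and the false reject rate (FRR) is the fraction of keyword trials scored below it. The DET curve plots FRR against FAR, EER is the point where the two rates meet, and AUC is taken here as the area under the DET curve (lower is better). That AUC definition is an assumption; an ROC-based AUC would be computed differently. The function names are hypothetical.

```python
def det_points(target_scores, nontarget_scores, thresholds):
    """Return (threshold, FAR, FRR) for each candidate threshold."""
    points = []
    for t in thresholds:
        far = sum(s >= t for s in nontarget_scores) / len(nontarget_scores)
        frr = sum(s < t for s in target_scores) / len(target_scores)
        points.append((t, far, frr))
    return points


def equal_error_rate(target_scores, nontarget_scores):
    """Approximate EER at the observed threshold where FAR and FRR are closest."""
    thresholds = sorted(set(target_scores) | set(nontarget_scores))
    pts = det_points(target_scores, nontarget_scores, thresholds)
    t, far, frr = min(pts, key=lambda p: abs(p[1] - p[2]))
    return (far + frr) / 2.0, t


def det_auc(target_scores, nontarget_scores):
    """Area under the DET curve (FRR vs FAR) by the trapezoidal rule."""
    inner = sorted(set(target_scores) | set(nontarget_scores))
    thresholds = [float("-inf")] + inner + [float("inf")]
    pts = det_points(target_scores, nontarget_scores, thresholds)
    # Raising the threshold lowers FAR, so reverse to walk FAR from 0 up to 1.
    curve = [(far, frr) for _, far, frr in reversed(pts)]
    area = 0.0
    for (x0, y0), (x1, y1) in zip(curve, curve[1:]):
        area += (x1 - x0) * (y0 + y1) / 2.0
    return area
```

With perfectly separated scores (every keyword trial above every non-keyword trial), both the EER and the DET AUC are 0. Overlapping score distributions raise both values, so a single number summarizes the whole DET curve, while the EER describes one operating point on it.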

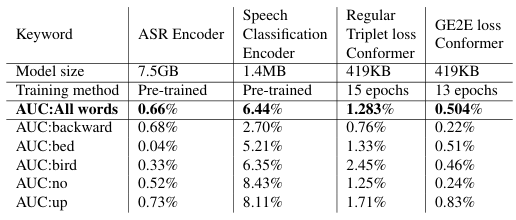

GE2E Conformer Model Performance

Detailed analysis of the GE2E conformer model's performance

Comparison with a 7.5GB ASR encoder in terms of AUC improvement (23.6%)

Conclusion

Summary of GE2E-KWS

Recap of the framework's key features and benefits

Future Directions

Potential areas for further research and development

Opportunities for integrating GE2E-KWS in real-world applications
