EXIT: Context-Aware Extractive Compression for Enhancing Retrieval-Augmented Generation

Taeho Hwang, Sukmin Cho, Soyeong Jeong, Hoyun Song, SeungYoon Han, Jong C. Park · December 17, 2024

Summary

EXIT, an extractive context compression framework, improves both the effectiveness and the efficiency of retrieval-augmented generation (RAG) for question answering (QA). It classifies the sentences of retrieved documents while preserving their contextual dependencies, which enables parallelizable, context-aware extraction that adapts to query complexity and retrieval quality. Evaluations on QA tasks show that EXIT outperforms existing compression methods and uncompressed baselines in accuracy while reducing inference time and token count. Because it operates as a plug-and-play module, EXIT can be added to existing RAG pipelines without architectural modifications.

Introduction

Background

Overview of Question Answering (QA) systems

Importance of context in QA

Current challenges in retrieval-augmented generation (RAG)

Objective

Enhancing RAG in QA through context compression

Improving effectiveness and efficiency in QA systems

Method

Data Collection

Sources for retrieved documents

Data selection criteria for QA tasks

Data Preprocessing

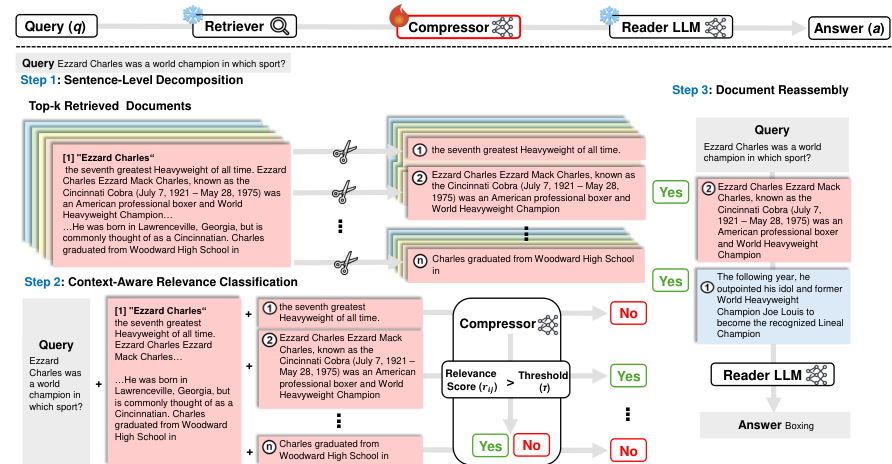

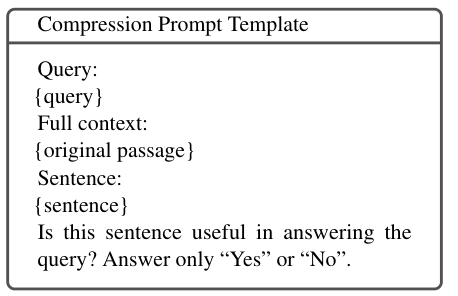

Document representation techniques

Sentence extraction and classification process
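The outline does not spell out the extraction step, so the following is only a minimal sketch under stated assumptions: retrieved documents are split into sentences with a naive regex, and each sentence is scored against the query and kept if its score reaches a threshold. `toy_relevance` is a hypothetical lexical-overlap stand-in for EXIT's trained classifier, and `threshold=0.5` is an illustrative value, not a setting taken from the paper.

```python
import re
from dataclasses import dataclass


@dataclass
class Sentence:
    doc_id: int  # which retrieved document the sentence came from
    index: int   # position of the sentence within that document
    text: str


def split_sentences(text: str) -> list[str]:
    # Naive splitter (assumption): break after ., ! or ? followed by whitespace.
    parts = re.split(r"(?<=[.!?])\s+", text.strip())
    return [p for p in parts if p]


def segment(documents: list[str]) -> list[Sentence]:
    # Flatten all retrieved documents into sentences, remembering their origin.
    return [
        Sentence(doc_id=d, index=i, text=s)
        for d, doc in enumerate(documents)
        for i, s in enumerate(split_sentences(doc))
    ]


def toy_relevance(query: str, sentence: str) -> float:
    # Hypothetical stand-in for a trained classifier: the fraction of
    # query words that also occur in the sentence.
    q = set(re.findall(r"\w+", query.lower()))
    s = set(re.findall(r"\w+", sentence.lower()))
    return len(q & s) / len(q) if q else 0.0


def classify(query: str, sentences: list[Sentence], threshold: float = 0.5) -> list[Sentence]:
    # Keep every sentence whose score reaches the (illustrative) threshold.
    return [s for s in sentences if toy_relevance(query, s.text) >= threshold]


docs = ["Paris is the capital of France. It has many museums.", "Berlin is in Germany."]
kept = classify("What is the capital of France?", segment(docs))
print([(s.doc_id, s.index, s.text) for s in kept])  # [(0, 0, 'Paris is the capital of France.')]
```

Each kept sentence carries its document id and position, which is what allows the selected sentences to be put back in their original order later.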

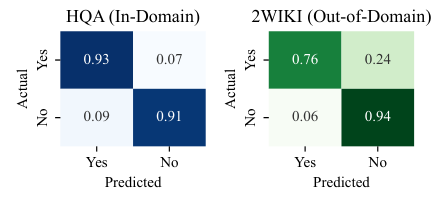

Context-aware Extraction

Mechanism for preserving contextual dependencies
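One reading of "preserving contextual dependencies" is that the classifier judges each sentence while seeing the whole document around it, and that kept sentences are then reassembled in their original order. The sketch below assumes exactly that; the prompt wording, the `<target>` markers, and the names `build_classifier_input` and `reassemble` are hypothetical illustrations, not EXIT's actual template.

```python
def build_classifier_input(query: str, doc_sentences: list[str], target: int) -> str:
    # Show the whole document, with the sentence under judgement wrapped in
    # hypothetical <target> markers, so references such as pronouns can be
    # resolved against neighbouring sentences.
    marked = [
        f"<target>{s}</target>" if i == target else s
        for i, s in enumerate(doc_sentences)
    ]
    return (
        f"Query: {query}\n"
        f"Document: {' '.join(marked)}\n"
        "Is the marked sentence relevant to the query? Answer Yes or No."
    )


def reassemble(doc_sentences: list[str], keep: set[int]) -> str:
    # Emit the kept sentences in their original document order, regardless
    # of the order in which they were selected.
    return " ".join(s for i, s in enumerate(doc_sentences) if i in keep)


sentences = [
    "Paris is the capital of France.",
    "It has many museums.",
    "The Seine flows through it.",
]
print(build_classifier_input("Which city has many museums?", sentences, target=1))
print(reassemble(sentences, {2, 0}))  # Paris is the capital of France. The Seine flows through it.
```

Showing the full document matters for a sentence like "It has many museums.", whose subject can only be recovered from the sentence before it.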

Adaptation to query complexity and retrieval quality
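A fixed top-k budget keeps the same number of sentences for every query. The sketch below contrasts it with selection by a probability threshold `tau`, assuming the classifier outputs one relevance probability per sentence; EXIT's exact selection rule is not given in this outline, and the scores in the example are invented for illustration.

```python
def select_top_k(scores: list[float], k: int) -> list[int]:
    # Fixed budget: always keeps k sentences, however many are relevant.
    ranked = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    return sorted(ranked[:k])


def select_by_threshold(scores: list[float], tau: float = 0.5) -> list[int]:
    # Adaptive budget: keeps every sentence whose relevance probability
    # reaches tau, so the number kept varies with the query and retrieval.
    return [i for i, p in enumerate(scores) if p >= tau]


simple = [0.9, 0.1, 0.05, 0.2]    # one decisive sentence
multi_hop = [0.8, 0.7, 0.6, 0.1]  # evidence spread over three sentences
weak = [0.3, 0.2, 0.1]            # poor retrieval: nothing clearly relevant

print(select_top_k(simple, 2))          # [0, 3]
print(select_by_threshold(simple))      # [0]
print(select_top_k(multi_hop, 2))       # [0, 1]
print(select_by_threshold(multi_hop))   # [0, 1, 2]
print(select_by_threshold(weak))        # []
```

With k=2, top-k pads the simple query with an irrelevant sentence and drops needed evidence from the multi-hop query, while the threshold keeps as many sentences as the scores justify.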

Parallelization

Strategies for parallel processing in context extraction

Benefits of parallelization in efficiency and scalability
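If each sentence's decision depends only on the query, its document, and its own position, and never on another sentence's decision, then all sentences can be classified concurrently, unlike an abstractive compressor that must generate its summary token by token. The sketch below shows that independence with a thread pool; `score_all`, the `Scorer` signature, and `keyword_scorer` are hypothetical, and on a GPU the same property would more naturally be exploited by batching sentences into one forward pass.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable

# Hypothetical scorer signature: (query, document sentences, target index) -> probability.
Scorer = Callable[[str, list[str], int], float]


def score_all(query: str, doc_sentences: list[str], scorer: Scorer, max_workers: int = 8) -> list[float]:
    # No call reads another call's result, so all sentences can be scored at
    # once; Executor.map still returns the scores in sentence order.
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return list(pool.map(lambda i: scorer(query, doc_sentences, i), range(len(doc_sentences))))


def keyword_scorer(query: str, doc_sentences: list[str], target: int) -> float:
    # Toy scorer for the example only: 1.0 if the sentence mentions "capital".
    return 1.0 if "capital" in doc_sentences[target].lower() else 0.0


print(score_all("What is the capital of France?",
                ["Paris is the capital of France.", "It has many museums."],
                keyword_scorer))  # [1.0, 0.0]
```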

Evaluation

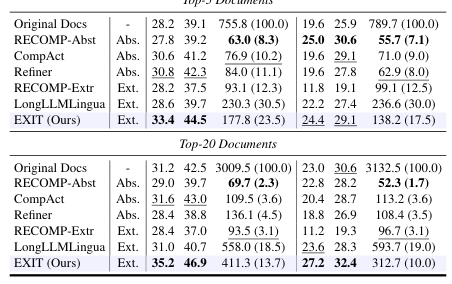

Task Performance

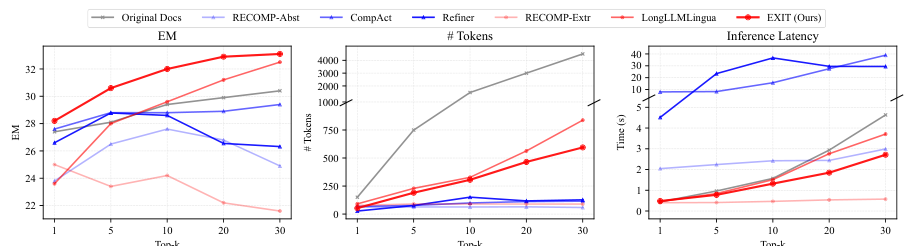

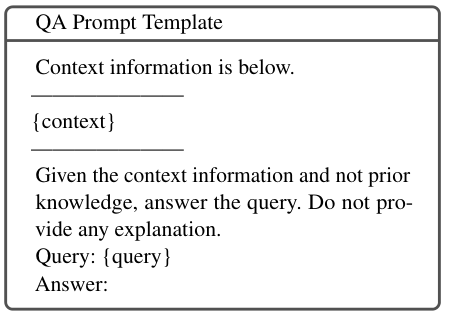

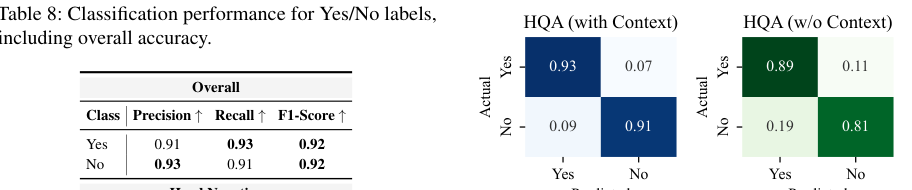

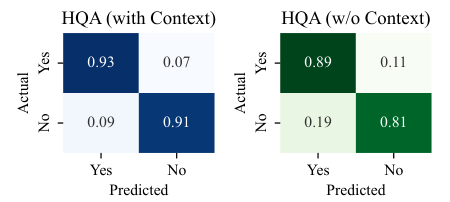

Metrics for assessing accuracy and efficiency
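The outline does not name its accuracy metrics. Assuming the exact match (EM) and token-level F1 conventions of SQuAD-style QA evaluation, where answers are normalized by lowercasing and stripping punctuation, articles, and extra whitespace, the scores can be computed roughly as follows.

```python
import re
import string
from collections import Counter

PUNCTUATION = set(string.punctuation)


def normalize(text: str) -> str:
    # SQuAD-style normalization: lowercase, drop punctuation, drop the
    # articles a/an/the, and collapse whitespace.
    text = text.lower()
    text = "".join(ch for ch in text if ch not in PUNCTUATION)
    text = re.sub(r"\b(a|an|the)\b", " ", text)
    return " ".join(text.split())


def exact_match(prediction: str, gold: str) -> float:
    return float(normalize(prediction) == normalize(gold))


def f1(prediction: str, gold: str) -> float:
    pred_tokens = normalize(prediction).split()
    gold_tokens = normalize(gold).split()
    # Multiset intersection counts each shared token at most min(count) times.
    overlap = sum((Counter(pred_tokens) & Counter(gold_tokens)).values())
    if overlap == 0:
        return 0.0
    precision = overlap / len(pred_tokens)
    recall = overlap / len(gold_tokens)
    return 2 * precision * recall / (precision + recall)


print(exact_match("The Eiffel Tower", "eiffel tower!"))  # 1.0
print(round(f1("Paris, France", "Paris"), 3))  # 0.667
```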

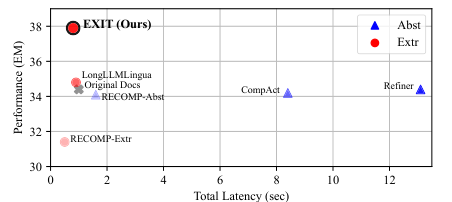

Comparison with existing compression methods and uncompressed baselines

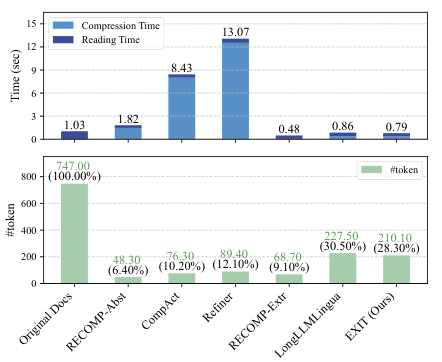

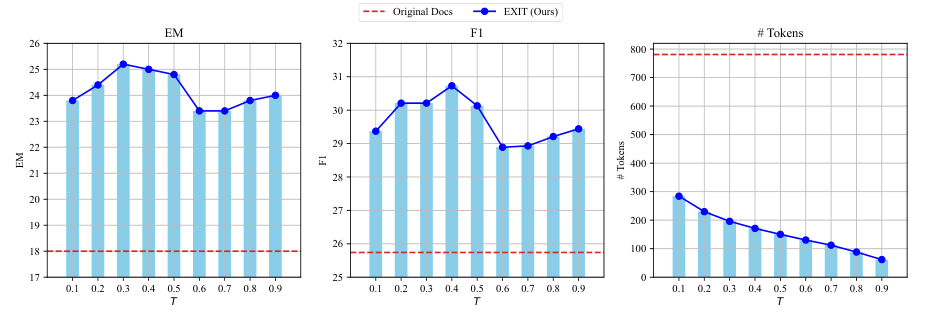

Inference Time and Token Count

Analysis of time reduction and token count minimization
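Compression only reduces end-to-end latency if the time spent compressing is smaller than the time the reader saves on the shorter context. The sketch below makes that accounting explicit; `RunCost`, `token_reduction`, and `speedup` are hypothetical helpers, and the numbers in the example are invented for illustration, not results reported for EXIT.

```python
from dataclasses import dataclass


@dataclass
class RunCost:
    compress_seconds: float  # time spent by the compressor (0 for no compression)
    read_seconds: float      # time spent by the reader LLM on the context it receives
    context_tokens: int      # number of context tokens passed to the reader

    @property
    def total_seconds(self) -> float:
        return self.compress_seconds + self.read_seconds


def token_reduction(original_tokens: int, compressed_tokens: int) -> float:
    # Fraction of context tokens removed by compression.
    return 1 - compressed_tokens / original_tokens


def speedup(baseline: RunCost, compressed: RunCost) -> float:
    # Greater than 1 only if the reader's savings outweigh compression time.
    return baseline.total_seconds / compressed.total_seconds


# Invented numbers, for illustration only.
uncompressed = RunCost(compress_seconds=0.0, read_seconds=2.0, context_tokens=1500)
compressed = RunCost(compress_seconds=0.5, read_seconds=1.0, context_tokens=300)
print(f"{token_reduction(uncompressed.context_tokens, compressed.context_tokens):.0%}")  # 80%
print(f"{speedup(uncompressed, compressed):.2f}x")  # 1.33x
```

A slow compressor can cut tokens sharply and still lose on latency, which is why token count and inference time are reported separately.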

Comparative Analysis

Detailed comparison of EXIT with state-of-the-art approaches

Highlighting improvements in accuracy, inference time, and token count

Implementation

Plug-and-Play Module

Compatibility with existing RAG pipelines

Minimal architectural requirements for integration
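A plug-and-play compressor only needs a fixed slot between retrieval and generation. The sketch below assumes a minimal interface, a `compress(query, documents) -> str` method expressed as a `typing.Protocol`; `Compressor`, `NoCompression`, `rag_answer`, and the toy retriever and reader are hypothetical names, and the prompt template is illustrative.

```python
from typing import Callable, Protocol


class Compressor(Protocol):
    # Any object with this method fits the slot (structural typing).
    def compress(self, query: str, documents: list[str]) -> str: ...


class NoCompression:
    # Uncompressed baseline: concatenate the retrieved documents unchanged.
    def compress(self, query: str, documents: list[str]) -> str:
        return "\n\n".join(documents)


def rag_answer(
    query: str,
    retrieve: Callable[[str], list[str]],
    compressor: Compressor,
    generate: Callable[[str], str],
) -> str:
    # Only the compressor varies; the retriever and reader are untouched.
    context = compressor.compress(query, retrieve(query))
    prompt = f"Context:\n{context}\n\nQuestion: {query}\nAnswer:"
    return generate(prompt)


def toy_retrieve(query: str) -> list[str]:
    return ["Paris is the capital of France.", "Berlin is in Germany."]


def echo_reader(prompt: str) -> str:
    # Stand-in reader that returns its prompt so the assembled input is visible.
    return prompt


print(rag_answer("What is the capital of France?", toy_retrieve, NoCompression(), echo_reader))
```

Swapping the uncompressed baseline for an extractive compressor changes only the object passed as `compressor`.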

Enhancements to RAG Pipelines

Specific improvements in QA performance

Case studies demonstrating enhanced effectiveness

Conclusion

Summary of Contributions

Key innovations in context compression for QA

Future Directions

Potential areas for further research and development

Impact on QA Systems

Expected advancements in QA technology and applications

Subjects: Computation and Language; Information Retrieval; Artificial Intelligence