DRFormer: Multi-Scale Transformer Utilizing Diverse Receptive Fields for Long Time-Series Forecasting

Ruixin Ding, Yuqi Chen, Yu-Ting Lan, Wei Zhang · August 05, 2024

Summary

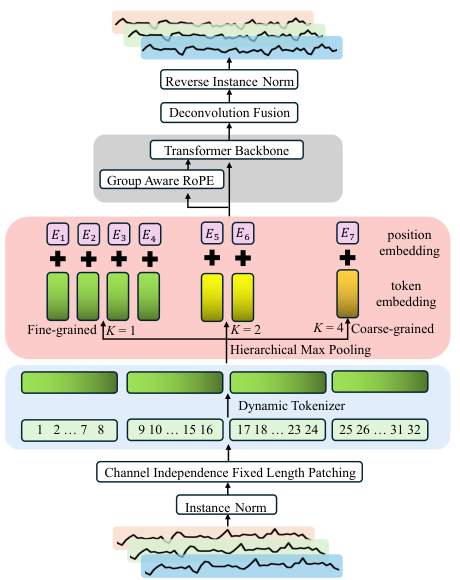

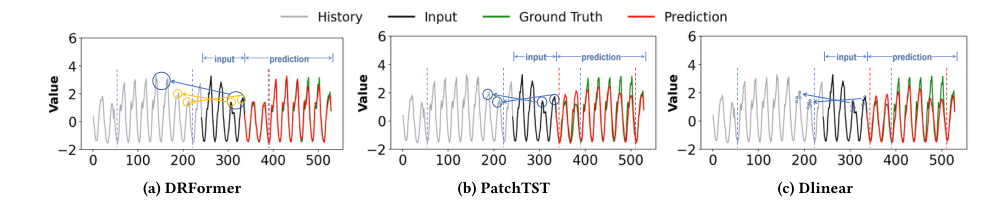

DRFormer, introduced at CIKM '24, combines a dynamic patching strategy with a group-aware RoFormer network for long-term time series forecasting. A dynamic tokenizer, trained with a dynamic sparse learning algorithm, captures the diverse receptive fields and sparse patterns found in time series data. To build hierarchical receptive fields, a multi-scale Transformer uses multi-scale sequence extraction to capture multi-resolution features. A group-aware rotary position encoding then improves position awareness both within and across groups of representations at different temporal scales. Experiments on several real-world datasets show that DRFormer outperforms existing methods.
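DRFormer's position encoding builds on the rotary position embedding (RoPE) introduced by RoFormer. The following is a minimal sketch of standard RoPE, assuming plain Python lists in place of PyTorch tensors. It checks the property that makes RoPE a relative encoding: the score between a rotated query and a rotated key depends only on their positional offset. The group-aware extension, which assigns positions within and across temporal-scale groups, is not reproduced here. `rope` and `dot` are illustrative helpers, not the paper's code.

```python
import math


def rope(vec, pos, base=10000.0):
    """Standard (RoFormer-style) rotary embedding of `vec` at integer position `pos`.

    Each dimension pair (2j, 2j+1) is rotated by the angle pos * theta_j,
    with theta_j = base ** (-2j / d). The dimension d must be even.
    """
    d = len(vec)
    if d % 2:
        raise ValueError("RoPE needs an even embedding dimension")
    out = []
    for i in range(0, d, 2):  # i == 2j, so -i / d == -2j / d
        angle = pos * base ** (-i / d)
        c, s = math.cos(angle), math.sin(angle)
        x, y = vec[i], vec[i + 1]
        out.extend([x * c - y * s, x * s + y * c])  # 2-D rotation of the pair
    return out


def dot(a, b):
    """Plain dot product, standing in for an attention logit."""
    return sum(x * y for x, y in zip(a, b))


q = [0.3, -1.2, 0.8, 0.5]  # toy query vector
k = [1.0, 0.4, -0.7, 0.2]  # toy key vector

# Same offset (m - n = 2) at different absolute positions gives the same score.
score_a = dot(rope(q, 5), rope(k, 3))
score_b = dot(rope(q, 12), rope(k, 10))
print(math.isclose(score_a, score_b, abs_tol=1e-12))  # True
```

Because each pair is rotated by an angle proportional to its position, the product of two rotations reduces to a single rotation by the position difference. This is why RoPE is usually grouped with relative position embedding techniques.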

The paper also reviews related work on Transformer-based models, CNNs for time series forecasting, and relative position embedding techniques. In univariate forecasting, DRFormer outperforms Koopa, PatchTST, TimesNet, FEDformer, ETSformer, Autoformer, Informer, and Reformer, achieving the best or second-best results across the evaluated datasets. The input length is fixed at 96 for every dataset, while prediction lengths vary by dataset from 24 to 720.
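The following sketch shows how a 96-step input window is split into patches. It uses the patch length of 16 and stride of 4 reported in the implementation details for ECL, Traffic, and ETT. It assumes simple static, PatchTST-style slicing with no end padding; DRFormer's dynamic tokenizer goes beyond fixed slicing, so this shows only the baseline idea. The `multi_view_patches` helper and its strides (4, 8, 16) are hypothetical, since the actual stride values of the three multi-view sequences are not given here.

```python
def make_patches(series, patch_len, stride):
    """Split a 1-D series into overlapping fixed-length patches (no end padding)."""
    if patch_len > len(series):
        raise ValueError("patch_len exceeds series length")
    n_patches = (len(series) - patch_len) // stride + 1
    return [series[i * stride : i * stride + patch_len] for i in range(n_patches)]


def multi_view_patches(series, patch_len, strides):
    """Hypothetical multi-view extraction: one patch sequence per stride."""
    return {s: make_patches(series, patch_len, s) for s in strides}


lookback = [float(t) for t in range(96)]  # input length 96, as in the experiments
patches = make_patches(lookback, patch_len=16, stride=4)
print(len(patches), patches[1][:3])  # 21 [4.0, 5.0, 6.0]

views = multi_view_patches(lookback, patch_len=16, strides=(4, 8, 16))
print({s: len(p) for s, p in views.items()})  # {4: 21, 8: 11, 16: 6}
```

The patch count follows from (L - P) // S + 1. Smaller strides produce more, heavily overlapping tokens, while larger strides give a coarser view of the same window.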

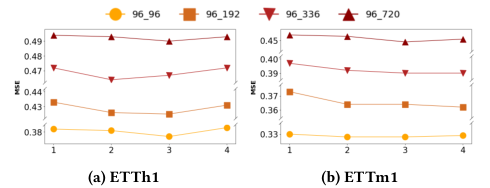

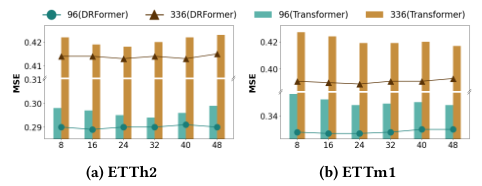

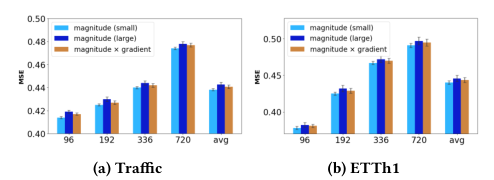

An ablation study on the Traffic dataset evaluates DRFormer with and without its multi-scale features (MS), dynamic tokenizer (DT), and group-aware rotary position encoding (RoPE). Adding these components improves forecasting accuracy, and the full model, with all three enabled, performs best.

The model is implemented in PyTorch, with a hidden dimension of 128 for the ETT datasets and 512 for the others. Patch length and stride are 16 and 4 for the ECL, Traffic, and ETT datasets, and 24 and 2 for ILI. The dynamic linear layers use 8 groups with a sparsity ratio of 0.5. The update frequency of the dynamic sparse learning is set to 30% of the iterations per epoch. The multi-scale Transformer uses three multi-view sequences, each with its own stride.
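The sketch below illustrates how a grouped sparse linear layer could keep its sparsity fixed while its connectivity changes during training. It uses the reported settings: 8 groups, a sparsity ratio of 0.5, and an update every 30% of the iterations in an epoch. The exact pruning and regrowth criteria are not described in this summary. As an assumption, the sketch swaps in a SET-style rule: drop the smallest-magnitude active weights, then reactivate the same number of random inactive positions. Splitting rows into contiguous groups, `swap_frac`, and all function names are hypothetical, and plain lists stand in for PyTorch tensors.

```python
import random


def init_group_masks(n_out, n_in, n_groups, sparse_ratio, rng):
    """Random 0/1 mask where each row-group keeps (1 - sparse_ratio) of its entries."""
    assert n_out % n_groups == 0, "rows must split evenly into groups"
    rows_per_group = n_out // n_groups
    mask = [[0] * n_in for _ in range(n_out)]
    for g in range(n_groups):
        cells = [(r, c) for r in range(g * rows_per_group, (g + 1) * rows_per_group)
                 for c in range(n_in)]
        n_keep = round(len(cells) * (1 - sparse_ratio))
        for r, c in rng.sample(cells, n_keep):
            mask[r][c] = 1
    return mask


def prune_and_regrow(weights, mask, n_groups, swap_frac, rng):
    """SET-style update (assumed, not the paper's rule): per group, deactivate the
    smallest-magnitude active weights and activate as many random inactive ones."""
    n_out, n_in = len(mask), len(mask[0])
    rows_per_group = n_out // n_groups
    for g in range(n_groups):
        rows = range(g * rows_per_group, (g + 1) * rows_per_group)
        active = [(r, c) for r in rows for c in range(n_in) if mask[r][c]]
        inactive = [(r, c) for r in rows for c in range(n_in) if not mask[r][c]]
        k = min(int(len(active) * swap_frac), len(inactive))
        active.sort(key=lambda rc: abs(weights[rc[0]][rc[1]]))
        for r, c in active[:k]:
            mask[r][c] = 0  # prune weakest connections
        for r, c in rng.sample(inactive, k):
            mask[r][c] = 1  # regrow elsewhere, so group sparsity is unchanged
            weights[r][c] = 0.0  # regrown connections start from zero
    return mask


rng = random.Random(0)
n_out, n_in, n_groups, sparse_ratio = 16, 8, 8, 0.5
iters_per_epoch = 100
update_every = max(1, int(0.3 * iters_per_epoch))  # 30% of iterations per epoch

weights = [[rng.gauss(0.0, 1.0) for _ in range(n_in)] for _ in range(n_out)]
mask = init_group_masks(n_out, n_in, n_groups, sparse_ratio, rng)
for step in range(1, 2 * iters_per_epoch + 1):
    # A real training step would update `weights`; the effective weight is weights * mask.
    if step % update_every == 0:
        prune_and_regrow(weights, mask, n_groups, swap_frac=0.2, rng=rng)

density = sum(map(sum, mask)) / (n_out * n_in)
print(update_every, density)  # 30 0.5
```

Because every group prunes and regrows the same number of connections, the density stays at 1 - 0.5 throughout training. Only the positions of the nonzero weights change.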

In multivariate forecasting, DRFormer surpasses state-of-the-art methods, substantially reducing Mean Squared Error (MSE) and Mean Absolute Error (MAE) across datasets. On average, it lowers MSE by 6.20% relative to the best baselines. The paper's references also cover related research on time series forecasting and large language models.
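For reference, the two metrics and a relative error reduction can be computed as in the sketch below. The toy values and the `relative_reduction` helper are hypothetical. The sketch shows only the per-setting formula; how the paper aggregates its 6.20% average across datasets and horizons is not reproduced.

```python
def mse(y_true, y_pred):
    """Mean Squared Error."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


def mae(y_true, y_pred):
    """Mean Absolute Error."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


def relative_reduction(ours, baseline):
    """Percentage by which `ours` lowers the error relative to `baseline`."""
    return 100.0 * (baseline - ours) / baseline


y_true = [1.0, 2.0, 3.0, 4.0]
y_pred = [1.5, 1.5, 3.0, 5.0]
print(mse(y_true, y_pred), mae(y_true, y_pred))  # 0.375 0.5

# Hypothetical baseline MSE of 0.4 versus 0.375 for the model being compared.
print(f"{relative_reduction(0.375, 0.4):.2f}%")  # 6.25%
```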

In conclusion, DRFormer is a significant contribution to long-term time series forecasting: it better captures the diverse characteristics of time series across scales and outperforms existing methods. Its dynamic patching strategy, group-aware RoFormer network, and multi-scale Transformer make it a strong forecasting tool, with potential applications in domains such as finance, healthcare, and traffic prediction.

Introduction

Background

Overview of time series forecasting

Importance of long-term forecasting in various domains

Challenges in long-term time series forecasting

Objective

Aim of the research

Contribution of DRFormer to the field of time series forecasting

Method

Dynamic Patching Strategy

Explanation of dynamic patching

Integration with the RoFormer network

Data Preprocessing

Dynamic tokenizer with dynamic sparse learning algorithm

Capturing diverse receptive fields and sparse patterns

Multi-scale Transformer Model

Multi-scale sequence extraction for multi-resolution features

Group-aware rotary position encoding for enhanced position awareness

Related Work

Transformer-based Models

Overview of transformer models in time series forecasting

Comparison with DRFormer

CNNs for Time-series Forecasting

Comparison with convolutional neural networks

Advantages and limitations

Relative Position Embedding Techniques

Comparison with DRFormer's RoPE technique

Enhancements in intra- and inter-group position awareness

Experimental Results

Performance Comparison

DRFormer's superiority over existing methods

Results on various real-world datasets

Ablation Study

Analysis of DRFormer's components

Impact of multi-scale features, dynamic tokenizer, and RoPE

Implementation Details

Coding and Libraries

Use of PyTorch for implementation

Hidden dimension sizes for different datasets

Model Parameters

Patch length, stride, and dynamic linear parameters

Update frequency and multi-scale transformer configuration

Multivariate Forecasting

Performance Metrics

Mean Squared Error (MSE) and Mean Absolute Error (MAE)

Reduction in error compared to state-of-the-art methods

Research and Articles

Time-series Forecasting

Overview of related research papers

Contributions to the field

Large Language Models

Discussion on advancements in large language models

Relevance to time series forecasting

Conclusion

Summary of Contributions

Recap of DRFormer's features and benefits

Future Work

Potential areas for further research

Applications in various domains

Impact

Significance of DRFormer in the field of time series forecasting

