ChaosMining: A Benchmark to Evaluate Post-Hoc Local Attribution Methods in Low SNR Environments

Ge Shi, Ziwen Kan, Jason Smucny, Ian Davidson · June 17, 2024

Summary

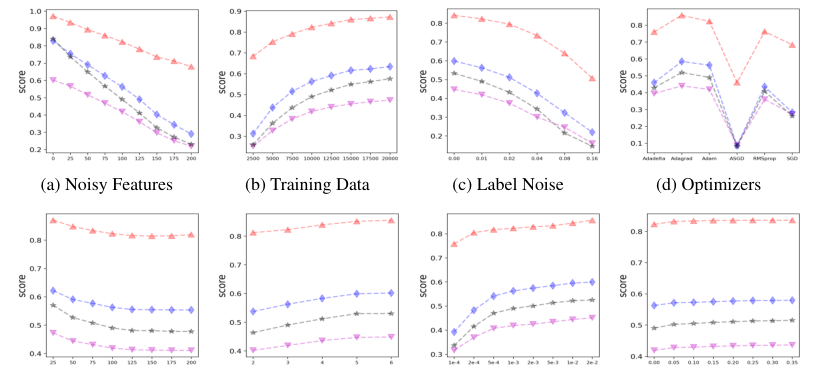

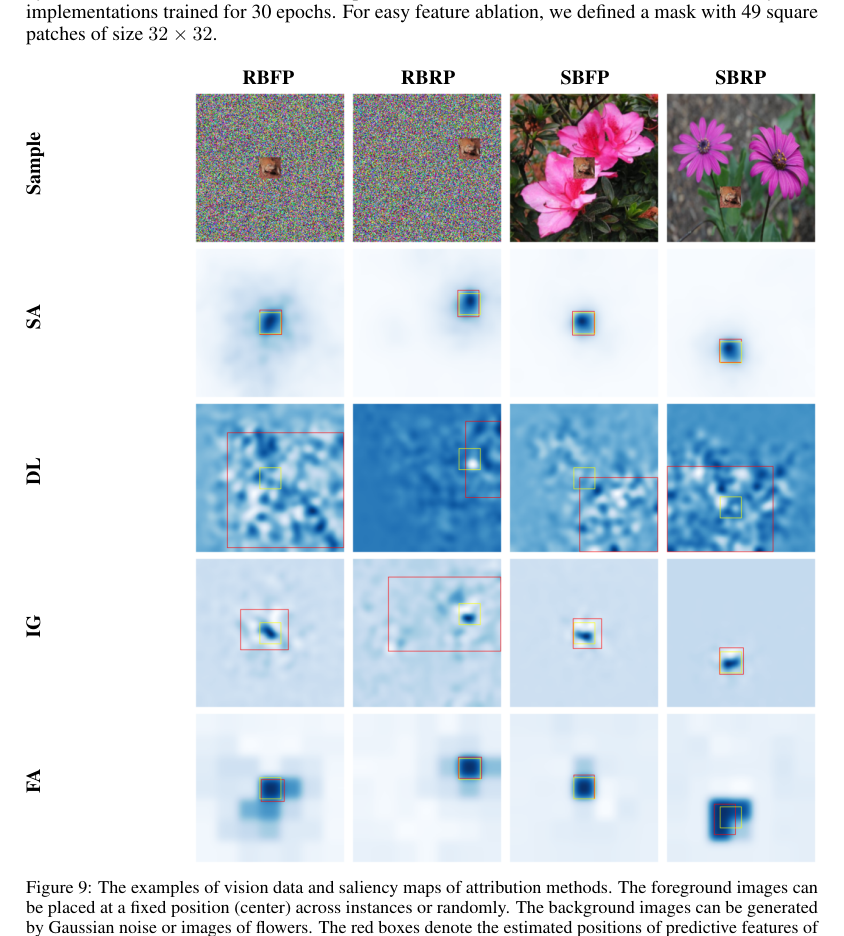

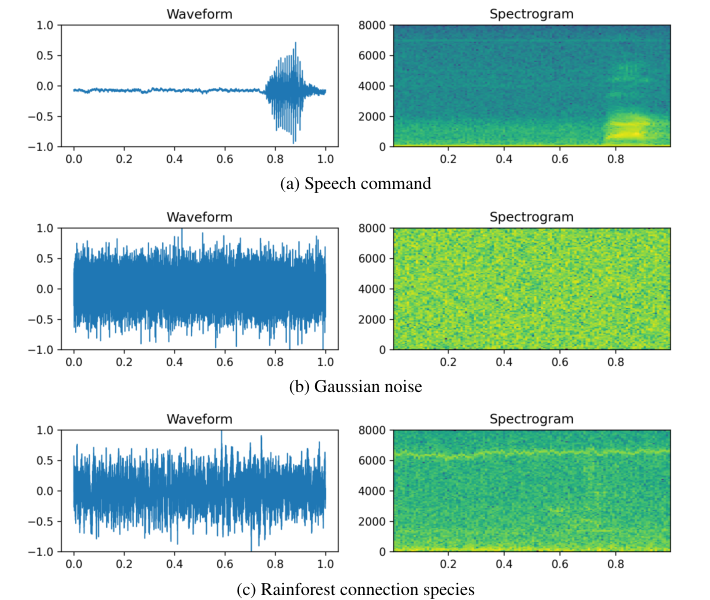

This research evaluates post-hoc attribution methods in low signal-to-noise environments, focusing on their ability to distinguish predictive features from irrelevant ones across symbolic, image, and audio domains. The study develops synthetic datasets and benchmarks various models and attribution techniques (e.g., gradient-based saliency, DeepLift, Integrated Gradients, and Feature Ablation) under different noise conditions. Key findings include:

1. Gradient-based saliency is effective in feature selection but has scalability limitations.

2. Model generalization is linked to the efficacy of attribution methods.

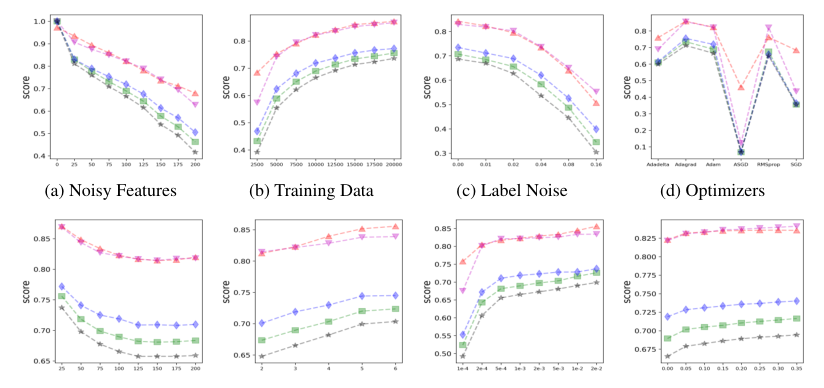

3. The paper introduces an extension of Recursive Feature Elimination (RFE) to neural networks, addressing the lack of empirical evidence on the effectiveness of neural networks for feature selection.

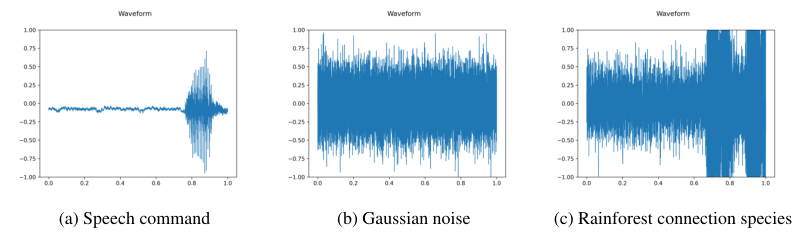

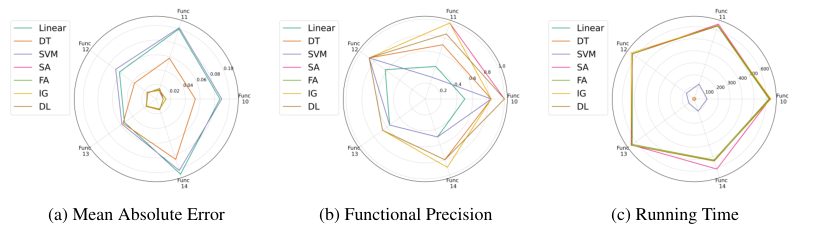

4. Experiments on MLPs, CNNs, ViTs, and RNNs show that performance varies across modalities and noise types. The study also introduces two new metrics, Uniform Score and Functional Precision.

5. Saliency Maps (SA) perform well at low SNR on symbolic data, while AlexNet and VGG13 struggle due to architectural limitations.

6. RFEwNA, a modified RFE, improves feature selection for neural networks, especially in binary classification tasks.

The research contributes to the understanding of XAI methods in diverse scenarios and highlights the need for future studies on more attribution techniques, diverse model configurations, and noise conditions.
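To make the feature-selection use of gradient-based saliency more concrete, here is a minimal sketch in plain Python. It is not the paper's benchmark code. The `toy_model` function is a hypothetical stand-in for a trained network, gradients are estimated by finite differences rather than backpropagation, and `precision_at_k` is an assumed, simplified proxy in the spirit of Functional Precision, whose exact definition is not given in this summary.

```python
import math
import random

random.seed(0)

N_FEATURES = 20          # 2 predictive features + 18 pure-noise features
PREDICTIVE = {0, 1}      # ground truth, known because the data is synthetic

# Small spurious weights mimic a trained model that leaks a little
# dependence onto irrelevant inputs (an assumption for illustration).
SPURIOUS = [random.uniform(-0.01, 0.01) for _ in range(N_FEATURES - 2)]

def toy_model(x):
    """Hypothetical stand-in for a trained network's scalar output."""
    signal = math.sin(x[0]) + x[1] ** 2
    leak = sum(w * v for w, v in zip(SPURIOUS, x[2:]))
    return signal + leak

def saliency(model, x, eps=1e-4):
    """Absolute central finite-difference gradient for each input feature."""
    grads = []
    for i in range(len(x)):
        up, down = list(x), list(x)
        up[i] += eps
        down[i] -= eps
        grads.append(abs(model(up) - model(down)) / (2 * eps))
    return grads

def mean_saliency(model, samples):
    """Average the per-sample (local) saliency into one score per feature."""
    totals = [0.0] * len(samples[0])
    for x in samples:
        for i, g in enumerate(saliency(model, x)):
            totals[i] += g
    return [t / len(samples) for t in totals]

def precision_at_k(scores, predictive, k):
    """Fraction of the k highest-scoring features that are truly predictive."""
    ranked = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    top_k = ranked[:k]
    return len(set(top_k) & predictive) / k, top_k

samples = [[random.uniform(-1.0, 1.0) for _ in range(N_FEATURES)]
           for _ in range(200)]
scores = mean_saliency(toy_model, samples)
precision, top_k = precision_at_k(scores, PREDICTIVE, k=len(PREDICTIVE))
print("top-ranked features:", sorted(top_k), "precision:", precision)
```

In this toy setting the two predictive features dominate the ranking because the model's output barely changes when a noise feature is perturbed. With a real network, the same ranking step would use gradients from automatic differentiation, and the result would depend on how well the model generalizes, which is the link the findings above point to.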

Introduction

Background

Overview of post-hoc attribution methods

Importance of feature selection in low SNR environments

Objective

To assess the performance of attribution techniques in distinguishing predictive features

To identify limitations and strengths across symbolic, image, and audio domains

Method

Data Collection

Synthetic dataset generation

Diverse noise conditions and modalities (symbolic, image, audio)

Data Preprocessing

Techniques for handling low signal-to-noise data

Preparation of models (MLPs, CNNs, ViTs, RNNs)

Attribution Techniques

Gradient-based saliency

Strengths and limitations

Scalability considerations

DeepLift

Performance evaluation

Integrated Gradients

Comparison with other methods

Feature Ablation

Effectiveness in different domains

Recursive Feature Elimination (RFE) extension for neural networks (RFEwNA)

Novel contribution and empirical evidence

Evaluation Metrics

Uniform Score

Functional Precision

Performance comparison across modalities and noise types

Results and Findings

Gradient-based saliency effectiveness and scalability

Model generalization and attribution method correlation

RFEwNA's impact on feature selection

Performance variations across MLPs, CNNs, ViTs, and RNNs

Saliency Maps (SA) in symbolic data and limitations in image models

Noise type influence on attribution methods

Discussion

Interpretation of key findings

Limitations and future research directions

The need for diverse studies on attribution techniques and model configurations

Conclusion

Summary of main contributions

Implications for explainable AI in low SNR environments

Recommendations for future research in the field
