Analysis and Visualization of Linguistic Structures in Large Language Models: Neural Representations of Verb-Particle Constructions in BERT

Hassane Kissane, Achim Schilling, Patrick Krauss·December 19, 2024

Summary

The study by Hassane Kissane, Achim Schilling, and Patrick Krauss examines how BERT internally represents verb-particle constructions, as a window onto linguistic structure in large language models. The researchers, from the Department of English and American Studies, the Neuroscience Lab, and the Pattern Recognition Lab at the University of Erlangen-Nuremberg, analyze these representations layer by layer to see how well BERT captures lexical and syntactic nuances. The results indicate that BERT's middle layers effectively capture syntactic structures, while representational accuracy varies across verb categories. The work deepens our understanding of how deep learning models process language and suggests a complex interplay between network architecture and linguistic representation.

Introduction

Background

Overview of neural language models and their role in processing linguistic structures

Introduction to BERT (Bidirectional Encoder Representations from Transformers) and its significance in natural language processing

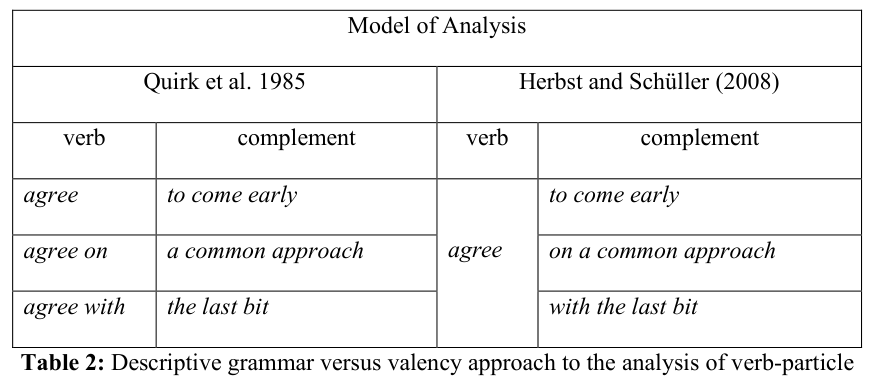

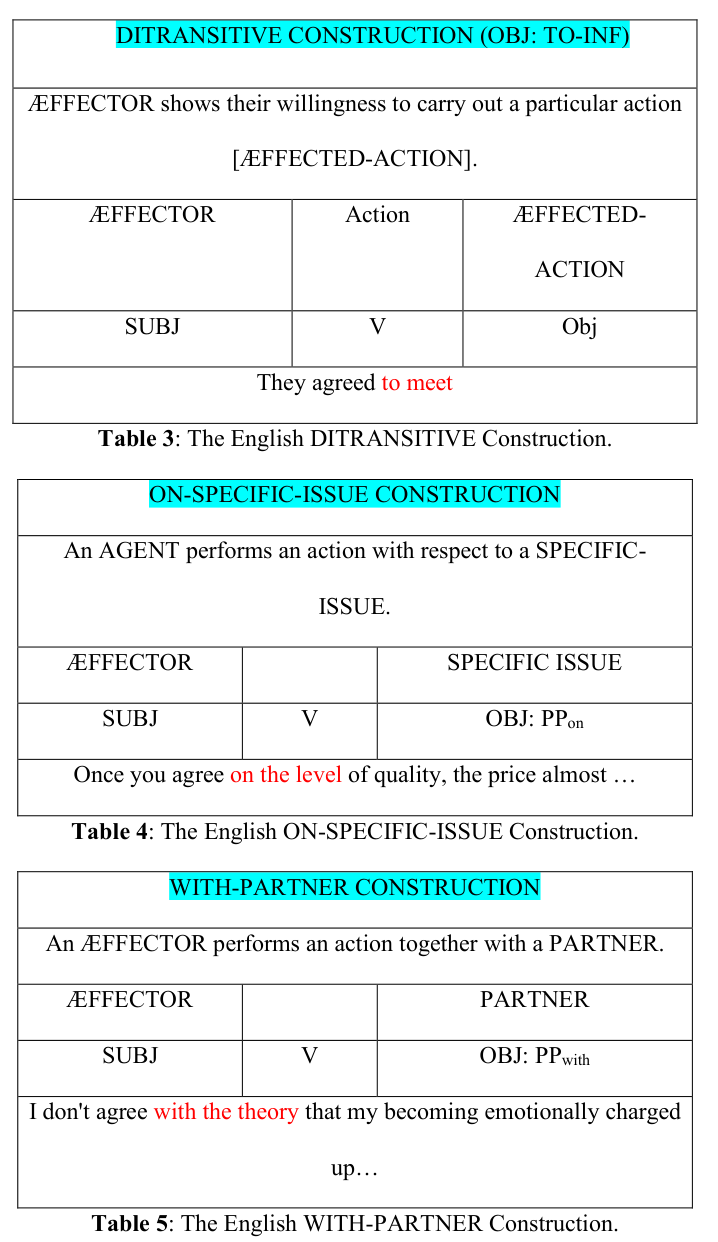

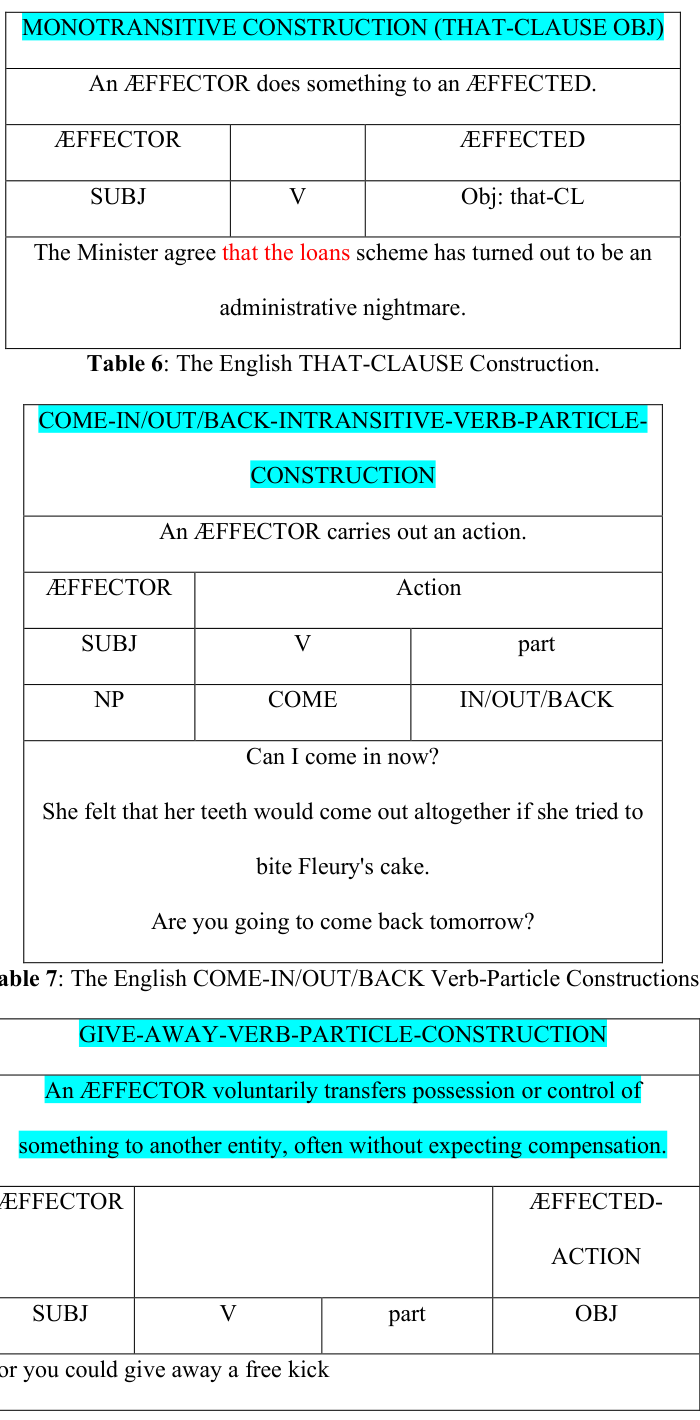

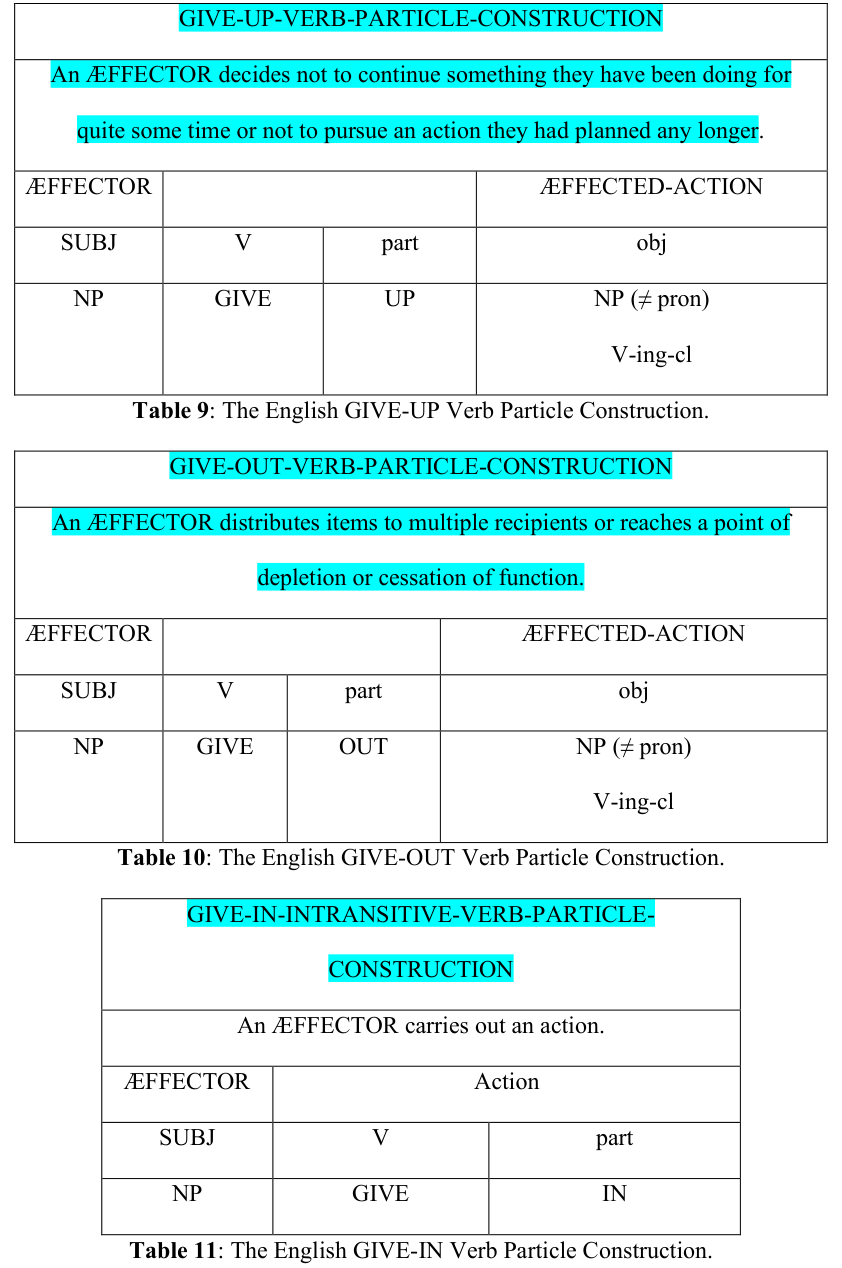

Context of the study: the importance of verb-particle constructions in linguistic analysis and their representation in large language models

Objective

The aim of the research: to investigate how BERT processes verb-particle combinations and captures lexical and syntactic nuances

The focus on transformer-based models and their ability to represent complex linguistic features across different layers

Method

Data Collection

Description of the dataset used for the study, including the selection criteria for verb-particle combinations

Explanation of the process for collecting linguistic data for analysis
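The summary does not say how candidate sentences were selected. As a minimal sketch, assuming a plain list of sentences and a hand-written target list (the `TARGETS` dictionary and `find_vpc_sentences` are hypothetical, not taken from the paper), surface matching of a verb form followed by its particle could look like this:

```python
import re

# Hypothetical target list: each verb-particle construction maps to the verb forms to match.
TARGETS = {
    "give up": ["give", "gives", "gave", "given", "giving"],
    "come back": ["come", "comes", "came", "coming"],
}


def find_vpc_sentences(sentences, targets, max_gap=0):
    """Return (construction, sentence) pairs where a listed verb form is followed
    by its particle with at most `max_gap` intervening words (e.g. 'gave it up')."""
    hits = []
    for sent in sentences:
        # Crude lowercase word tokenization; punctuation is dropped.
        words = re.findall(r"[a-z']+", sent.lower())
        for vpc, forms in targets.items():
            particle = vpc.split()[-1]
            for i, w in enumerate(words):
                # Look for the particle in the window right after the verb form.
                if w in forms and particle in words[i + 1 : i + 2 + max_gap]:
                    hits.append((vpc, sent))
                    break  # one hit per construction per sentence is enough
    return hits
```

Surface matching like this cannot tell a particle from a preposition (*look up the number* vs. *walk up the hill*), so a real pipeline would likely add part-of-speech tags or a parse to filter the hits.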

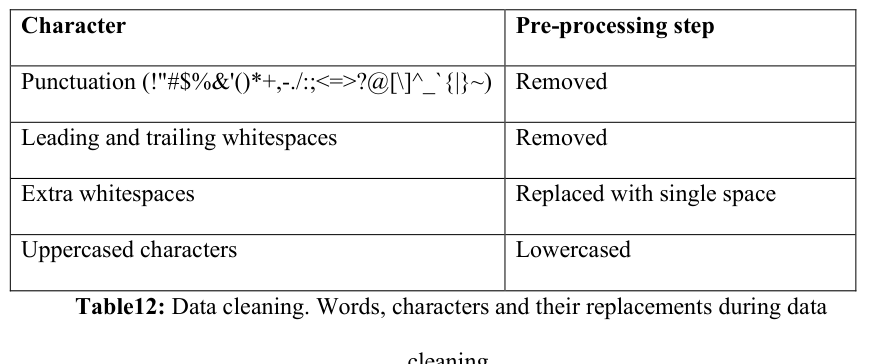

Data Preprocessing

Techniques applied to the collected data to prepare it for BERT's input

Discussion of why preprocessing matters for the accuracy of the model's representations
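BERT splits rare words into WordPiece sub-tokens, so a single verb or particle may occupy several input positions, and word-level targets have to be mapped onto token spans. A minimal sketch, assuming tokens in BERT's format (continuation pieces prefixed with `##`, special tokens `[CLS]`, `[SEP]`, `[PAD]`); the function name and the example tokenization in the test are illustrative only:

```python
# Special tokens that do not correspond to any word in the sentence.
SPECIAL = {"[CLS]", "[SEP]", "[PAD]"}


def word_token_spans(tokens):
    """Map each word to its (start, end) span in a WordPiece token sequence.

    Pieces starting with '##' are merged into the preceding word;
    special tokens are skipped. `end` is exclusive.
    """
    spans = []
    for i, tok in enumerate(tokens):
        if tok in SPECIAL:
            continue
        if tok.startswith("##") and spans:
            # Extend the previous word's span to cover this continuation piece.
            start, _ = spans[-1]
            spans[-1] = (start, i + 1)
        else:
            spans.append((i, i + 1))
    return spans
```

The sub-token vectors inside each span can then be averaged into one vector per word; averaging is an assumption here, since taking only the first sub-token is an equally common choice.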

Model Application

Detailed explanation of how BERT was used to process the verb-particle combinations

Analysis of the model's performance across different layers in capturing syntactic structures and lexical nuances
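With the Hugging Face `transformers` library, calling a `BertModel` with `output_hidden_states=True` returns one hidden-state tensor per layer; for `bert-base` that is 13 tensors (the embedding output plus 12 encoder layers), each of shape `(batch, seq_len, hidden_size)`. Whether the authors used this library is not stated here. The sketch below assumes those tensors have already been converted to nested Python lists for a single sentence, and mean-pools a target token span in every layer to get one vector per layer for the construction (`pool_span_per_layer` is a hypothetical helper):

```python
from statistics import mean


def pool_span_per_layer(hidden_states, span):
    """Mean-pool the token vectors in span=(start, end) for every layer.

    hidden_states[layer][token] is a list of floats, i.e. the per-layer
    hidden states of one sentence with the batch axis already dropped.
    """
    start, end = span
    if not 0 <= start < end <= len(hidden_states[0]):
        raise ValueError("span out of range")
    return [
        # zip(*...) regroups the span's token vectors dimension by dimension.
        [mean(dim) for dim in zip(*layer[start:end])]
        for layer in hidden_states
    ]
```

For a batch of one, the input could be built as `[h[0].tolist() for h in outputs.hidden_states]`, giving a list of 13 pooled vectors for `bert-base`.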

Evaluation Metrics

Description of the metrics used to assess the representational accuracy of BERT's internal representations

Explanation of how these metrics contribute to understanding the model's linguistic processing capabilities
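The summary does not name the metric. One cluster-separability score used in related work from the same group is the generalized discrimination value (GDV): every feature is z-scored and scaled by 0.5, then the mean distance between classes is subtracted from the mean distance within classes, and the result is divided by the square root of the dimensionality. Values near 0 mean no separation; more negative values mean cleaner class clusters. A minimal sketch, assuming this definition is the relevant one here:

```python
import math
from itertools import combinations
from statistics import mean, pstdev


def gdv(points, labels):
    """Generalized discrimination value: more negative = better-separated classes."""
    dim = len(points[0])
    # Z-score every dimension across all points, then scale by 0.5.
    cols = list(zip(*points))
    mus = [mean(c) for c in cols]
    sds = [pstdev(c) for c in cols]
    scaled = [
        [0.5 * (x - m) / s if s > 0 else 0.0 for x, m, s in zip(p, mus, sds)]
        for p in points
    ]
    # Group the scaled points by class label.
    classes = {}
    for p, lab in zip(scaled, labels):
        classes.setdefault(lab, []).append(p)
    groups = list(classes.values())
    if len(groups) < 2 or any(len(g) < 2 for g in groups):
        raise ValueError("need at least two classes with two points each")
    # Mean pairwise distance inside each class, averaged over classes.
    intra = mean(mean(math.dist(a, b) for a, b in combinations(g, 2)) for g in groups)
    # Mean distance between every pair of classes, averaged over class pairs.
    inter = mean(
        mean(math.dist(a, b) for a in g1 for b in g2)
        for g1, g2 in combinations(groups, 2)
    )
    return (intra - inter) / math.sqrt(dim)
```

Applied to the pooled vectors of each layer with verb-category labels, this yields one score per layer, which is the kind of curve a layer-wise comparison needs.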

Results

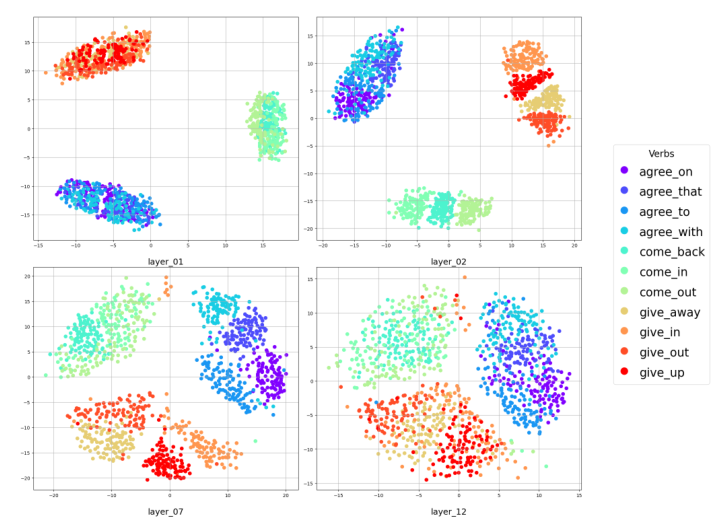

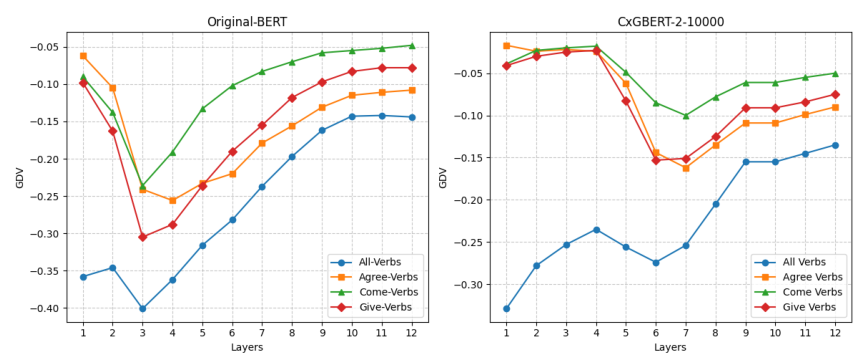

Layer Analysis

Presentation of findings on how BERT's middle layers effectively capture syntactic structures
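Layer-wise findings like these are typically shown as 2-D maps of the token vectors. One standard way to draw such maps is classical (Torgerson) multidimensional scaling, which places points so that their pairwise Euclidean distances are preserved as well as possible. A sketch with NumPy, assuming one vector per example for a single layer; the paper's exact projection method and settings are not given here:

```python
import numpy as np


def classical_mds(points, n_components=2):
    """Classical MDS: embed points in n_components dimensions,
    preserving pairwise Euclidean distances as well as possible."""
    X = np.asarray(points, dtype=float)
    # Squared Euclidean distance matrix.
    sq = np.sum(X**2, axis=1)
    D2 = sq[:, None] + sq[None, :] - 2 * X @ X.T
    n = D2.shape[0]
    # Double-centre: B = -1/2 * J D2 J with J = I - 11^T / n.
    J = np.eye(n) - np.ones((n, n)) / n
    B = -0.5 * J @ D2 @ J
    # eigh returns eigenvalues of the symmetric matrix in ascending order.
    vals, vecs = np.linalg.eigh(B)
    order = np.argsort(vals)[::-1][:n_components]
    # Clip tiny negative eigenvalues caused by rounding.
    vals = np.clip(vals[order], 0, None)
    return vecs[:, order] * np.sqrt(vals)
```

Running this separately on each layer's vectors and colouring points by verb category gives one map per layer, so clustering can be compared visually across depth.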

Discussion of representational accuracy across different verb categories and its implications for linguistic representation
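How "representational accuracy" is measured per verb category is not specified in this summary. As a hedged illustration only, leave-one-out nearest-centroid classification gives a simple per-category accuracy for each layer; this is a swapped-in technique, not necessarily the authors' measure, and the helper names are hypothetical:

```python
import math
from statistics import mean


def centroid(vectors):
    """Component-wise mean of a list of equal-length vectors."""
    return [mean(col) for col in zip(*vectors)]


def loo_nearest_centroid_accuracy(points, labels):
    """Leave-one-out nearest-centroid accuracy, reported per class label."""
    correct, total = {}, {}
    for i, (p, y) in enumerate(zip(points, labels)):
        # Build class centroids without the held-out point.
        groups = {}
        for j, (q, z) in enumerate(zip(points, labels)):
            if j != i:
                groups.setdefault(z, []).append(q)
        cents = {lab: centroid(vs) for lab, vs in groups.items()}
        pred = min(cents, key=lambda lab: math.dist(p, cents[lab]))
        total[y] = total.get(y, 0) + 1
        correct[y] = correct.get(y, 0) + (pred == y)
    return {lab: correct[lab] / total[lab] for lab in total}


def per_layer_accuracy(layers, labels):
    """layers[k] holds one vector per example for layer k."""
    return [loo_nearest_centroid_accuracy(pts, labels) for pts in layers]
```

Reading the result column by column shows which verb categories are well separated in which layers, which is one way to make "varying accuracy across verb categories" concrete.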

Comparative Analysis

Comparison of BERT's performance with other models or methods in processing verb-particle constructions

Insights into the unique advantages and limitations of using transformer-based models for linguistic analysis

Discussion

Architectural Implications

Examination of the complex interplay between network architecture and linguistic representation in BERT

Analysis of how architectural decisions influence the model's ability to capture linguistic nuances

Theoretical Contributions

Discussion of the broader implications of the study for computational linguistics and natural language processing

Insights into how the findings can inform future research and model development

Conclusion

Summary of Findings

Recap of the key results and their significance in understanding BERT's linguistic processing capabilities

Future Directions

Suggestions for further research to explore the limits and potential improvements of BERT and similar models in linguistic representation

Consideration of how the study's findings can be applied to enhance language understanding and processing in real-world applications

Basic info


computation and language

artificial intelligence
