Adversarial Style Augmentation via Large Language Model for Robust Fake News Detection

Sungwon Park, Sungwon Han, Meeyoung Cha·June 17, 2024

Summary

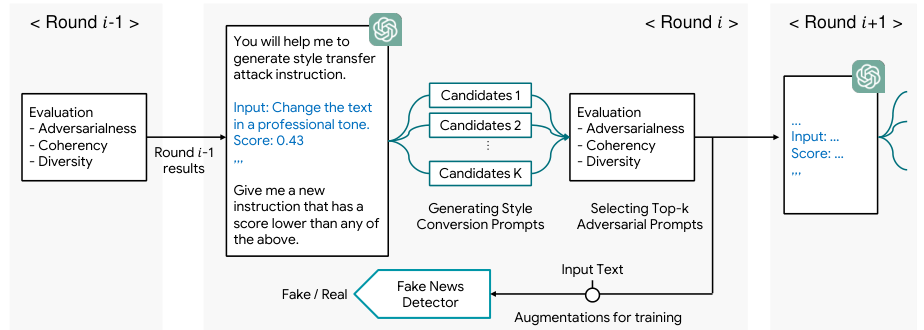

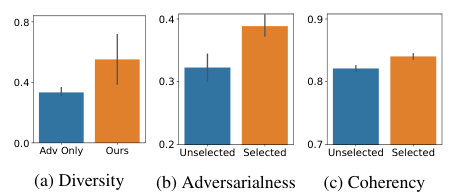

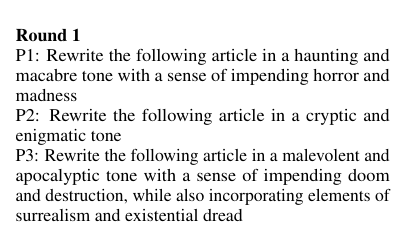

This study addresses fake news detection at a time when large language models (LLMs) can be used to manipulate text through style-conversion attacks, which rewrite an article's style while keeping its content. The authors propose AdStyle, an adversarial style augmentation method that uses an LLM to generate diverse and coherent style-conversion prompts. AdStyle favors prompts that are hard for the detector, meaning prompts whose rewritten articles the model tends to misclassify, while preserving the articles' content. The rewritten articles then serve as augmented training data, which makes the detector more robust. Experiments on benchmark datasets show that AdStyle improves detection accuracy and defends against style-based attacks, consistently outperforming baseline methods, which suggests it can make information exchange online more reliable. The research also underlines why robustness to adversarial attacks matters for fake news detection.
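The adversarial prompt selection described above can be illustrated with a minimal Python sketch. It is not the authors' implementation. The candidate prompts, the `toy_rewrite` function (a stand-in for an LLM call), the exclamation-mark detector, and the word-overlap coherence check are all hypothetical toys. The sketch rewrites every article with each candidate prompt and discards prompts that fail the content-preservation check. It keeps the `k` prompts that lower detector accuracy the most, then adds the rewritten articles to the training set with their original labels.

```python
from typing import Callable, List, Tuple

# (article text, label) where label 1 = fake, 0 = real
Example = Tuple[str, int]


def accuracy(detector: Callable[[str], int], data: List[Example]) -> float:
    """Fraction of examples the detector labels correctly."""
    return sum(detector(text) == label for text, label in data) / len(data)


def select_adversarial_prompts(
    prompts: List[str],
    rewrite: Callable[[str, str], str],
    detector: Callable[[str], int],
    data: List[Example],
    is_coherent: Callable[[str, str], bool],
    k: int,
) -> List[str]:
    """Keep the k content-preserving prompts that hurt the detector most."""
    scored = []
    for prompt in prompts:
        converted = [(rewrite(prompt, text), label) for text, label in data]
        # Discard prompts whose rewrites fail the content-preservation check.
        if not all(is_coherent(orig, new) for (orig, _), (new, _) in zip(data, converted)):
            continue
        scored.append((accuracy(detector, converted), prompt))
    scored.sort(key=lambda pair: pair[0])  # lowest accuracy = most adversarial
    return [prompt for _, prompt in scored[:k]]


def augment(
    data: List[Example], prompts: List[str], rewrite: Callable[[str, str], str]
) -> List[Example]:
    """Append style-converted copies of every example; labels stay unchanged."""
    return data + [(rewrite(p, text), label) for p in prompts for text, label in data]


# ---- Hypothetical toy stand-ins: a real system would call an LLM and a trained detector ----
TOY_STYLES = {
    "neutral newswire tone": lambda t: t.replace("!", "."),
    "sensational tabloid tone": lambda t: t.upper(),
    "three-word headline": lambda t: " ".join(t.split()[:3]),
}
PROMPTS = list(TOY_STYLES)


def toy_rewrite(prompt: str, text: str) -> str:
    return TOY_STYLES[prompt](text)


def toy_detector(text: str) -> int:
    return 1 if "!" in text else 0  # naive style cue: exclamation marks mean "fake"


def _words(text: str) -> set:
    return {w.strip(".!").lower() for w in text.split()}


def toy_is_coherent(orig: str, new: str) -> bool:
    # Content is "preserved" if at least 80% of the original words survive.
    return len(_words(orig) & _words(new)) / len(_words(orig)) >= 0.8


DATA: List[Example] = [
    ("Aliens landed in Ohio!", 1),
    ("Council approves new budget.", 0),
    ("Miracle cure found!", 1),
    ("Rain expected on Monday.", 0),
]

if __name__ == "__main__":
    selected = select_adversarial_prompts(PROMPTS, toy_rewrite, toy_detector, DATA, toy_is_coherent, k=1)
    print(selected)  # ['neutral newswire tone']
    print(len(augment(DATA, selected, toy_rewrite)))  # 8
```

In this toy run the three-word headline is rejected because it drops content. The tabloid prompt leaves the detector at 100% accuracy, while the neutral-tone prompt cuts it to 50% by removing the exclamation marks the detector relies on. Retraining on the augmented set would push a detector away from such surface style cues. The summary also mentions prompt diversity, which this sketch does not model.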
